Online promotion, spam and fraud — Content Moderation

Page contents

- Definitions: Online promotion, spam and fraud in user content

- What's at stake

- How to detect online promotion, spam and fraud

- What actions should be taken with online promotion, spam and fraud

Definitions: Online promotion, spam and fraud in user content

Spam refers to "unsolicited usually commercial messages (such as emails, text messages, or Internet postings) sent to a large number of recipients or posted in a large number of places", typically with the intent to promote content that is often misleading.

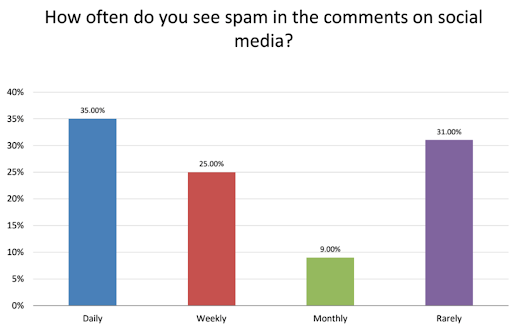

In a survey by Orbit Media, QuestionPro and Foundation of more than 1,000 people about spam, 35% of respondents reported seeing spam comments on social media every day (see graphic below).

Number of people (out of ~1000) seeing spam on social media daily, weekly, monthly or rarely


For platforms and applications, spam mainly takes the form of unwanted advertising or promotion, but it also covers problematic behaviors such as:

- personal promotion, i.e. comments from users promoting their personal work, website or content, but also promoting false information and services, and asking for likes, shares, follows or clicks

- company promotion, i.e. comments from companies and brands promoting their products and solutions, and asking for likes, shares, follows or clicks

- sexual promotion, i.e. comments promoting sexual services (including false services), websites or content

- scams, fraud and phishing attempts, i.e. comments from fraudsters that can compromise a user's finances or personal details if the user is tricked

- and more specifically catfishing, i.e. a type of phishing where a user creates a fake profile and identity, generally on a dating or social networking website, with the goal of seducing a victim into a sexual or emotional relationship to extort money or personal data in the end

Moreover, these behaviors typically involve platform circumvention, i.e. users are led to leave the platform and continue the exchange on another one.

What's at stake

Platforms and applications should decide whether they want to detect promotional messages and spam: there is no legal obligation to do so, even though spam itself is illegal in most countries. However, promotion and spam can affect the reputation of the platform and the safety of its users. A platform that chooses not to detect such content risks damaging its reputation, as users may leave because of a "negative customer experience", in Meta's words.

Below are some facts and arguments in favor of detecting online promotion and spam:

- Spam and online promotion are forms of abuse of a platform as they are an improper use of its services.

- Promotion, spam and fraud put the safety of users at risk, especially when false information or services are spread.

- For example, a comment could ask users for personal information in exchange for something else (an existing or false service). This could erode users' trust in the platform and ultimately damage its reputation.

- Another example that could harm a platform's reputation is a comment asking users to perform an action (like, share, etc.) in order to get a service (which, again, could be false) or to unlock a function that does not exist on the platform (a non-existent button, for instance).

- In the context of trust and safety, catfishing is also a concern because it can be used to manipulate and exploit vulnerable individuals. It can also be used to perpetrate other types of online fraud or harassment.

- Users of a social network with a lot of spam and promotion, for example, might find it harder to communicate with each other because of the volume of irrelevant and disruptive messages.

- Company promotion can also hurt a platform directly, since it may advertise potential competitors.

More generally, platforms and applications should prohibit platform circumvention, both to keep users safe and comfortable and to protect their own reputation and guard against unfair competition. Upwork, a marketplace for freelancers, says that freelancers getting paid by clients outside its platform is dangerous and against its terms of service for two reasons:

- "it can hurt" the freelancers if they give away their contact and billing information

- "it hurts [Upwork's] business too" as they do not get the fee they normally get when clients pay freelancers

How to detect online promotion, spam and fraud

Apps and platforms generally rely on four types of measures to detect online promotion, spam and fraud attempts:

- user reporting

- human moderators

- keyword-based filters

- ML models

User reporting

Platforms should consider relying on the help of their users, who can report potential spam, promotion or platform circumvention attempts to the trust and safety team so that these are reviewed by moderators and handled by the platform. This is a simple way to moderate: users only need a way to submit a report. The main disadvantage is the delay needed to properly handle a potentially large volume of reports.

To simplify the handling of user reports and distinguish between types of issue, it is important that users can categorize their reports, with categories such as scam / phishing, sexual promotion or company promotion.
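As a rough illustration of categorized reports, the sketch below groups incoming reports into one review queue per category and surfaces the most-reported content. The class and function names, the field names and the extra `OTHER` category are assumptions made for this example, not part of any specific platform's API.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class ReportCategory(Enum):
    # The first three categories come from the text; OTHER is an assumed catch-all.
    SCAM_PHISHING = "scam / phishing"
    SEXUAL_PROMOTION = "sexual promotion"
    COMPANY_PROMOTION = "company promotion"
    OTHER = "other"


@dataclass(frozen=True)
class UserReport:
    reporter_id: str
    content_id: str
    category: ReportCategory


def group_by_category(reports):
    """Build one review queue per category, including empty ones."""
    queues = {category: [] for category in ReportCategory}
    for report in reports:
        queues[report.category].append(report)
    return queues


def most_reported(reports, top_n=3):
    """Return (content_id, report_count) pairs, most-reported first."""
    counts = Counter(report.content_id for report in reports)
    return counts.most_common(top_n)


# Illustrative data only.
reports = [
    UserReport("u1", "c42", ReportCategory.SCAM_PHISHING),
    UserReport("u2", "c42", ReportCategory.SCAM_PHISHING),
    UserReport("u3", "c7", ReportCategory.COMPANY_PROMOTION),
]
queues = group_by_category(reports)
```

Sorting by report count is only one possible way to prioritize a backlog; a real queue would likely also account for the category's severity and the age of the report.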

Human moderators

Human moderators are also good at detecting this kind of content, especially because spam and promotion comments often resemble one another. Note, however, that human moderation has some common drawbacks, such as limited speed and consistency.

When it comes to fraud attempts such as catfishing, it can be challenging to identify such cases since the attackers frequently use techniques to make their profiles seem real. Yet there are some warning signs that content moderators can watch out for, like:

- Inconsistencies in the profile: catfishers frequently use fictitious photos or take pictures from other people's profiles. A profile image that appears too good to be true may indicate a catfishing attempt. Also, if the person's information looks contradictory or inconsistent, it can be a red flag that the profile is fake.

- Too much, too soon: catfishers frequently move quickly to build a relationship with their victim. Early on in the conversation, they might compliment the victim or express affection. It can be an indication of catfishing if someone seems to be moving too quickly or seems overly invested in the relationship from the start.

- Refusal to meet in person: a catfisher may cite a multitude of justifications to avoid seeing their victim in person. It may be a hint that the profile is fake if the person is reluctant or unwilling to meet up in person.

- Requests for money or personal information: catfishers may attempt to trick their victims into giving them money or personal information. Early on in a relationship, if someone asks for money or sensitive personal information, it might be a catfishing attempt.
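The four warning signs above could be recorded by moderators as a simple checklist. The sketch below is only a hypothetical triage aid: the field names and the threshold of two signs are assumptions chosen for illustration, and the final decision stays with a human moderator.

```python
from dataclasses import dataclass, fields


@dataclass
class CatfishingSigns:
    # One flag per warning sign listed in the text.
    inconsistent_profile: bool = False
    too_much_too_soon: bool = False
    refuses_to_meet: bool = False
    asks_for_money_or_data: bool = False


def observed_signs(signs):
    """Return the names of the warning signs a moderator has ticked."""
    return [f.name for f in fields(signs) if getattr(signs, f.name)]


def needs_priority_review(signs, threshold=2):
    """Flag a profile for priority review once enough signs are present.

    The default threshold is an arbitrary assumption, not an established rule.
    """
    return len(observed_signs(signs)) >= threshold


profile = CatfishingSigns(too_much_too_soon=True, asks_for_money_or_data=True)
```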

Keyword-based filters

Keywords are not a very good way to detect such content: no specific words reliably identify it, and it is characterized by syntactic patterns rather than by vocabulary.

Lists of words such as follow, share or click could still be used to extract a smaller sample of data for human moderators to verify, but this approach would probably miss many examples and produce many false positives (i.e. comments that contain these words but are neither spam nor promotion).
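The limits of a word list are easy to see in a minimal sketch. The watch list below uses the three words mentioned in the text, and the comments are invented for illustration; the function name and tokenization rule are assumptions.

```python
import re

# Watch list taken from the words mentioned in the text.
WATCH_WORDS = {"follow", "share", "click"}
WORD_PATTERN = re.compile(r"[a-z']+")


def flag_for_review(comment):
    """Return the watch words found in a comment (empty set if none)."""
    tokens = set(WORD_PATTERN.findall(comment.lower()))
    return tokens & WATCH_WORDS


# Invented examples.
comments = [
    "Click here to win a free phone!!!",         # spam, caught
    "Thanks, I will share this with my team.",   # harmless, false positive
    "Cheap watches at my-shop dot example",      # spam, missed
]
flagged = [c for c in comments if flag_for_review(c)]
```

Here two comments are flagged, one of which is harmless, while the third (promotional) comment is not flagged at all, which is why such a filter is better suited to pre-selecting content for human review than to making decisions on its own.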

ML models

ML models are likely to be more suitable than a keyword approach for this topic. Trained on annotated datasets containing spam and promotion comments, as well as circumvention attempts, they can learn to detect such content.
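To make the idea of learning from annotated comments concrete, here is a deliberately small sketch using a multinomial naive Bayes classifier written with the standard library only. Naive Bayes is chosen here purely for illustration and is not named in this guide; the training comments and labels are invented, and a production model would need far more data and a more capable architecture.

```python
import math
import re
from collections import Counter

TOKEN = re.compile(r"[a-z0-9']+")


def tokenize(text):
    return TOKEN.findall(text.lower())


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.doc_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in set(labels)}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Log prior plus log likelihood of each known token.
            score = math.log(self.doc_counts[label] / total_docs)
            for token in tokenize(text):
                if token not in self.vocab:
                    continue  # ignore words never seen in training
                score += math.log(
                    (counts[token] + 1) / (total_words + len(self.vocab))
                )
            scores[label] = score
        return max(scores, key=scores.get)


# Invented annotated dataset, for illustration only.
train_texts = [
    "follow my page and click this link for free followers",
    "visit my website for cheap deals click now",
    "win free money now message me on telegram",
    "great photo thanks for sharing",
    "i disagree with this article but it is well written",
    "see you at the meeting tomorrow",
]
train_labels = ["spam", "spam", "spam", "ok", "ok", "ok"]

model = NaiveBayes().fit(train_texts, train_labels)
```

Unlike the keyword list, the classifier weighs every word it has seen in training, so a comment can be scored as spam without containing any single "forbidden" word.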

Automated moderation can also be used to moderate images and videos submitted by users by detecting for instance cases of impersonation or scams.

What actions should be taken with online promotion, spam and fraud

Scam, fraud and phishing

When identifying cases of scam, fraud or phishing, platforms and apps should always restrict or permanently disable the responsible user accounts. Where possible, platforms should also identify the user's IP address to hinder the creation of new fraudulent accounts.

In the worst-case scenario, when a user has been tricked and their money or personal data stolen, platforms should help with the procedures involving local authorities by providing as much information as possible to identify the fraudster or scammer in real life.

Promotion and spam

Actions that can be taken when encountering spam and promotion in comments are mainly the following:

- Reminding the author that this is against the rules of the platform and giving them a warning

- Also reminding all users of the rules and conditions of use of the platform

- Removing the problematic comment or post from the platform to protect its safety and reputation

- Suspending the user's account for a limited time or permanently, especially if they have already received one or more warnings
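The actions above are often combined into an escalation ladder. The sketch below shows one hypothetical way to encode it: the thresholds (two warnings, then one temporary suspension, then a permanent one) and all names are assumptions for illustration, and each platform would set its own policy.

```python
from collections import defaultdict
from enum import Enum


class Action(Enum):
    WARN = "remove content and warn the author"
    TEMP_SUSPEND = "remove content and suspend the account temporarily"
    PERMANENT_SUSPEND = "remove content and suspend the account permanently"


# Hypothetical thresholds, to be tuned per platform.
MAX_WARNINGS = 2
MAX_TEMP_SUSPENSIONS = 1


class EscalationPolicy:
    def __init__(self):
        # Number of confirmed spam/promotion violations per user.
        self.violations = defaultdict(int)

    def record_violation(self, user_id):
        """Register a confirmed violation and return the action to take."""
        self.violations[user_id] += 1
        count = self.violations[user_id]
        if count <= MAX_WARNINGS:
            return Action.WARN
        if count <= MAX_WARNINGS + MAX_TEMP_SUSPENSIONS:
            return Action.TEMP_SUSPEND
        return Action.PERMANENT_SUSPEND


policy = EscalationPolicy()
actions = [policy.record_violation("user-1") for _ in range(4)]
```

With these assumed thresholds, the same user receives two warnings, then a temporary suspension, then a permanent one, while a different user starts again at the warning stage.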

Spam and fraud should always be taken seriously, as they may tarnish a company's reputation and brand. Spam can also fuel misinformation and be used to spread fake news. More generally, users who are scammed on a platform may start to feel unsafe, lose trust and leave. Detecting this kind of content is therefore essential from the company's point of view.
