How to spot AI images and deepfakes: tips and limits

Find out which tricks you can use to manually detect AI-generated images! This blog post brings together the main clues to look for and explains the difficulties and limitations of human screening.

Table of contents

- Introduction

- Details in images that can help determine if it is AI

- Concrete cases

- Why the task is hard

- Available tools to be certain the image is not fake

- Conclusion

Introduction

The rise of AI-generated images and deepfakes has introduced new complexities to the digital world. According to an Everypixel study, people are creating an average of 34 million images per day using text-to-image tools such as DALL-E 2 or Adobe Firefly. These images, while fascinating and often highly realistic, can be used to mislead or deceive people.

Generative AI has become a central concern in Trust & Safety. New regulations are currently being developed and applied, and platforms hosting user-generated content have begun implementing new features to keep people informed and aware of misleading content. But knowing how to identify AI-generated content yourself is crucial, whether you’re a journalist, researcher, or just a curious user. In this article, we'll explore how human perception can help in identifying AI-generated images, why this task is becoming increasingly hard, and the tools available to verify the authenticity of images.

Human perception

Human perception is our primary defense against AI-generated images. It is also what we might call “intuition”. Even if you think otherwise, everyone has some; it just takes a little practice! While AI has become incredibly sophisticated, there are still subtle details that humans can pick up on and that reveal an image's artificial origin.

Details in images that can help determine if it is AI

Textures

One of the common giveaways in AI-generated images is the image texture. AI often struggles with replicating natural textures, leading to surfaces that appear unnaturally smooth or inconsistent.

The most common example is the representation of human skin, which often lacks pores or appears overly polished, far from reality. Although flawless skin is often depicted in magazines and on social networks, pimples are common in real life. AI-generated human faces are sometimes so perfect they look like they come straight out of an animated movie.

The person in the image below ticks all the boxes: no flaws, no pores, perfect, smooth, etc.

AI image showing a woman cooking

But the image also shows that texture issues are not only skin-related. Almost every visible object in this image seems to have a texture problem:

- Look at the fabrics, for example: from the towels in the foreground to the bags in the background, all are very smooth and sorely lacking in the fine mesh detail that would make them more plausible.

- It's the same for the tomatoes. I don't know about you, but I've never seen such beautiful, symmetrical, shiny tomatoes when shopping.

- And let's not talk about the salt poured over them. It too has a very soft texture and almost looks like snow, which isn't very realistic.

These are just a few examples of texture issues you could find in AI-generated images. You can use them as an inspiration to better identify such glitches in the images you encounter online.

Human faces, hands and limbs

AI-generated human faces are getting more convincing, but they’re not perfect.

Beyond the skin texture issues described above, always pay attention to features like ears, hands and eyes, as these areas often have subtle distortions. Eyes might lack symmetry or have unusual reflections, while fingers can be misshapen or positioned in unnatural ways.

Fingers and hands are probably the most difficult body parts to generate for AI. The following mistakes are the most common ones:

- Finger count: Have a look at the image given as an example above: the person’s left hand seems to be lacking a finger. This is one of the most common errors made by AI models: an AI-generated hand might have too many or too few fingers.

- Finger shape: Even when the number of fingers is correct, their shape may be unnatural. They might seem too long, too short, or simply disproportionate. They could also be distorted in a physically impossible way.

- Finger positioning: Finally, their position can look unnatural or odd. Fingers spread apart in an exaggerated way or overlapping in a strange way are clear indicators of an AI image.

Text and digits

AI typically struggles with generating text within images. Here are some common mistakes found in AI images:

- Character shape: Letters and numbers in AI images often appear warped, stretched or twisted in unnatural ways.

- Spacing: Spacing between characters might be inconsistent. Characters might be too far apart, too close together or overlapping. The natural flow of the text is then disrupted, which can be a sign of AI generation.

- Readability: AI often produces nonsensical text made of random characters that do not belong to any known language.

- Font: The generated text might switch between different fonts within the same word or sentence.

- Context: Finally, some generated texts or digits are completely out of context because they don’t match the style or the language expected for the location represented in the image.

Let’s practice! See the image example below. Everything looks normal at first sight. But if you zoom in on the signs, you will notice that they contain random, nonsensical text with a strange font and inconsistent sizes.

AI image showing the front of a restaurant

Background

The background in AI-generated images can often appear strange or out of place. This can result in a disjointed or surreal image that feels "off."

Here are some common issues related to backgrounds in AI images:

- Lighting and shadows: The background might not match the lighting of the foreground, with shadows falling in different directions or absent where they should be present.

- Blurring: Producing sharp backgrounds is difficult. Backgrounds are very often overly blurred, with the detail concentrated on the main subject of the image.

- Context: Backgrounds sometimes include some surreal or nonsensical elements such as objects with unexpected shapes and positions.

- Pattern repetition: AI-generated backgrounds sometimes contain repetitive textures, colors or objects that look unnatural.

- Perspective: Finally, perspective in AI images can be inconsistent or completely wrong, making the image feel physically impossible.

Image plausibility

All the features above are good indicators of whether an image is likely to be real or not. But sometimes, AI images don’t show any of these hints.

The last resort is then to look at whether the content of the image is probable or not. In other words, the question you should ask yourself as a human with world knowledge and good intuition is “Would it be possible for me to see this in real life?”.

The example below shows incredibly big snowdrops growing in the wild, but also on a woman’s hat… And it feels particularly unreal!

AI image showing a woman surrounded by snowdrops

Concrete cases

Let’s have a look at a few famous fake photos that have appeared online in recent months.

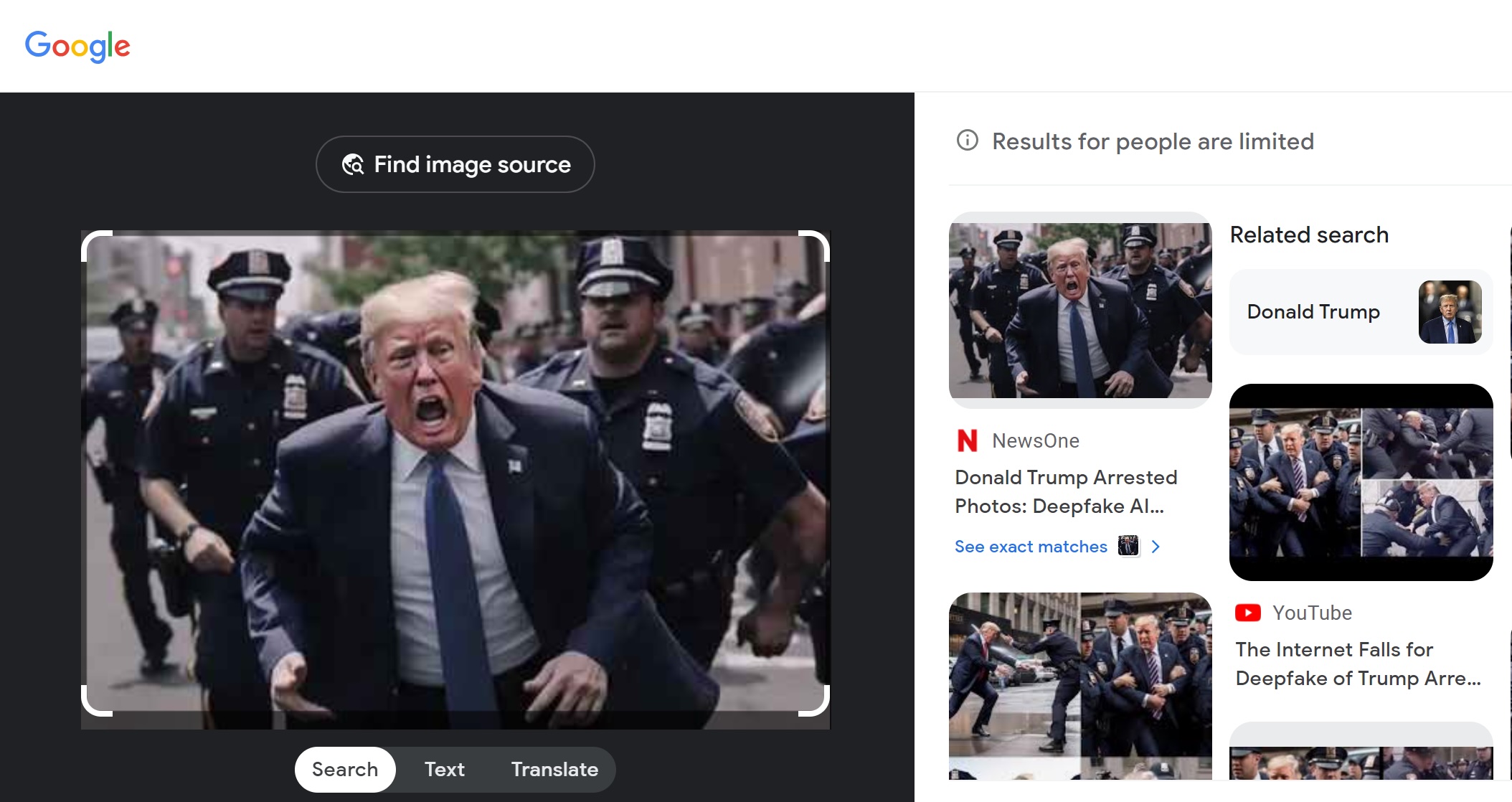

Image 1 shows Donald Trump, a well-known American politician, being arrested by policemen. The image background is blurred and details are not sharp. The background also looks like a copy-paste of police officers and uniforms, which seems a little strange. The face in the foreground is quite realistic, but the fingers of his right hand don't look complete.

Image 2 also shows Donald Trump in an outfit that looks like a mix between a suit and a jail uniform. This again feels very odd. The background is blurred and shows the judges’ table with far too many armchairs to be true! And let’s talk about Trump’s arms and hands: the arms seem too short and fingers are missing…

Finally, Image 3 shows Pope Francis wearing an exaggerated white down jacket, which is unlikely. The texture of the image is a little too smooth, and the lighting of the foreground compared to the background seems far too strong.

All these indicators should be easy to spot for trained eyes but can go unnoticed if you just take a quick look.

Image showing three known fakes

Why the task is hard

Despite the clues outlined above, spotting AI-generated images is becoming increasingly difficult. The evolution of AI technology and the increasing number of models make these images more realistic than ever.

"AI or not?" game

One way to get an idea of how difficult this task is is to look at how well humans perform. We at Sightengine have built an “AI or not?” game to challenge our website’s visitors and test their GenAI detection skills.

Results of the game are based on 150 images, half of them AI-generated and half of them real. The 2,500 participants who took the test achieved an overall accuracy of 71%. This is a good score, but more detailed stats show that 20% of the images fooled most players, meaning that more than half of the players mislabelled them. Players also seem to have more doubts when it comes to identifying real images: only 23% of real images are correctly recognized by 80% or more of the players, compared with 45% of AI images.

The above takeaways suggest that distinguishing between real and generated content is becoming increasingly challenging, even for trained eyes.
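
To make the game statistics above more concrete, here is a minimal sketch of how such figures could be derived from per-image votes. It assumes that accuracy is the share of correct answers over all answers; the `summarize` function, the data layout and the sample votes are all hypothetical and are not the game's actual code or data.

```python
from typing import Dict, List, Tuple

# Each entry: (is_ai, number_of_correct_answers, number_of_answers).
# The votes used below are made up for illustration; they are not the game's data.
Votes = Tuple[bool, int, int]


def summarize(images: List[Votes]) -> Dict[str, float]:
    """Compute overall accuracy and per-image statistics from player votes."""
    total_correct = sum(correct for _, correct, _ in images)
    total_answers = sum(answers for _, _, answers in images)

    # An image "fools most players" when more than half of its answers are wrong.
    fooled = sum(1 for _, correct, answers in images if correct / answers < 0.5)

    def well_recognized_share(is_ai: bool) -> float:
        # Share of images of one kind that at least 80% of players labelled correctly.
        subset = [(c, n) for kind, c, n in images if kind == is_ai]
        hits = sum(1 for c, n in subset if c / n >= 0.8)
        return hits / len(subset)

    return {
        "accuracy": total_correct / total_answers,
        "fooled_most_players": fooled / len(images),
        "real_well_recognized": well_recognized_share(False),
        "ai_well_recognized": well_recognized_share(True),
    }


sample = [
    (True, 90, 100),   # AI image, easy to spot
    (True, 40, 100),   # AI image that fooled most players
    (False, 85, 100),  # real image, easy to recognize
    (False, 65, 100),  # real image that raised many doubts
]
stats = summarize(sample)
```

With this made-up sample, the overall accuracy is 0.70 and one image out of four fooled most players. A decent average score can therefore hide individual images that are very hard to judge.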

AI models improvements

AI image generation has advanced remarkably in recent years, thanks to improvements in models such as GANs (Generative Adversarial Networks) and diffusion models. These advances make it harder to tell real images from AI-generated ones, especially because they have significantly enhanced:

- Creativity and diversity: Recent models allow users to generate images in a wide variety of artistic styles and offer more control over stylistic elements (a specific artistic medium or movement is easily reproduced). Prompts also allow users to specify what they want to generate with incredible flexibility, and models can understand subtle details in text inputs to then provide an accurate visual representation of the request.

- Realism, details and quality: Generators can now produce high-resolution images that can capture small details, from texture to lighting, making the images look more realistic. While earlier models sometimes produced blurry or distorted images, new models are able to mimic professional photography or art.

The four images below are examples of how models can evolve. The same prompt “photo close up of an old sleepy fisherman's face at night” was used to generate each one of these images with different versions of Midjourney models:

- Model Version 5.0, released in March 2023

- Model Version 5.2, released in June 2023

- Model Version 6.0, released on December 20, 2023

- Model Version 6.1, released on July 30, 2024

Image showing results of the same prompt with four different MJ model versions

These are good examples of how quick and impressive the evolution of GenAI results is. The generated face shows more and more realistic details. In the first version, the face almost looks animated because of how smooth the skin looks. The final image shows human facial flaws similar to those you would find in any older man’s face. This makes the image look very authentic, closer to what we see in real life.

Available tools to be certain the image is not fake

When human judgment is not enough, several tools can help verify the authenticity of an image.

Reverse image search

Reverse image search tools such as Google Lens allow you to upload an image and see where it has appeared online.

Using Google Lens is simple. There are two ways to do it:

- Instead of running a regular text search, search with an image

- If you encounter an image online, right-click it: you should be able to use the same feature from there

After searching with your image, you get a list of links where the image seems to appear. This can help you determine if the image has been altered, reused, or taken out of context. If an image appears on multiple unrelated websites, it may be a sign that it has been manipulated. Sometimes, known deepfakes are even indicated as such on some web pages.

Image showing a reverse search example with Google Lens

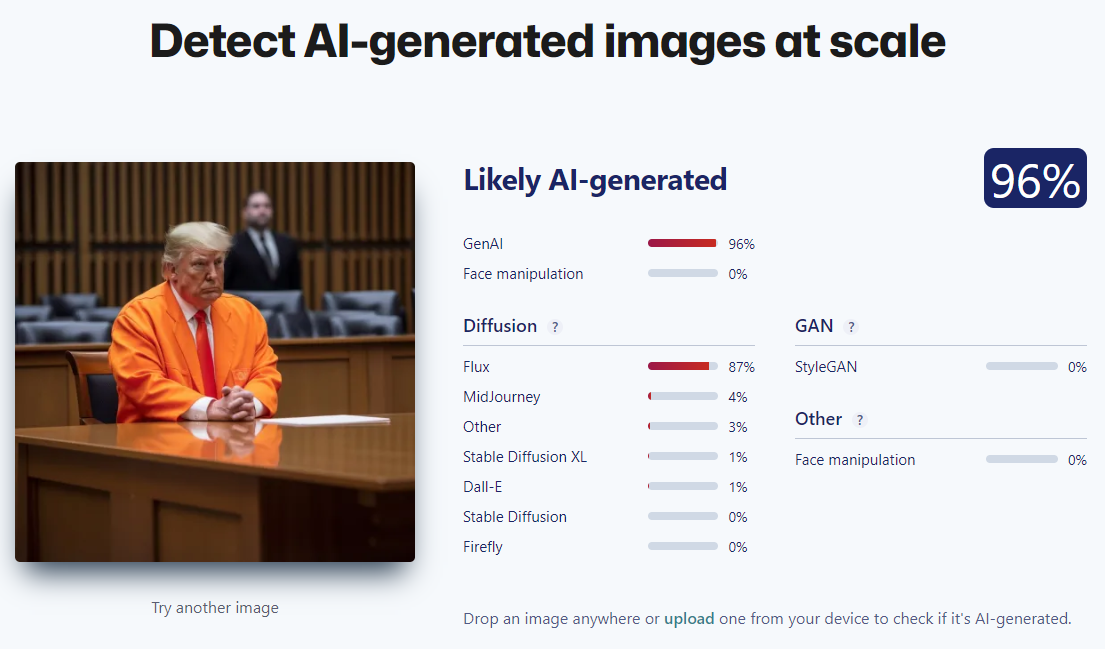

AI detector

There are specific AI detection tools designed to analyze images and determine whether they are AI-generated. They can be useful, especially because many images do not contain any clues indicating they could be AI-generated.

We at Sightengine have been working on an AI-generated image detection model that was trained on millions of artificially-created and human-created images spanning all sorts of content such as photography, art, drawings, memes and more.

Image showing Sightengine's AI detector

This model can be used by individuals to check whether the images they encounter online are real. It can also be used by platforms and apps seeking to protect their users from deepfakes, for instance: the model flags AI images, and the platform can then apply specific moderation processes to the detected images.

The algorithm does not rely on watermarks, i.e. visible or invisible signs added to digital images that make it possible to identify the generator. Watermarks are not always present on images and can easily be removed from them. It is the visual content that is analyzed during the process, which makes the approach much more scalable.

Images generated by the main models currently in use (Stable Diffusion, Midjourney or GANs, for instance) are flagged and associated with a probability score.

Have a look at our documentation to learn more about the model and how the API works.
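
As a rough illustration of how a platform might act on such a probability score, the sketch below maps a score to a moderation action. The `route_image` function, the `Decision` type and both thresholds are hypothetical; they are not part of the Sightengine API, and the actual response format is described in the documentation.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str   # "allow", "review" or "flag"
    score: float  # probability that the image is AI-generated


def route_image(ai_score: float,
                review_threshold: float = 0.5,
                flag_threshold: float = 0.9) -> Decision:
    """Map a detector's probability score to a moderation action.

    Both thresholds are hypothetical defaults, not values recommended by
    any particular detector; tune them on your own data.
    """
    if not 0.0 <= ai_score <= 1.0:
        raise ValueError("ai_score must be a probability between 0 and 1")
    if ai_score >= flag_threshold:
        return Decision("flag", ai_score)
    if ai_score >= review_threshold:
        return Decision("review", ai_score)
    return Decision("allow", ai_score)


# Example scores are invented for illustration.
decisions = [route_image(s).action for s in (0.03, 0.62, 0.97)]
```

Using two thresholds instead of one leaves room for a human review step on uncertain images, which fits the limits of both human and automated screening discussed in this article.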

Conclusion

As AI continues to evolve, the line between reality and artificiality blurs. While human perception can still recognize inconsistencies in AI-generated images, the task is becoming increasingly challenging, even when knowing all the tips! Engaging with tools such as AI detectors can provide additional assurance in verifying the authenticity of an image. Staying informed and vigilant is key in safely navigating the internet.

Read more

Self-harm and mental health — Content Moderation

This is a guide to detecting, moderating and handling self-harm, self-injury and suicide-related topics in texts and images.

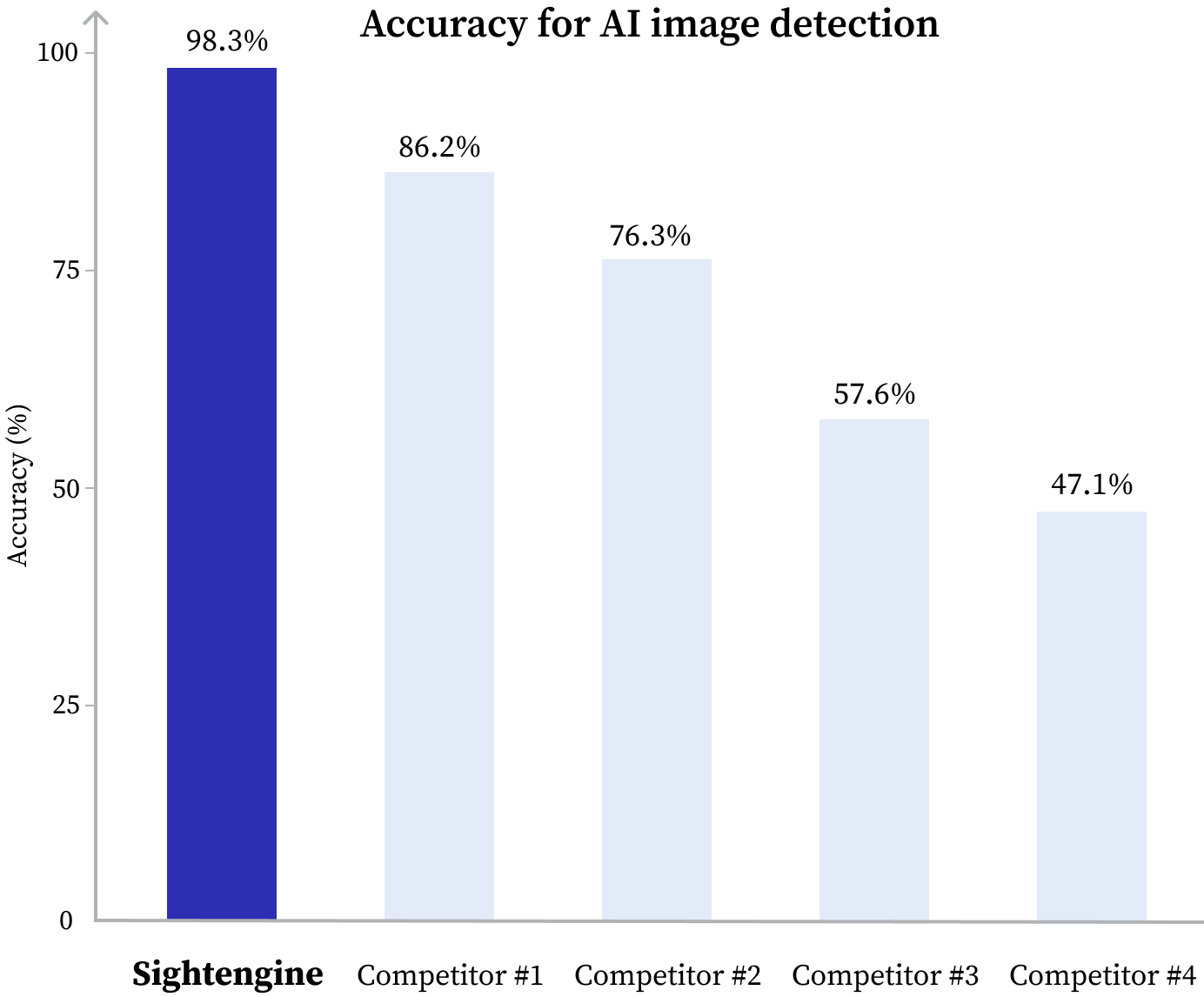

Sightengine Ranks #1 in AI-Media Detection Accuracy: Insights from an Independent Benchmark

Learn how Sightengine performed in an independent AI-media detection benchmark, outperforming competitors with advanced methodologies.