Content Moderation Guides & Resources

Learn and stay informed with the Knowledge Center.

Read our guides and articles on Trust & Safety.

Guides to Content Moderation

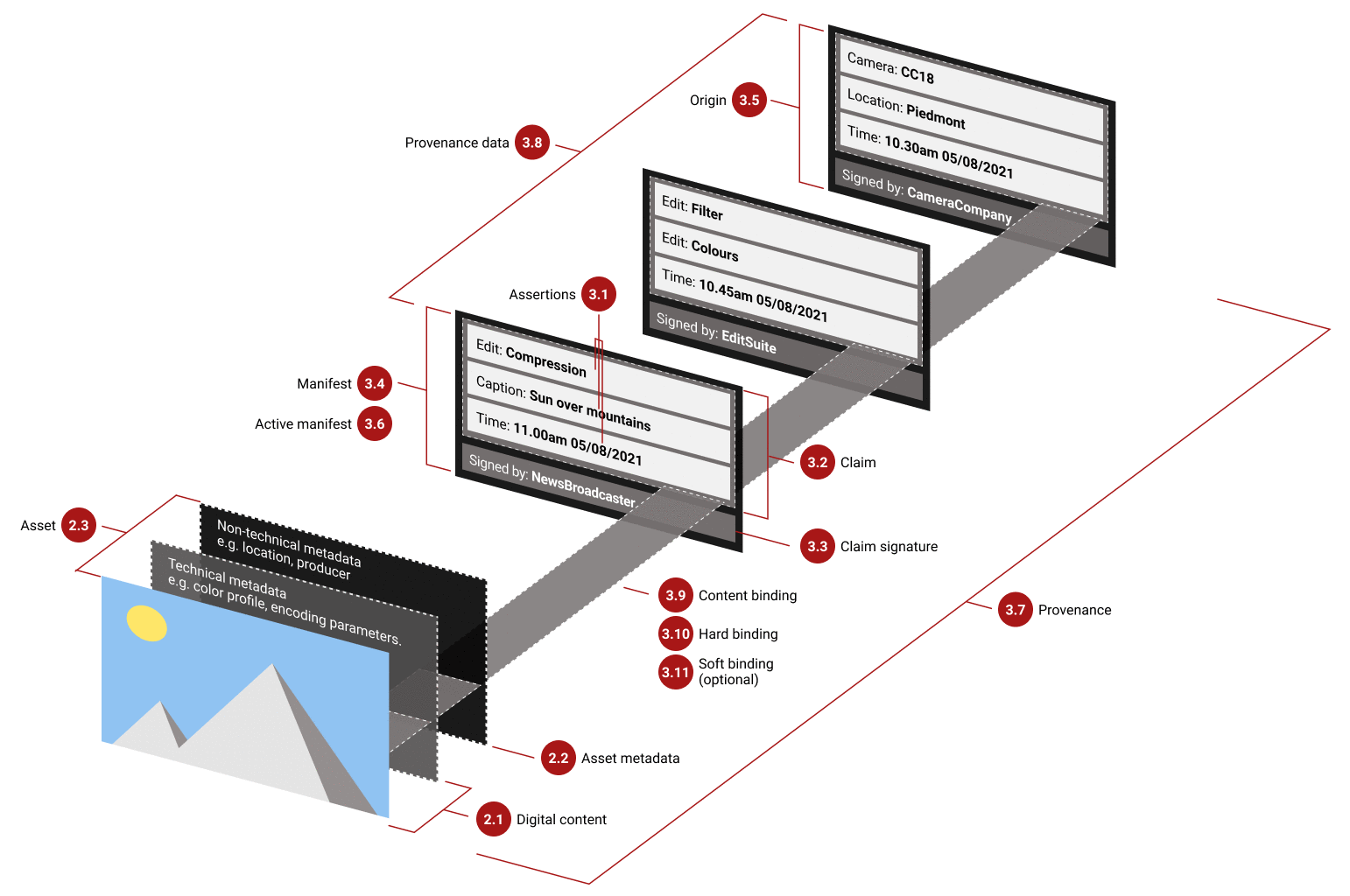

Python C2PA Tutorial: A Hands-on Guide to Verifying Images and Detecting Tampering

A developer's guide to C2PA, showing how to read metadata, detect tampering, and verify content authenticity with Python examples.

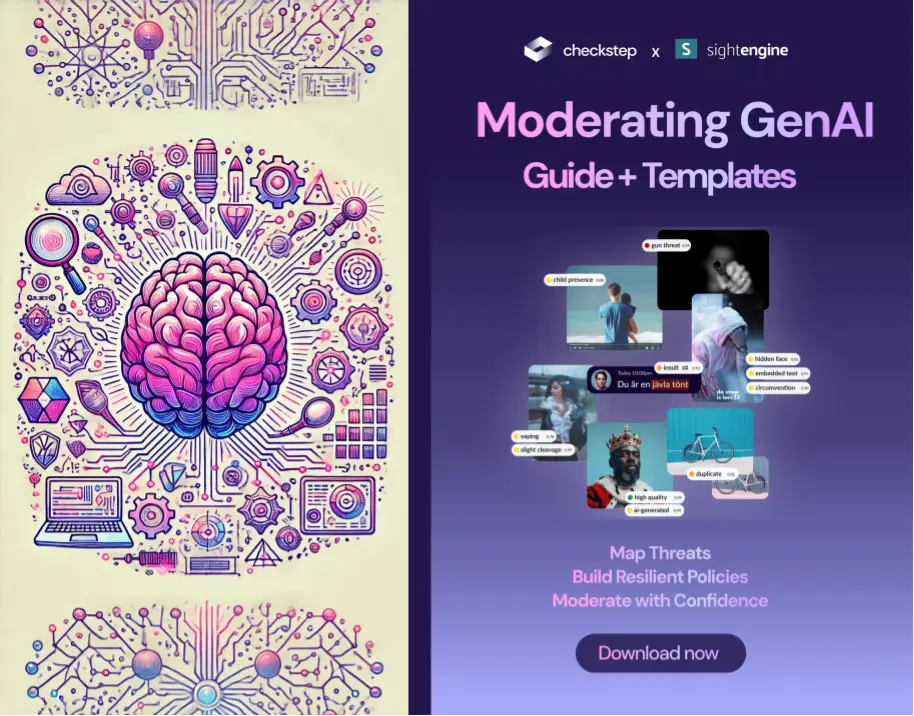

Detecting and moderating AI-generated content with technology

Learn about the evolution of GenAI models and the best strategies to detect AI-generated content.

How to spot AI images and deepfakes: tips and limits

Learn how to recognize AI-generated images and deepfakes, and improve your detection skills to stay ahead of the game.

AI images: testing human abilities to identify fakes

Results and insights from our "AI or Not" game: how well humans identify AI images, when they get fooled, and what we can learn from this.

AI-generated images: The Trust & Safety Guide

This is a complete guide to the Trust & Safety challenges, regulations and developments around generative AI imagery.

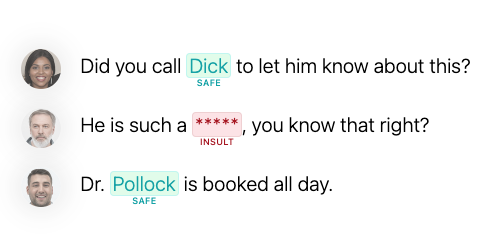

Toxic keyword lists and filters in 2024: the definitive guide

This is a complete guide to text moderation through keyword filtering and keyword lists in 2024. Learn about best practices, trends and use cases such as profanity and toxicity filtering, obfuscation, multiple languages and more.

Self-harm and mental health — Content Moderation

This is a guide to detecting, moderating and handling self-harm, self-injury and suicide-related topics in texts and images.

Personal & Identity Attacks — Content Moderation

This is a guide to detecting, moderating and handling insults, personal attacks and identity attacks in texts and images.

Harassment, bullying and intimidation — Content Moderation

This is a guide to detecting, moderating and handling harassment, bullying and intimidation in texts and images.

Online promotion, spam and fraud — Content Moderation

This is a guide to detecting, moderating and handling online promotion, spam and fraud in texts and images.

Sexual abuse and unsolicited sex — Content Moderation

This is a guide to detecting, moderating and handling sexual abuse and unsolicited sex in texts and images.

Illegal traffic and trade — Content Moderation

This is a guide to detecting, moderating and handling illegal traffic and trade in texts and images.

Unsafe organizations and individuals — Content Moderation

This is a guide to detecting, moderating and handling unsafe organizations and individuals.

Resources

An efficient and scalable solution to Content Moderation

See how Sightengine can help you analyze and filter images, videos, messages and more.

Introductory Material — Trust & Safety

1. Digital Services Act and Content Moderation
2. UK Online Safety Bill and Content Moderation
3. What are the different types of Content Moderation?
4. What is Content Moderation - The Introductory Guide
5. Do's and Don'ts of Content Moderation
6. What is Trust and Safety - The Introductory Guide
7. Developing Effective Content Moderation Guidelines

From our Blog

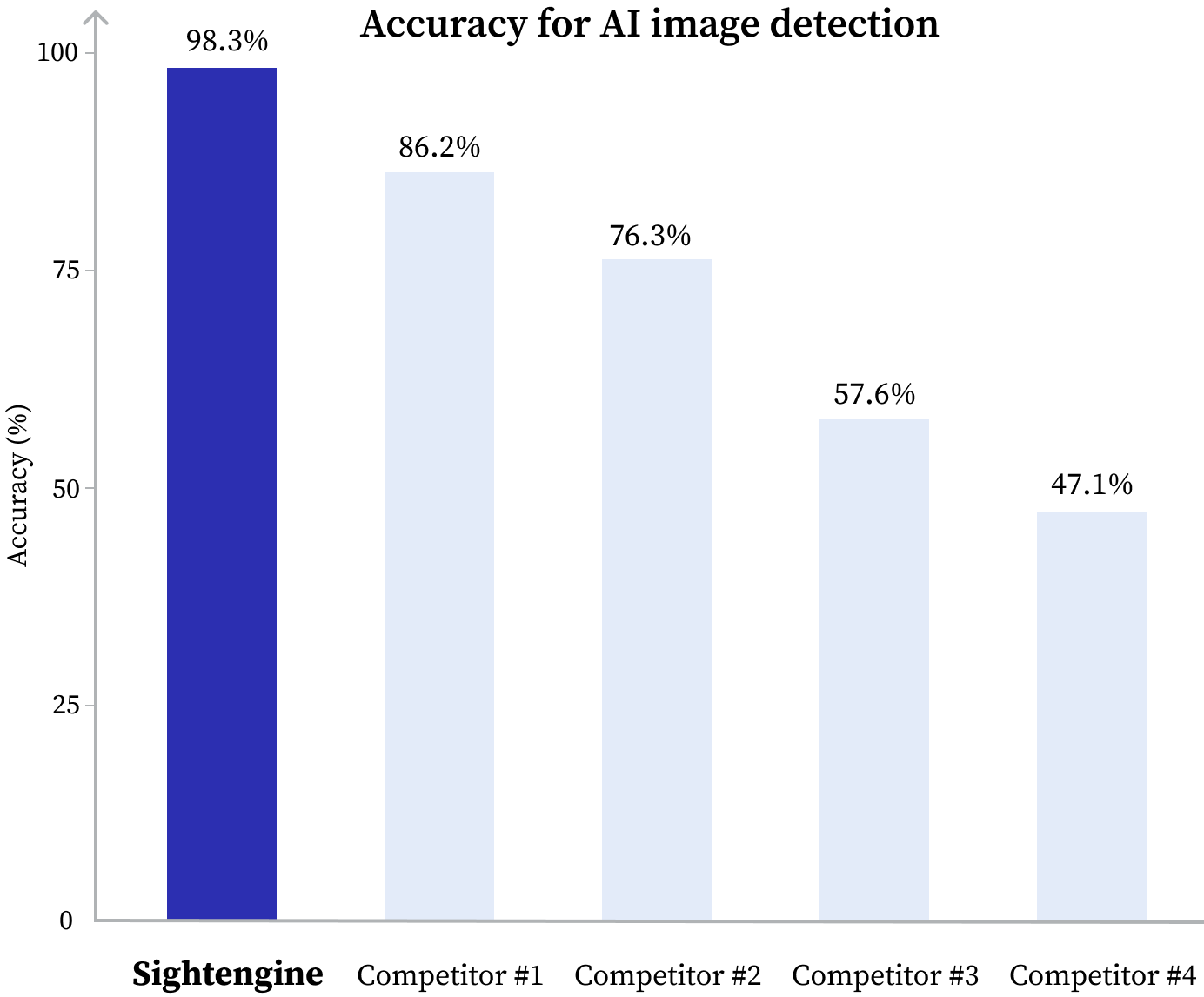

Sightengine Ranks #1 in AI-Media Detection Accuracy: Insights from an Independent Benchmark

Learn how Sightengine performed in an independent AI-media detection benchmark, outperforming competitors with advanced methodologies.

Joining the Online Dating & Discovery Association: Towards Safer Connections

This is a step to further enhance end-user safety in the online dating realm.

Towards a fine-grained and contextual approach to Nudity Moderation

Blanket bans on nudity, and specifically on bare breasts, are coming under increased scrutiny. We hear that they clash with cultural expectations and impede the right to expression of women, trans and nonbinary people.

See why the world's best companies are using Sightengine

Empower your business with powerful content analysis and filtering.