Self-harm and mental health — Content Moderation

Page contents

- Definitions: Self-harm in user content

- What's at stake

- How to detect self-harm

- What actions should be taken with self-harm content

Definitions: Self-harm in user content

Self-harm and mental-health related content encompasses a large variety of situations:

- suicide and self-injury

- anxiety and depression

- eating disorders such as anorexia

- sadness or loneliness

- panic attacks

- PTSD (Post-Traumatic Stress Disorder)

- body dysmorphic disorders

- etc.

Self-harm related content ranges from the most extreme cases, such as someone expressing an immediate intention to commit suicide, to more subtle ones, such as expressions of sadness or sorrow, or dissatisfaction with one's own body image.

Suicide is the 12th leading cause of death in the US and the second leading cause among people aged 10-34 (Curtin et al., 2022). Depression affects 3.8% of the world's population and is a leading cause of self-injury and self-harm.

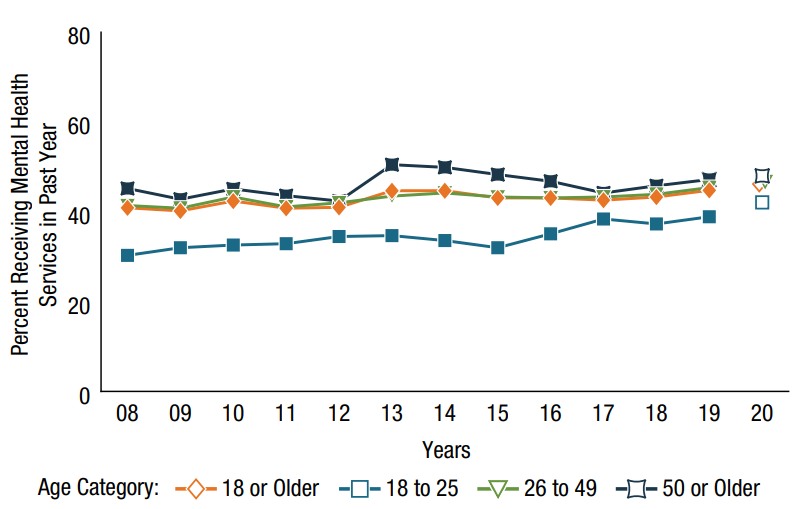

Mental Health Services Received in the Past Year: Among Adults Aged 18 or Older with Any Mental Illness in the Past Year; 2008-2020 (Substance Abuse and Mental Health Services Administration, 2021)

As a result, self-harm related content is prevalent and frequently encountered, especially among younger users. In 2021, Instagram took action on ~17 million pieces of suicide and self-injury content.

What's at stake

"In recent years, there has been increasing acknowledgement of the important role mental health plays in achieving global development goals."

World Health Organization

Mental health is an increasingly important topic, especially among younger users. Its importance is reflected on social media, in apps and in virtual worlds as users increasingly express, share and discuss their issues online.

In some countries like the UK, suicide promotion is illegal. Self-harm promotion might soon become illegal too. As such, messages promoting suicide, anorexia or other potentially dangerous behaviors should be moderated by platforms.

Should you consider any related content to be promotion? Should people in distress be censored or punished? Applications and platforms may choose to moderate and take actions on content expressing sorrow, grief, dissatisfaction, but there is no specific legal obligation to do so.

However, platforms hosting user-generated content have an ethical responsibility to act:

- This is a matter of life and death. Users searching or asking for suicide methods, and users expressing their intention to commit suicide, are in urgent need of help. "Young people are known to express their suicidal ideation on social networking sites, and occasionally use these media to find out about suicidal methods" (Rice et al., 2016). This is true even for content not directly related to suicide, as "people with severe mental health conditions die prematurely" (WHO).

- There is a viral component: self-harm can spread among users, especially among young people. Leaving self-harm content online can lead other users to be negatively influenced and to mimic such behaviors. Removing content quickly is the most effective remedy.

Beyond the legal and ethical needs to act, cases of promoted or even simply described suicide or self-harm will harm the experience and reputation of the platform or application.

How to detect self-harm

Detecting and removing self-harm-related content is a very difficult task, for multiple reasons:

- Messages often contain vague references and clues, and can be difficult to distinguish from sadness or sorrow.

- The severity of a given situation is hard to determine without knowing the context.

- Users discussing topics such as anorexia or anxiety are not necessarily affected by those.

- Users often try to evade filters, by using coded words or paraphrases.

That said, apps and platforms typically resort to four levels of detection measures:

- user reporting

- human moderators

- keyword-based filters

- ML models

User reporting

Platforms should consider the great help that other users can provide: they can report alarming messages to the trust and safety team so that these are reviewed by moderators and handled by the platform. This is a simple way to moderate text, since users only need a way to submit a report. The main disadvantage is the delay needed to properly handle a potentially large volume of reports.

To simplify the handling and prioritization of user reports, it is important that users have the option to categorize their reports, with categories such as self-harm or suicide.
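As a rough illustration of how report categories can drive prioritization, the sketch below keeps user reports in a priority queue so that the most urgent categories are reviewed first. The category names, their priority order and the `ReportQueue` class are assumptions made for this example, not part of any specific platform's tooling.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Hypothetical categories and priorities (lower number = reviewed first).
CATEGORY_PRIORITY = {
    "suicide": 0,
    "self-harm": 1,
    "eating-disorder": 2,
    "other": 3,
}


@dataclass(order=True)
class Report:
    priority: int
    sequence: int  # submission order, used to break ties within a category
    message_id: str = field(compare=False)
    category: str = field(compare=False)


class ReportQueue:
    """Queue of user reports, ordered by category priority, then arrival."""

    def __init__(self):
        self._heap = []
        self._counter = count()

    def submit(self, message_id, category):
        # Unknown categories fall back to the lowest-priority bucket.
        if category not in CATEGORY_PRIORITY:
            category = "other"
        report = Report(
            CATEGORY_PRIORITY[category], next(self._counter), message_id, category
        )
        heapq.heappush(self._heap, report)
        return report

    def next_report(self):
        # Return the most urgent pending report, or None if the queue is empty.
        return heapq.heappop(self._heap) if self._heap else None
```

With this ordering, a report categorized as suicide is handed to a moderator before older reports in less urgent categories, which limits the impact of the review delay mentioned above.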

Human moderators

Human moderators are useful for detecting potential self-harm or suicide, even if, according to Twitter, "judging behavior based on online posts alone is challenging". This means that moderators (and even users) should be provided with clear guidelines: what are the warning signs, what constitutes suicidal or dangerous thoughts, etc. For instance, someone describing self-harm or suicide without identifying as suicidal should probably be treated differently from a person who identifies as suicidal, has already attempted suicide, or frequently posts messages about self-harm, depression or suicide.

In addition to the challenges inherent to human moderation, such as slower speed and lower consistency, there are a few challenges specific to self-harm that need to be taken into account:

- Being exposed to harmful messages may affect moderators' own mental health and well-being.

- Many terms or expressions used to refer to self-harm are not widely known and might be missed by untrained moderators.

Keyword-based filters

Keywords can be used to pre-filter comments and messages by detecting words related to mental health, such as:

- suicide and self-injury: suicide, kill myself, kms, hurt myself, cutting tw, sewerslide, etc.

- anxiety and depression: depressed, hate myself, mentally tired, nobody cares, etc.

- eating disorders: anorexia, bulimia, pro ana, ana tips, etc.

- etc.

Keywords such as these will necessarily produce many false positives, meaning messages that contain those words without being problematic. But they are still helpful, as human moderators can then focus on a shorter list of messages to verify, leading to faster response times.
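A minimal sketch of such a pre-filter is shown below. It uses the example keywords listed above, matched case-insensitively on word boundaries. The `flag_message` function and the structure of the keyword table are assumptions made for illustration; a real keyword list would be much longer and regularly updated.

```python
import re

# Example keywords from the list above; a production list would be far longer.
KEYWORDS = {
    "suicide and self-injury": [
        "suicide", "kill myself", "kms", "hurt myself", "cutting tw", "sewerslide",
    ],
    "anxiety and depression": [
        "depressed", "hate myself", "mentally tired", "nobody cares",
    ],
    "eating disorders": ["anorexia", "bulimia", "pro ana", "ana tips"],
}


def _compile(terms):
    # Word boundaries prevent short terms such as "kms" from matching
    # inside longer, unrelated words.
    alternatives = "|".join(
        re.escape(term) for term in sorted(terms, key=len, reverse=True)
    )
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


PATTERNS = {category: _compile(terms) for category, terms in KEYWORDS.items()}


def flag_message(text):
    """Return {category: [matched keywords]} for every category that matches."""
    hits = {}
    for category, pattern in PATTERNS.items():
        found = sorted({match.lower() for match in pattern.findall(text)})
        if found:
            hits[category] = found
    return hits
```

A message such as "My essay is about suicide prevention" is flagged by this filter even though it is not worrying, which illustrates the false positives described above. Flagged messages are therefore best routed to human review rather than acted on automatically.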

Of course, there is a risk of missing cases when relying on keywords alone, since many worrying messages do not contain any problematic keywords.

ML models

ML models can complement keywords and human moderators by detecting such cases, with the help of annotated datasets containing self-harm or suicide-related content.

Automated moderation can also be used to moderate images and videos submitted by users, by detecting depictions of self-harm, self-injury, violence or weapons.

What actions should be taken with self-harm content

Some solutions provide help to platforms and applications hosting user-generated content. For example, kokocares is a free initiative whose goal is to make mental health accessible to everyone. They created a Suicide Prevention Kit that provides keywords to detect risky situations in real time, support resources for users and dashboards and statistics to measure outcomes.

In any case, detected suspicious messages should be appropriately treated. When detecting risky situations, platforms should do a triage to handle each of them according to their priority.

High-risk messages

Comments that suggest an imminent act or serious violence, like "I'm going to cut myself tonight" or "I'm so depressed I want to die", are considered high-risk messages.

Because they are urgent, platforms should set up a clearly defined process for immediate escalation that could include steps like:

- Reach out to the user to make sure they are OK.

- Remind the user of the phone numbers of helplines or contact information of associations supporting mental health, like Reddit does.

- Directly report the case to dedicated hotlines, so they can contact the user.

- Redirect the user to a specialist like a doctor or a psychologist: for example, Reddit has partnered with Crisis Text Line "to provide redditors who may be considering suicide or seriously hurting themselves with support from trained Crisis Counselors". Other solutions, such as ThroughLine, can be integrated into platforms to provide lists of verified helplines around the world.

- Directly involve a specialist such as a psychologist to make contact with the user.

- Decide whether to remove the risky comment from the platform or to keep it: platforms targeting minors should most likely remove such content. In any case, illegal content (such as promoting suicide) should be removed.

- If the content is not removed, the platform should let users decide whether or not they wish to read it by displaying a warning screen. Facebook indicates that warning or sensitivity screens could also be used for content about recovery from suicide or self-harm, which should be allowed but could still be a triggering subject.
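The last two steps above can be sketched as a small decision function. This is only an illustration: the `Visibility` enum and the `decide_visibility` function are hypothetical names, and the rule that a platform targeting minors always removes high-risk content is an assumption, since each platform sets its own policy.

```python
from enum import Enum


class Visibility(Enum):
    REMOVE = "remove"
    WARNING_SCREEN = "warning_screen"


def decide_visibility(is_illegal: bool, platform_targets_minors: bool) -> Visibility:
    """Decide how a high-risk message should be shown after escalation."""
    # Illegal content (such as promotion of suicide) is removed in all cases.
    if is_illegal:
        return Visibility.REMOVE
    # Assumption: a platform targeting minors removes rather than screens.
    if platform_targets_minors:
        return Visibility.REMOVE
    # Otherwise the content stays online behind a warning screen.
    return Visibility.WARNING_SCREEN
```

This decision only covers the visibility of the content. The support steps, such as reaching out to the user or sharing helpline numbers, apply regardless of its outcome.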

Low or medium-risk messages

These messages are less urgent or less obvious, such as "Sometimes I just feel so lonely" or "I guess hurting myself is not a solution". For example, a user who once mentioned being anxious is not necessarily at risk of self-harm or suicide, but if the same user talks about their anxiety every day, the risk is greater.

Platforms could decide to take some time to better understand the context and see if the user posts more similar comments.

Setting up a moderation log to allow moderators to report the incidents encountered for a specific user is a way to track possible at-risk users.

The process defined for high-risk situations could then be used for users that are in fact at risk.
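One possible shape for such a moderation log is sketched below: incidents are stored per user, and a user is flagged for the high-risk process once several incidents fall within a rolling time window. The `ModerationLog` class, the 7-day window and the threshold of 3 incidents are assumptions chosen for the example, and incidents are assumed to be recorded in chronological order.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class ModerationLog:
    """Per-user incident log with a simple repeated-incident escalation rule."""

    def __init__(self, window=timedelta(days=7), threshold=3):
        self.window = window        # hypothetical look-back period
        self.threshold = threshold  # hypothetical number of incidents to escalate
        self._incidents = defaultdict(deque)

    def record(self, user_id, when, note=""):
        """Log an incident; return True if the user should now be escalated."""
        incidents = self._incidents[user_id]
        incidents.append((when, note))
        # Drop incidents that fall outside the look-back window.
        cutoff = when - self.window
        while incidents and incidents[0][0] < cutoff:
            incidents.popleft()
        return len(incidents) >= self.threshold

    def history(self, user_id):
        # Incidents still inside the window, oldest first.
        return list(self._incidents[user_id])


log = ModerationLog()
start = datetime(2024, 1, 1)
for day in range(3):
    escalate = log.record("user-42", start + timedelta(days=day), "mentions anxiety")
# After three incidents in three days, escalate is True for this user.
```

A counter like this is only a signal for moderators to look more closely at a user's context. It does not replace their judgment about whether the user is actually at risk.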

Mental health is a growing concern, both in real life and online. It can be difficult to detect risky messages related to self-harm, suicide, anxiety, depression or eating disorders, especially as context is so important. However, it is essential to be able to detect and handle these situations quickly, even at less urgent stages, as the results of early intervention have been proven to be very promising.
