Harassment, Bullying and Intimidation — Content Moderation

Page contents

- Definitions: Harassment, bullying and intimidation in user content

- What's at stake

- How to detect harassment, bullying and intimidation

- What actions should be taken with harassment, bullying and intimidation

Definitions: Harassment, bullying and intimidation in user content

Cyberbullying has many definitions. This section focuses on the common definitions of harassment, bullying and intimidation.

Harassment is a behavior that aims to humiliate or embarrass a person. It includes cases of bullying, where a person uses threats and humiliation repeatedly to abuse and intimidate another person.

Verbal and social bullying are common types of cyberbullying. They include teasing, name-calling, inappropriate comments and threats, as well as social exclusion, rumor spreading and embarrassing comments.

Harassment also includes sexual harassment.

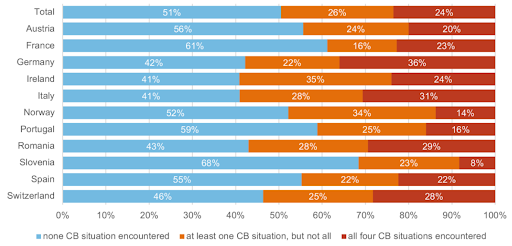

Bullying and intimidation are common behaviors online, especially among young people. According to a report from the Joint Research Centre (JRC) of the European Commission, 49% of the 6,195 children (aged 10 to 18) surveyed in 2020 had already experienced cyberbullying (see figure below).

Percentage of children who have been victims of cyberbullying situations ("Nasty or hurtful messages were sent to me", "Nasty or hurtful messages about me were passed around or posted where others could see", "I was left out or excluded from a group or activity on the internet", "I was threatened on the internet"), by country


What's at stake

Several countries, such as the UK and Canada, some US states, and some EU countries such as Poland, have drafted new laws or rely on existing ones to fight cyberbullying and intimidation.

Two examples are the UK's Malicious Communications Act 1988 and Communications Act 2003, which make it a criminal offense to send messages that are indecent, grossly offensive or threatening.

Bullying can have serious consequences for victims. It can lead to "physical injury, social and emotional distress, self-harm, and even death", as well as "depression, anxiety, sleep difficulties, lower academic achievement, and dropping out of school", according to the Centers for Disease Control.

Cyberbullying is the online equivalent of bullying and should therefore be considered a major issue for platforms and applications.

Major social media platforms do not tolerate these behaviors and enforce strict policies against them. For example, Facebook reported that it took action on 8.2 million pieces of content containing bullying or harassment between April and June 2022.

How to detect harassment, bullying and intimidation

Generally speaking, situations of bullying can be detected the same way insults and hate speech are. The only difference is the repetitive aspect of attacks.

Online harassment and bullying rely strongly on context. It can be very difficult, sometimes even for human moderators, to tell an abusive comment from a simple joke.

There are several ways to detect this kind of behavior, although detection can be difficult because the behavior is often not explicit:

- user reporting

- human moderators

- keyword-based filters

- ML models

User reporting

Platforms should rely on reports coming from their users. Users can report cases of bullying and harassment to the trust and safety team so that moderators can review them and the platform can handle them. This is a simple way to do moderation: all the user needs is a way to submit a report. The main disadvantage of user reports is the delay before the content is reported and handled by the platform.

Human moderators

Human moderators can also help to detect such content, especially because they can easily see if a user has been receiving repeated insults or threats and therefore determine if the situation actually matches the definition of harassment and bullying.

However, human moderation has some common issues, such as a lack of speed and consistency. To overcome this, platforms could set up tools that make profile review easier, such as a moderation log that helps determine whether abusive comments directed at a specific user have been repeated several times.
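A moderation log of this kind can be sketched as a small in-memory structure. This is only an illustration under stated assumptions: the `Incident` fields, the `ModerationLog` class, the 7-day window and the threshold of 3 are all hypothetical choices, not values taken from any particular platform.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Incident:
    """One flagged message; all field names are hypothetical."""
    author_id: str
    target_id: str
    timestamp: datetime


class ModerationLog:
    """In-memory log of flagged messages, grouped by the targeted user."""

    def __init__(self) -> None:
        self._by_target = defaultdict(list)

    def record(self, incident: Incident) -> None:
        self._by_target[incident.target_id].append(incident)

    def is_repeated(
        self,
        target_id: str,
        now: datetime,
        window: timedelta = timedelta(days=7),  # illustrative default
        threshold: int = 3,                     # illustrative default
    ) -> bool:
        """True when the target received at least `threshold` flagged
        messages during the `window` that ends at `now`."""
        recent = [
            incident
            for incident in self._by_target.get(target_id, [])
            if timedelta(0) <= now - incident.timestamp <= window
        ]
        return len(recent) >= threshold
```

A moderator-facing tool could call `is_repeated` when a new flagged message arrives, to surface cases that look like a pattern and not a one-time insult. A production system would need persistent storage and would probably also group incidents by author.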

Keyword-based filters

Keywords can help filter the data by detecting words that could be associated with bullying and harassment, so that human moderators only need to verify a smaller sample. On their own, however, keywords cannot distinguish a one-time insult from a bullying situation where insults and offensive comments are repeated: words like idiot or dumb could appear in either case.

Keyword-based filters also struggle to detect situations where no specific word is used to bully or intimidate someone.
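As a rough sketch of such a pre-filter, the snippet below matches whole words against a small list. The word list only reuses the two examples mentioned above, and the function names are hypothetical; a real list would be much larger and maintained by the trust and safety team.

```python
import re

# Placeholder list built from the examples in the text (assumption).
FLAGGED_WORDS = {"idiot", "dumb"}

# Lowercase words, allowing apostrophes, so "dumbbell" does not match "dumb".
_WORD_RE = re.compile(r"[a-z']+")


def flagged_terms(message: str) -> set:
    """Return the flagged words that appear as whole words in `message`."""
    tokens = set(_WORD_RE.findall(message.lower()))
    return tokens & FLAGGED_WORDS


def needs_human_review(message: str) -> bool:
    """Route a message to moderators when any flagged word is present."""
    return bool(flagged_terms(message))
```

The filter illustrates both limitations described above: `needs_human_review("Nobody wants you here")` is false because no listed word appears, and a single match says nothing about whether the insult is repeated.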

ML models

ML models may complement keyword filters and human moderators by detecting cases where harassment is expressed in a more ambiguous way. Such models are trained on annotated datasets containing harassment and cyberbullying cases. The data should contain conversations where it is clear that the bullying behavior is repeated.

Automated moderation can also be applied to images and videos submitted by users, to detect hateful or insulting symbols that could be used to harass a user.

What actions should be taken with harassment, bullying and intimidation

As soon as the unsafe content is found and verified, the platform must handle the issue depending on its intensity and severity.

High-priority messages

Most existing platforms and applications use one of these actions when encountering harassment or cyberbullying:

- Removing the abusive or offensive content from the platform

- Suspending the account of the user who is the author of the abusive or offensive comment

- Involving local authorities

These solutions depend on the severity of the case. Extreme cases, for example bullying that led to a suicide, should be reported to the authorities and handled from a legal perspective.

To help decide which solution is appropriate, and to check whether the case is actually bullying, it would probably be useful to set up a moderation log that keeps the history of incidents involving a given user.

This moderation log could also be used to get in touch with victims and make sure they are safe, for example by providing them with helpline phone numbers or contact information for associations supporting victims of bullying.

Medium and low-priority messages

When encountering messages that could be considered the first signs of harassment, such as borderline jokes, platforms could use:

- Warning and education: the platform may issue a warning and educate its users on the platform's community guidelines to ensure that they won't continue to violate any policies and mitigate any risk that may further jeopardize the victim.

- Algorithmic demotion: the platform can use algorithms to demote users who have a history of sharing or publishing ambiguous content that could be associated with harassment, bullying or intimidation, and therefore reduce their visibility on the platform.
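The mapping from severity to action described in this section could be sketched as a small decision function. The severity labels, the `Action` names and the incident thresholds below are assumptions made for illustration; each platform would define its own policy.

```python
from enum import Enum


class Action(Enum):
    WARN_AND_EDUCATE = "warn_and_educate"
    DEMOTE = "demote"
    REMOVE_CONTENT = "remove_content"
    SUSPEND_ACCOUNT = "suspend_account"
    INVOLVE_AUTHORITIES = "involve_authorities"


# Hypothetical severity labels, e.g. assigned by a moderator after review.
SEVERITIES = ("low", "medium", "high", "extreme")


def choose_actions(severity: str, prior_incidents: int) -> list:
    """Pick actions from the severity of a verified case and the number of
    earlier incidents by the same author (illustrative policy only)."""
    if severity not in SEVERITIES:
        raise ValueError(f"unknown severity: {severity!r}")
    if severity == "extreme":
        return [
            Action.REMOVE_CONTENT,
            Action.SUSPEND_ACCOUNT,
            Action.INVOLVE_AUTHORITIES,
        ]
    if severity == "high":
        actions = [Action.REMOVE_CONTENT]
        if prior_incidents > 0:  # repeat offender: also suspend
            actions.append(Action.SUSPEND_ACCOUNT)
        return actions
    # Low and medium priority: start with a warning, demote on repetition.
    actions = [Action.WARN_AND_EDUCATE]
    if prior_incidents >= 2:
        actions.append(Action.DEMOTE)
    return actions
```

The `prior_incidents` count would typically come from the moderation log, so that the same message is treated differently depending on the author's history.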

Platforms and applications should more generally encourage their users to behave in a positive way and to respect other users belonging to the community.
