Sexual abuse and unsolicited sex — Content Moderation

Page contents

- Definitions: Sexual abuse in user content

- What's at stake

- How to detect sexual abuse

- What actions should be taken with sexually abusive contents

Definitions: Sexual abuse in user content

Sexual content includes both legal sex and sexual abuse. Sexual abuse is characterized by its non-consensual or illegal nature, because it involves exploitation, violence or trafficking.

The different types of sexual abuse between adults are:

- rape and sexual assault: content including threats or descriptions of rape or sexual assault

- sexual harassment: repeated sexually explicit comments that the receiver can perceive as a threat

- unsolicited sexual advances: sexually explicit comments that were not consented to by the receiver

- sextortion: the practice of extorting money or sexual favors from someone by threatening to reveal evidence of their sexual activity

- sex solicitation: offering something such as money, property, an object or a token in exchange for a sexual act

- revenge porn: sharing of private, sexual materials, either photos or videos, of another person without their consent and with the purpose of causing embarrassment or distress

- prostitution: comments that aim to engage in a sexual relationship in exchange for money

- zoophilia: comments expressing a sexual attraction of a human toward a nonhuman animal

Sexual abuse also refers to Child Sexual Exploitation (CSE) content including:

- Child Sexual Abuse Material (CSAM): illegal material depicting the sexual abuse of children

- Child Sexual Exploitation Material (CSEM): legal content including children in a pose that could be seen as sexualized

- grooming: a practice in which an adult makes friends with a minor with the intent of committing sexual abuse against that minor

Note that some categories, such as sextortion, can also apply to minors.

What's at stake

The first step is to decide whether you want to distinguish between abusive or illegal sexual content and legal sexual content.

Decisions are different and nuanced depending on the platform. For example:

- Twitter distinguishes between consensually produced and non-consensually produced adult content: the former is allowed whereas the latter is not.

- Meta, on the other hand, is stricter: no sexual content is allowed, as the platform says that its community may be sensitive to this type of content. According to Meta, it is safer to remove all types of sexual content to avoid missing non-consensual content.

When it comes to sexual abuse, platforms have a legal and ethical responsibility to detect it, as it mainly refers to activities and behaviors that are illegal in the UK, the US, the EU and most of the world.

Sexual content moderation depends on your platform, but one thing is certain: if your platform or application hosts minors, you must be very careful with this content, as they should not be able to see it. Minors should also be protected from CSE. Failure to do so can have serious legal consequences. There are more and more initiatives to help make the internet safer for children:

- To make it easier for minors or young adults to remove exploitative and sexualized content from participating platforms, the National Center for Missing and Exploited Children (NCMEC) has launched a tool called Take It Down. The tool lets minors report photographs and videos of themselves. A child's parents or other responsible adults may also file a report on their behalf. Adults who appeared in such content while under the age of 18 can also use the site to report it and have it removed.

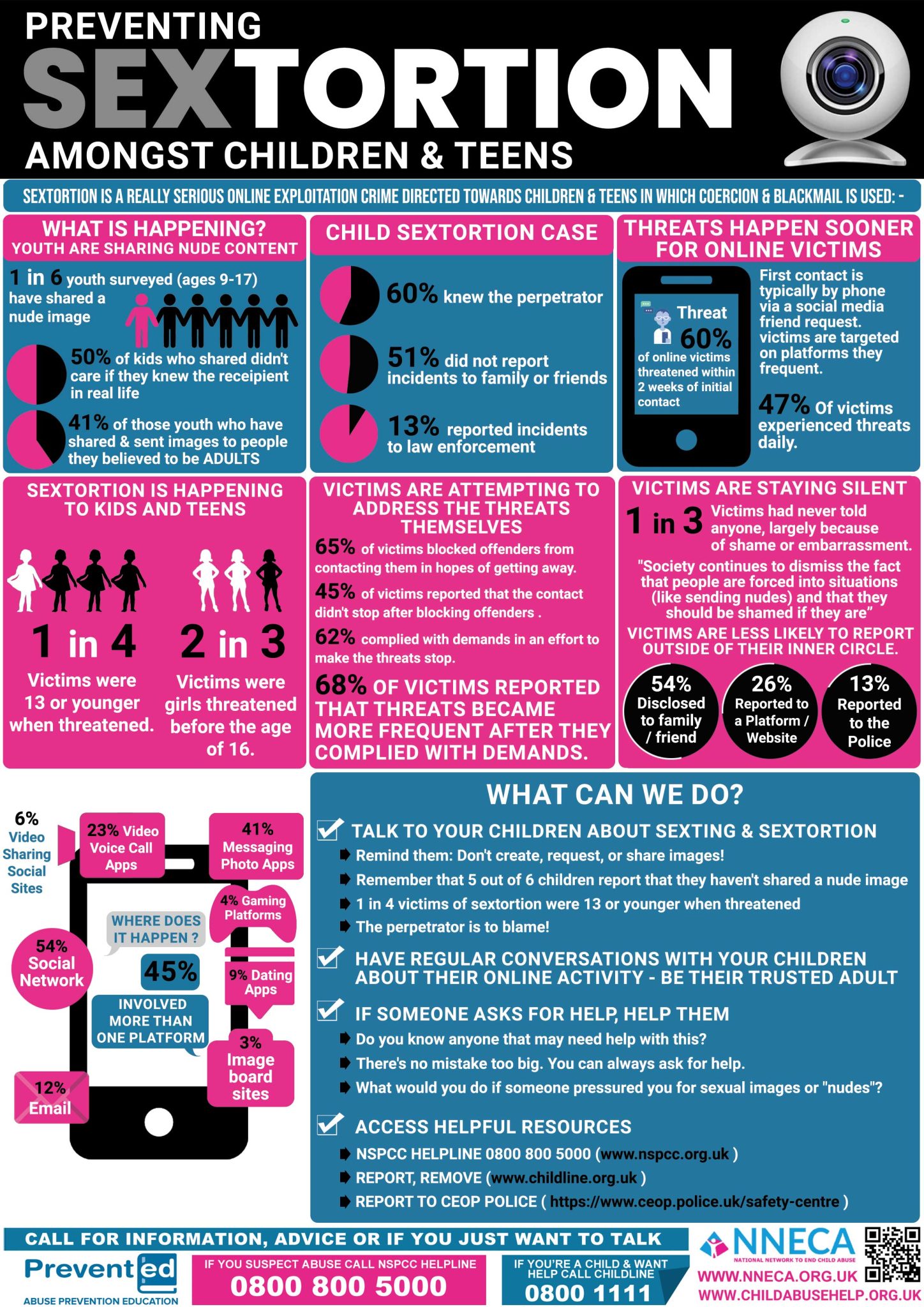

- Organizations such as the National Network to End Child Abuse (NNECA) provide very informative resources to help identify CSE and sextortion among children and teens, as shown in the image below.

Resource from NNECA to prevent sextortion among children and teens

How to detect sexual abuse

The main challenge in detecting sexual abuse and unsolicited sex is differentiating it from legal and consensual adult content.

When it comes to protecting minors, image-identification and content-filtering technologies based on hashes such as MD5, SHA-1, PhotoDNA or PDQ are widely used to detect CSAM by matching content against databases of known images.
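The matching step can be sketched as follows. This is a minimal illustration, assuming a hypothetical in-memory set of known digests (`KNOWN_SHA1_DIGESTS`) and hypothetical helper names; in practice such lists come from vetted organizations. Note that cryptographic hashes such as MD5 and SHA-1 only match byte-identical files, whereas perceptual hashes such as PhotoDNA and PDQ are designed to tolerate small modifications and are not shown here.

```python
import hashlib

# Hypothetical list of hex digests of known files. The single entry below is
# the SHA-1 of a harmless placeholder byte string, used only for illustration.
KNOWN_SHA1_DIGESTS = {hashlib.sha1(b"example-known-file").hexdigest()}


def sha1_of_bytes(data: bytes) -> str:
    """Return the SHA-1 hex digest of an uploaded file's raw bytes."""
    return hashlib.sha1(data).hexdigest()


def matches_known_hash(data: bytes, known=KNOWN_SHA1_DIGESTS) -> bool:
    """True only if the upload is byte-identical to a file on the list."""
    return sha1_of_bytes(data) in known
```

Because a single changed byte produces a different digest, an exact-match check like this one is best seen as a first layer that complements perceptual hashing and the other detection measures.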

That said, apps and platforms typically resort to four levels of detection measures:

- user reporting

- human moderators

- keyword-based filters

- ML models

User reporting

Platforms should rely on the reports coming from their users. Users can often tell the difference between what is legal and what is abuse, and can report abuse to the trust and safety team so that it is reviewed by moderators, handled by the platform and / or reported to the authorities. It is a simple way to do moderation: the user just needs a way to submit the report. The main disadvantage of user reports is the delay before the content is reported and handled by the platform.

To simplify the handling of user reports and distinguish between the types of content being reported, it is important that users have the option to categorize their reports, with categories such as legal, abuse or CSE for instance.
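Categorized reports can then be ordered for review. The sketch below is one possible way to do this; the category names follow the examples above, while the `Report` type, the `PRIORITY` ordering and the `triage` function are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class ReportCategory(Enum):
    LEGAL = "legal"
    ABUSE = "abuse"
    CSE = "cse"


# Assumed ordering: a lower number is reviewed first.
PRIORITY = {
    ReportCategory.CSE: 0,
    ReportCategory.ABUSE: 1,
    ReportCategory.LEGAL: 2,
}


@dataclass
class Report:
    content_id: str
    category: ReportCategory


def triage(reports):
    """Sort reports so the most severe categories reach moderators first.

    sorted() is stable, so reports in the same category keep arrival order.
    """
    return sorted(reports, key=lambda r: PRIORITY[r.category])
```

For example, `triage([Report("a", ReportCategory.LEGAL), Report("b", ReportCategory.CSE)])` places report `b` first.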

Human moderators

Human moderators can also help to detect content related to sexual abuse, as they are experts in telling apart legal and illegal sexual content.

However, human moderation has some common issues, such as a lack of speed and consistency. To assist human moderators, it is important to have well-defined guidelines and examples, as well as resources documenting grey-area cases already encountered. Platforms should also take into consideration the well-being of moderators, especially those working with CSE content.

Keyword-based filters

Keywords are a good way to filter the data: detecting all the words related to legal and illegal sex yields a smaller sample that human moderators can verify. However, keywords are not very effective at distinguishing the two types of content:

- Words like dick, erection, wet, sex, penetrate, woody, back door, etc. can be used to refer to sex in a legal context but also in an illegal and abusive context.

- Some of these words have several meanings, like wet, penetrate, woody or back door, and are sometimes used in a safe context.

- Some words can be used to detect sexual abuse but they are rare and limited. For example, words like rape and forced could be used to detect potentially abusive content related to rape and sexual assault.

- Most examples of illegal sexual content would probably be missed by a keyword-only approach. This includes sentences that contain no explicit words but could be dangerous, especially if said to minors.
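As a rough illustration of the keyword pre-filter described in this section, the sketch below routes messages to human review instead of deciding on its own. The two term lists reuse a few words from the bullets above; the list split, the label names and the `prefilter` function are assumptions, and a real list would be much longer and maintained over time.

```python
import re

# Terms that rarely appear in safe contexts (assumed split, for illustration).
HIGH_RISK_TERMS = {"rape", "forced"}
# Terms with several meanings that often appear in safe contexts.
AMBIGUOUS_TERMS = {"wet", "penetrate", "woody", "back door", "sex"}


def _compile(terms):
    # Longest terms first so multi-word entries are tried before shorter ones.
    alternatives = "|".join(
        re.escape(t) for t in sorted(terms, key=len, reverse=True)
    )
    # \b keeps "sex" from matching inside longer words such as "Sussex".
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


HIGH_RISK_RE = _compile(HIGH_RISK_TERMS)
AMBIGUOUS_RE = _compile(AMBIGUOUS_TERMS)


def prefilter(message: str) -> str:
    """Return a routing label; the final decision stays with a moderator."""
    if HIGH_RISK_RE.search(message):
        return "review_high_priority"
    if AMBIGUOUS_RE.search(message):
        return "review"
    return "no_match"
```

A harmless sentence such as "The paint is still wet" is still routed to review, which shows why keyword matches alone cannot separate safe, legal and abusive content, and a message with no listed word returns `no_match` even if it is harmful.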

ML models

ML models may complement keywords and human moderators by detecting sexual content with the help of annotated datasets containing different types of sexual content, abusive or not. It is very important to remember that collecting CSAM to train your models is illegal.

Automated moderation can also be used to moderate images and videos submitted by users, by detecting depictions of any type of nudity (nudity in a sexual context as well as people in underwear, for instance) or of minors.

What actions should be taken with sexually abusive contents

Sexual content related to adults

Platforms and applications can apply more or less severe sanctions depending on the case. Examples include removing the sexual comment, suspending the user's account temporarily or permanently, or reporting the user to the legal authorities.

Several criteria should be taken into account when deciding on the sanction:

- Is the sexual content consensual or non-consensual?

- If the content contains a consensual sexual message, the sanction should probably be less severe than if the message is non-consensual (for example, revenge porn should probably be more severely punished than common pornography)

- Is it a known behavior from the user (in the case of unsolicited sexual advances for example)?

- A moderation log lets moderators record any incidents encountered for a specific user, so that users who have previously published sexual messages on the platform can easily be identified.

- Is the content clearly sexual?

- If the content is ambiguous (for example if it has two meanings), it is more complicated to penalize a user. However, it is advisable to err on the side of caution and ask for a second opinion.
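The three criteria above can be combined into a simple decision aid. This is only a sketch under stated assumptions: it assumes a platform that does not allow sexual content at all, and the `ModerationLog` class, the `suggest_action` function, the action labels and the escalation rules are all hypothetical. Any decision to report a user to the authorities would sit outside this logic.

```python
from collections import defaultdict


class ModerationLog:
    """Hypothetical per-user incident log kept by moderators."""

    def __init__(self):
        self._incidents = defaultdict(list)

    def record(self, user_id: str, note: str) -> None:
        self._incidents[user_id].append(note)

    def prior_incidents(self, user_id: str) -> int:
        return len(self._incidents.get(user_id, []))


def suggest_action(consensual: bool, clearly_sexual: bool, prior: int) -> str:
    """Suggest a sanction; a moderator makes the final call."""
    if not clearly_sexual:
        # Ambiguous content: err on the side of caution, ask a second reviewer.
        return "second_opinion"
    if not consensual:
        # Non-consensual content is treated more severely.
        return "permanent_suspension" if prior > 0 else "remove_and_suspend"
    return "temporary_suspension" if prior > 0 else "remove_content"
```

A typical use would be to call `log.prior_incidents(user_id)` first and pass the result as `prior`, so that repeated behavior leads to a stronger suggested sanction.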

Sexual content and CSE

Sexual content involving minors is illegal in the US, UK, EU and in most regions of the world. It should therefore lead to serious consequences.

If you encounter content related to CSE on your platform, especially CSAM, grooming or sextortion directed at minors, you should report the case to the local authorities and to specialized NGOs such as:

- National Center for Missing and Exploited Children (NCMEC)

- Internet Watch Foundation (IWF)

- INHOPE

- UK Safer Internet Centre

Proactive detection and prevention of sexual abuse should be one of your priorities when building your platform and its community guidelines, especially if your platform hosts minors, who are more vulnerable. Remember that this type of behavior may have a serious negative impact on the lives of affected users.
