Toxic keyword lists and filters in 2026, the definitive guide

Keyword lists are as old as the Internet, right? But do they work?

Wait. I hear it’s common practice. How are they used, and will ML models replace them? Let’s see what those keyword lists mean in practice.

Page contents

- Text moderation challenges in 2026

- Keyword lists: what you need to know

- Limitations of keyword lists

- Language models to the rescue

- Using keyword lists and making them work in 2026

Text moderation challenges in 2026

In 2022, about 4.7 billion people were active on social networks. That's ~59% of the world population! 🌍 This represents an 8% increase over 2021 and is expected to continue increasing over time, along with the number of internet users. 📈

More users means more user-generated content, and in turn more problematic messages that need to be detected and moderated, especially as new types of toxicity such as harassment or self-harm have been expanding over the years.

Moderating each piece of user-generated content is time consuming for human moderators. Human moderation is also not always consistent as guidelines are not always clear or followed. Finally, the well-being of moderators is at risk because of the amount of disturbing content they read.

Automated text moderation with the use of NLP (Natural Language Processing) is one of the solutions available to address these issues. The main goal of NLP is to use computational techniques to be able to understand and reproduce human language. One of the difficulties that is also encountered in text moderation is to understand the intent behind the text. 🔍

Keyword lists: what you need to know

The oldest and most straightforward way to do automated text moderation is to use lists of keywords in a particular language. These lexicons contain keywords that are related to toxic, offensive, sexual or otherwise problematic content.

There are plenty of lists available online (⚠ but beware of their quality).

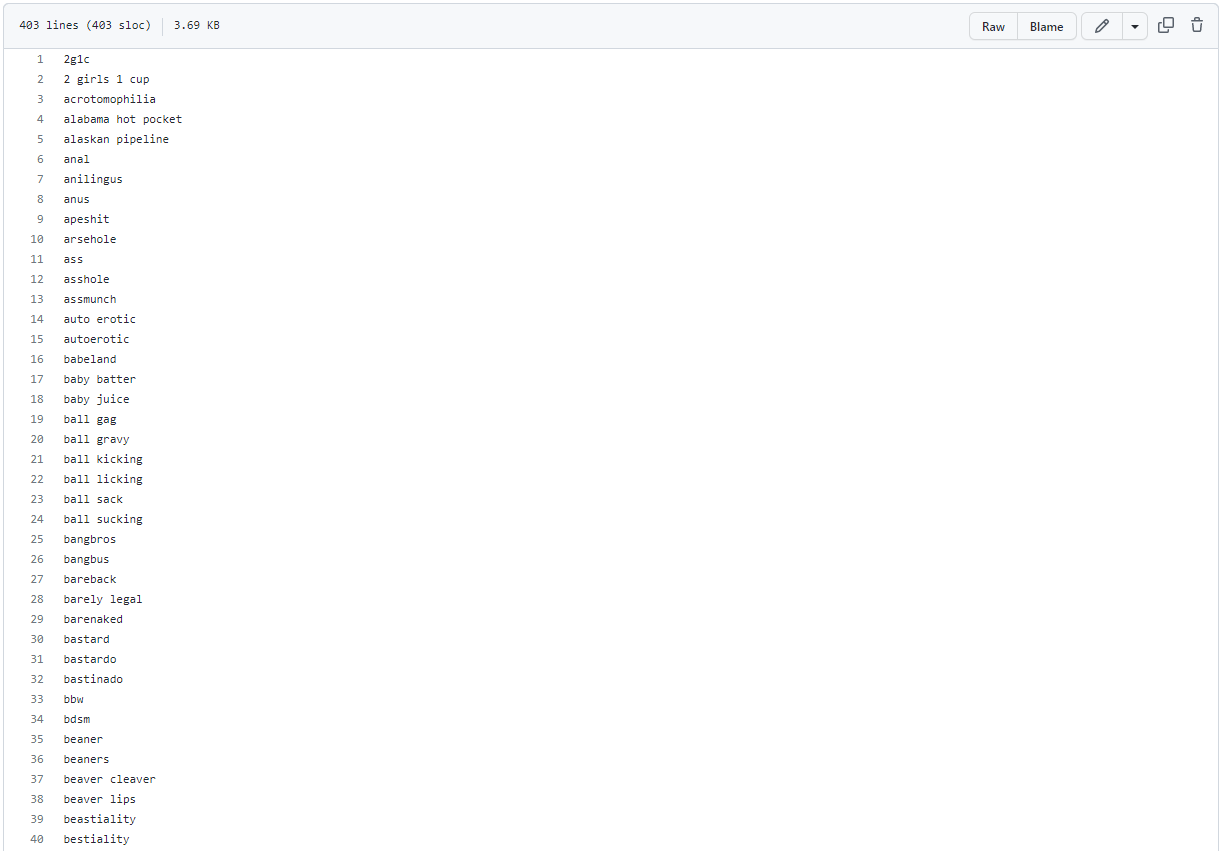

The open-source list of English 🇬🇧 🇺🇸 keywords below (available on GitHub) is the one used by Shutterstock to filter results from their autocomplete server and image recommendation engine. The list is short (403 items) and mainly contains sex-related words. It does not cover every problematic subject. For example, there are almost no words related to hate speech. Also, some entries like barely legal are words that we wouldn't necessarily want to flag.

Extract of the list of keywords from Shutterstock

Extract of the list of keywords from Shutterstock

How keyword lists can be used

Keyword lists are used to flag texts that are potentially toxic. The simplest way to use keyword lists would be to do something like this when a user posts a text (message, review, comment...):

- Determine the language of the text (not always easy, especially with short messages or mixed-language texts)

- Apply the keyword list of the corresponding language(s) (more on this later)

- Flag the text for further review/action if a keyword is detected

Pros of working with keyword lists

Keyword lists are quite straightforward to start working with and to set up. Compared to other options such as human reviewers or Machine Learning, they have a few advantages:

- Easier to set up than other solutions. Naive lookup or regex-based implementations can be up and running very quickly (but beware, having an efficient detection tool is actually very hard, more on this later)

- Efficient when targeting specific topics, languages or communities. For instance trying to detect mentions of competitors is easier to set up through a dedicated list than with ML models or human moderators

- Simple to iterate with. Adding/removing terms is very fast

That said, there are multiple limitations that you need to have in mind when working with keyword lists.

Limitations of keyword lists

The use of lexicons has limits. Here are the most common ones.

📜 Lists of keywords are not exhaustive. Keyword lists tend to miss tons of words. This is true with all English-language lists that can be found online, and is especially true with lists for lesser frequent languages where open-source lists are very sparse.

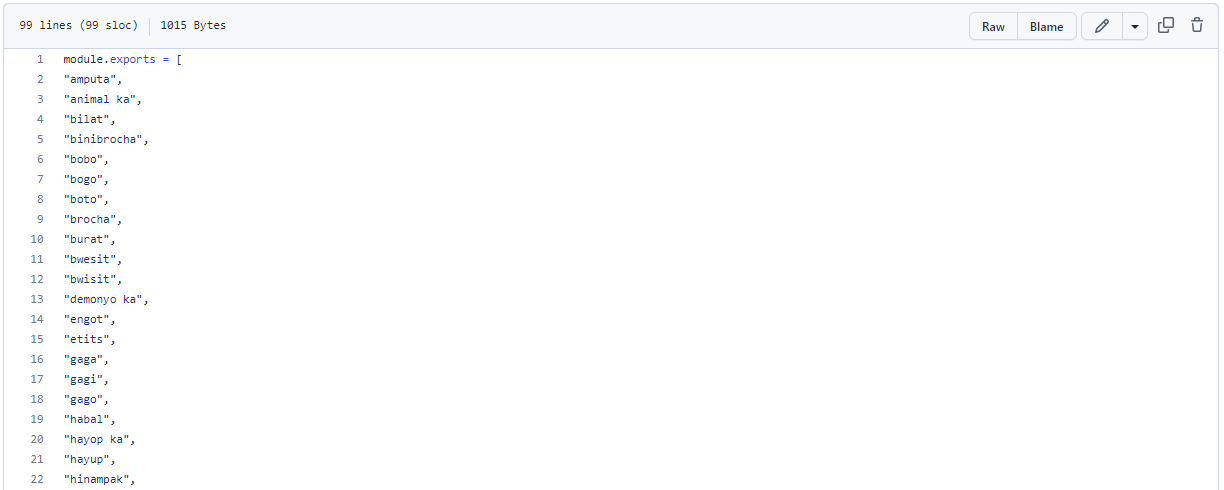

To create a keyword list in English 🇬🇧 🇺🇸, the best way would be to collect and review existing open-source lists, while the best way to do it in Tagalog 🇵🇭 would probably to create it from scratch with the help of native speakers.

An open-source list of keywords for Tagalog, containing only 97 words and missing some word variations

An open-source list of keywords for Tagalog, containing only 97 words and missing some word variations

🌱 Language is constantly evolving. New words and expressions are created. Words get new meanings. Lists need to stay up-to-date and include newer and upcoming language. For example, some slang in English is relatively recent and used by teenagers.

🔀 Not all keywords are equal. Words can vary in intensity: from the most toxic and extreme expressions to ones that can be acceptable in some settings or circumstances. Words belong to different types of toxic content, and need to be handled differently depending on their type: sexual, hate, insults, profanities, inappropriate terms, extremism, etc.

🤨 Some bad words are context-dependent. A bad word can have several meanings and therefore be used as an acceptable word in some contexts.

Some bad words are ok in some contexts and bad in others, depending on who the writer/receiver is, and depending on the overall conversation.

🔞 Some texts are unsafe but they do not contain specific bad words.

The recall is far from perfect with keyword lists, as many instances of toxic/unwanted content will not contain any particular bad words:

🕵️ Simple or naive implementations can easily be circumvented or fooled by end-users. Obfuscation is common and should be addressed (more on this later).

🗺️ Bad words are culture-dependent. We cannot simply translate keywords from one language to another. For example, there are not as many bad words related to religions in Hindi 🇮🇳 as in English 🇬🇧 🇺🇸, as religions are highly respected in countries where Hindi is spoken.

💬 In addition to the above limitations, keyword lists must also consider specific characteristics of the languages.

Related to the type of language:

- Some languages like Turkish 🇹🇷 are agglutinative. They use affixes that each have a specific function and that are easy to recognize in the word. The good news is that it is easy to find all the possible affixes used in the language. Rules can be created to generate all possible variations of the words.

- çiçek (flower), çiçek-ler (flowers), çiçek-ler-im (my flowers)

- Some languages like Swedish 🇸🇪, Polish 🇵🇱 or French 🇫🇷 are fusional languages. They also use affixes but these are more difficult to recognize in the word and they can have several functions. These languages are often called irregular. Conjugations and grammatical agreements can only be partially solved with automated rules as there are many exceptions that make the rules very complex. Another way to solve these is by adding all possible variations to the keyword lists, but the lists are then really big and could cause false positive detection. Here are some French examples:

- fleur (flower), fleurs (flowers)

- cheval (horse), chevaux (horses) and not chevals

Related to the different writing systems or rules:

- In Chinese 🇨🇳 for example, there are not necessarily spaces between words. A very good tokenization is needed to be able to split the text correctly before searching for bad words. Keywords can not work alone. For example, the text 创造性是重要的 (creativity is important) contains the character 性 that could be a sexual word in some contexts but that shouldn't be detected here as it is part of the word 创造性 (creativity).

- Several alphabets are used in Hindi (Latin and Devanagari) 🇮🇳 or in Japanese (Hiragana, Katakana and Kanji) 🇯🇵. This implies having different lexicons depending on the alphabet to detect all possible bad words. For example, the word घर (house) in Hindi would be transliterated into ghara. Also, some Hindi words that are written in the Devanagari script might be transliterated into several Latin versions.

Language models to the rescue

Another way to automatically moderate content is to use language models that are trained on large annotated datasets. These datasets contain potentially offensive and harmful content.

One of the main advantages is that models make predictions while taking context into consideration, as well as culture. Because they do not only rely on specific words, they are able to better understand the intent of the text author.

For example, the word woody will appear in mainly two contexts (to describe nature and forest or to refer to male genitals in a sexual way - or also to refer to a famous animated character) but only one of them is problematic.

Models can learn the context in which the words appear when they are used in an unacceptable way. That is why they are less likely to make mistakes regarding the meaning of words.

Using keyword lists and making them work in 2026

When working with language models, it is not always easy to find or to create relevant annotated datasets matching what we want to detect.

Also, large datasets are generally unbalanced ⚖️ and rarely contain as many problematic texts as safe texts. For example, promising performances for hate speech detection are obtained with transformers-based approaches but the models "are still weak in terms of macro F1 performance [...], indicating the great difficulty in achieving high precision and recall rates of identifying the minority hatred tweets from the massive tweets" (Malik et al., 2022).

Datasets do not always contain profanity that are acronyms, abbreviations or obfuscated words for example. Models that are trained on such datasets do not work well for any possible cases.

Models are not efficient enough to detect all the ways someone could try to bypass a moderation system.

There are millions of possible obfuscated words, e.g. variations of bad words used to circumvent basic word-based filters, for one given bad word. Let’s see some examples of possible variations of words:

Repetitions

Repeating letters is the most straightforward change, and probably the easiest one to counter. But simple filters that remove repeated letters don't necessarily work. beet and bet don't have the same meaning in English. Some languages accept 3- or 4-letter repetitions. The Hebrew word ממממניו (pronounced mimemamnayv, meaning "from those who fund him") contains four times the same letter in a row.

Replacements

Letter replacements can become very creative. Letters are replaced with punctuation, symbols, digits or even other letters.

Emoji obfuscation

Replacing letters, groups of letters with emojis is also a common way to evade filters. Emojis can be used to replace the entire word it represents (like star in rockstar) but also a group of letters or a word having a similar sound (like anker which sounds like anchor) or simply a single letter (such as w).

Users tend to be very creative when it comes to emojis, and can even express intentions, sentences through emojis, or use emojis as modifiers.

Insertions

Inserting extra spaces or punctuation in between the letters of the word is a simple way to evade naive filters. Humans can easily read a word with extra inserted non-letter characters. Words become harder to read with inserted letters, such as in the above example, but can still be read.

Special characters

If users can use the full UTF-8 set then they have access to roughly a million of character points, many of which look very similar to a human eye, but not to a computer. Here are just a few examples, out of hundreds of possible characters resembling p and a:

| p | ρ | ϱ | р | ⍴ | ⲣ | 𝐩 | 𝑝 | 𝒑 |

| LATIN SMALL LETTER P (0070) | GREEK SMALL LETTER RHO (03C1) | GREEK RHO SYMBOL (03F1) | CYRILLIC SMALL LETTER ER (0440) | APL FUNCTIONAL SYMBOL RHO (2374) | COPTIC SMALL LETTER RO (2CA3) | MATHEMATICAL BOLD SMALL P (1D429) | MATHEMATICAL ITALIC SMALL P (1D45D) | MATHEMATICAL BOLD ITALIC SMALL P (1D491) |

| a | ɑ | α | а | ⍺ | 𝐚 | 𝑎 | 𝒂 | 𝒶 |

| LATIN SMALL LETTER A (0061) | LATIN SMALL LETTER ALPHA (0251) | GREEK SMALL LETTER ALPHA (03B1) | CYRILLIC SMALL LETTER A (0430) | APL FUNCTIONAL SYMBOL ALPHA (237A) | MATHEMATICAL BOLD SMALL A (1D41A) | MATHEMATICAL ITALIC SMALL A (1D4EE) | MATHEMATICAL BOLD ITALIC SMALL A (1D482) | MATHEMATICAL SCRIPT SMALL A (1D4B6) |

Spelling mistakes and phonetic variations

Here again, users can be creative in the way they spell words and expressions. Phonetic variations are very language-specific, so that any attempt to detect and substitute those has to take into account the specific pronunciation rules of each language.

Leet speak

Leet is a way of spelling words and replacing letters by making heavy use of codified digit and punctuation substitutions. Here are just a few examples for D:

| ) | |) | (| | [) | I> | |> | ? | T) | I7 | cl | |} | |] |

Images

Another way to evade filters would be for users to use Images or Videos in which they include the text they want to convey.

Using a solution to Moderate Text in Images and Videos can be helpful.

Embedded words

Removing spaces in between the letters of the word is also a simple way to evade naive filters. Humans can still understand the intended meaning, such as in the above example.

While automated rules can look for embedded words, this can generate large numbers of false positives. For instance the word ass, is embedded in safe words such as amass or bassguitar.

Keyword lists are the most suitable solution for obfuscated words detection.

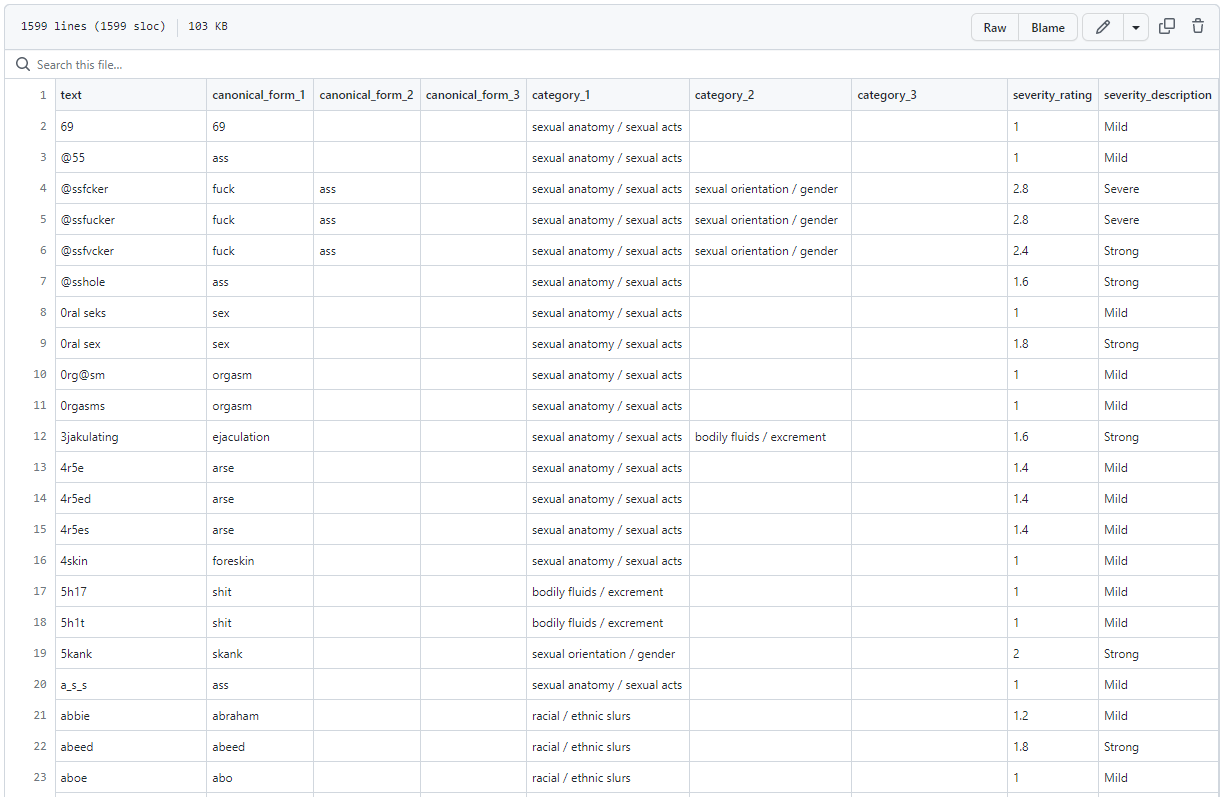

For this to work, words must be combined with rules. Note that there are sometimes open-source word lists such as the one below from Surge AI available on GitHub that contain obfuscation cases. This is not the best way to handle obfuscations: it makes the lists bigger and still not exhaustive as there are thousands of possible variations.

List of keywords from Surge AI containing some but not all possible obfuscations

List of keywords from Surge AI containing some but not all possible obfuscations

They are also useful to prefilter and reduce the data. This way, every potentially unsafe word (even words that are context-dependent like woody) is flagged and human moderators can focus on a shorter sample to be verified. It would make their work easier by making the task less time-consuming and less harmful.

Keywords can be used to retrieve data related to topics that are more difficult to detect. Words like suicide, hurt, anxious or die could be part of a list used as a first filter to detect self-harm for example.

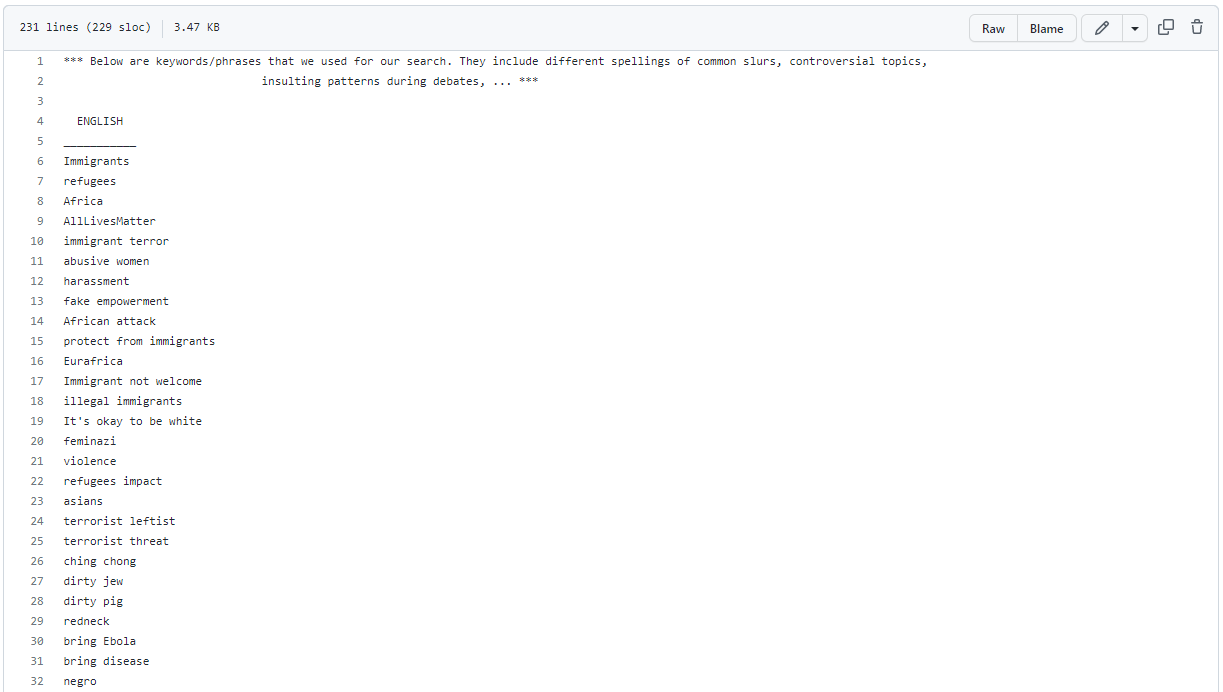

Keyword lists are also convenient to focus on a specific topic to detect, such as the open-source list below (Ousidhoum et al., 2019 - available on GitHub) which only contains words and expressions related to hate speech.

List of keywords focusing on hate speech detection

List of keywords focusing on hate speech detection

Knowing the exact word that makes the text problematic is helpful if we want to determine intensity levels, thresholds or subcategories. As an example, the word stupid is an insult but it is not the most offensive insult. It should probably not be processed the same way as a very offensive word. Also, when detecting extremist content ❌ for example, it is interesting to know if the detected word refers to the name of a group, a slogan, a symbol, and if it is legal or not. And it is the same for medical drugs 💊: is it a drug used against depression? Or to lose weight? Is a prescription needed to get it?

Keyword lists can also be used to handle code-switching, which often occurs among speakers with multiple native languages or for languages that can be written with different scripts, such as languages spoken in India 🇮🇳 or in Nepal 🇳🇵 that use Devanagari and Latin alphabets.

Finally, lexicons are a quick and easy solution to run tests, especially on large amounts of data. They allow a simple but insightful analysis of the kind of problematic content found in the data.

Hybrid moderation

Language models are far from perfect and won't detect just any problematic text. Lexicons of keywords are still heavily used and, if used with smart de-obfuscation and an advanced taxonomy, can be used to detect and handle obfuscated toxicity. This is quite typical with user-generated content. They are also very useful to flag the position of a problematic word in a text.

Combining keywords, automated rules and models while considering the way languages work is a powerful way to combine the best of both worlds.

For a hosted, low-code solution to this, get started.

Read more

Sexual abuse and unsolicited sex — Content Moderation

This is a guide to detecting, moderating and handling sexual abuse and unsolicited sex in texts and images.

How to spot AI images and deepfakes: tips and limits

Learn how to recognize AI-Generated images and deepfakes. Improve your detection skills to stay ahead of the game.