Unsafe organizations and individuals — Content Moderation

Page contents

- Definitions: Unsafe organizations and individuals in user content

- What's at stake

- How to detect unsafe organizations and individuals

- What actions should be taken with unsafe organizations and individuals related content

Definitions: Unsafe organizations and individuals in user content

Unsafe organizations are often illegal and related to:

- terrorism

- planning of unauthorized events (this could mean terrorist attacks, but also unpermitted protests that could lead to dangerous situations)

- sectarian groups

- extremist groups

- organized crime groups

Content related to unsafe organizations may include names of people or movements, slogans or ideologies such as antisemitism, white supremacism, Islamism or anti-government ideas.

As user-generated content grows on social media, so does extremist content: for example, "between August 1, 2015 and December 31, 2017, [Twitter] suspended 1,210,357 accounts [...] for violations related to the promotion of terrorism" (more info here).

What's at stake

Much of the content promoting or supporting terrorism, unauthorized events or sectarian groups is actually illegal in the UK, the US and the EU.

Extremism is not always illegal, but it is generally not tolerated by platforms and application users. Also, the promotion of extremism often leads to hate speech and identity attacks towards protected groups, which should not be accepted on platforms hosting user-generated messages.

It is therefore important, if not mandatory, to detect this type of content and report it to legal authorities when necessary.

Ignoring this threatening content may lead to very serious and unsafe situations involving injury or, in the worst case, death.

How to detect unsafe organizations and individuals

Here are some examples of ways a platform could be used for illegal purposes related to unsafe organizations:

- Social media platforms are sometimes used to disseminate propaganda / extremist ideas.

- These platforms are also sometimes used to contact people, especially young people, and get them to join a sectarian or extremist group, for example. Likewise, dating applications can be used to recruit potential terrorists or new members of a group.

- Religious preachers sometimes livestream videos of their extremist teachings or sermons.

- Real-time attacks can be organized through social networks.

- Gaming platforms are sometimes used for combat training.

- Social networks can be used to spread misinformation and hinder the authorities' efforts to keep others safe.

There are several ways to detect these kinds of behaviors, but detection can be difficult because they are often not explicit:

- user reporting

- human moderators

- keyword-based filters

- ML models

User reporting

Platforms should rely on reports coming from their users. Users can report suspicious messages to the trust and safety team so that they are reviewed by moderators, handled by the platform and/or reported to the authorities. It's a simple way to moderate: users just need a way to submit a report. The main disadvantage of user reports is the delay before content is reported and handled by the platform.

To simplify the handling of user reports and distinguish between the possible topics of a message, users should be able to categorize their reports, with categories such as terrorism or sectarianism.
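Here is a minimal sketch of how categorized reports could be triaged. The Python example orders a review queue so that the most urgent categories reach moderators first. The category names, the priority values and the `ReportQueue` class are hypothetical. Each platform would define its own categories and ordering.

```python
import heapq
import itertools
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ReportCategory(Enum):
    # Hypothetical category set; each platform defines its own.
    TERRORISM = "terrorism"
    SECTARIANISM = "sectarianism"
    EXTREMISM = "extremism"
    OTHER = "other"


# Assumed review order: a lower number is reviewed first.
CATEGORY_PRIORITY = {
    ReportCategory.TERRORISM: 0,
    ReportCategory.SECTARIANISM: 1,
    ReportCategory.EXTREMISM: 1,
    ReportCategory.OTHER: 2,
}


@dataclass
class UserReport:
    message_id: str
    reporter_id: str
    category: ReportCategory


class ReportQueue:
    """Surfaces the most urgent report categories first and keeps
    first-in, first-out order among reports of equal priority."""

    def __init__(self) -> None:
        self._heap = []  # entries: (priority, arrival index, report)
        self._arrival = itertools.count()  # tie-breaker preserving arrival order

    def submit(self, report: UserReport) -> None:
        priority = CATEGORY_PRIORITY[report.category]
        heapq.heappush(self._heap, (priority, next(self._arrival), report))

    def next_for_review(self) -> Optional[UserReport]:
        """Pop the next report for a moderator, or return None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

Suppose a report categorized as `OTHER` arrives before one categorized as `TERRORISM`. `next_for_review()` still returns the terrorism report first. A heap keeps both submission and retrieval at O(log n), even with a large backlog.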

Human moderators

Human moderators are effective at detecting content related to unsafe organizations because they are skilled at picking up implicit information. Yet such content needs to be detected as soon as possible, and lack of speed is a common issue with human moderation.

Also, detecting extremist content can be challenging even for human moderators. Some slogans or terms used by extremist groups can be unknown to moderators. Such examples would be:

To help detect these, make sure that moderators are trained on the latest trends used by extremist groups. Encourage them to seek and share feedback on flagged items to spur continuous improvement and keep up with the ever-evolving behaviors of your community. One suggestion is to maintain a database of these groups with their names, logos, slogans, key members, etc. for quick reference during reviews. This would likely lower the error rate and speed up decision-making.

Here are some examples of resources that can be used for a quick reference to identified unsafe groups:

- Foreign Terrorist Organizations from the US Department of State

- Listed terrorist entities from Public Safety Canada

- Hate Symbols Database and Glossary of Extremism from ADL (Anti-Defamation League)

- Mapping Militants Project and Global Right-Wing Extremism Map from Stanford's Center for International Security and Cooperation
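A reference database like the one suggested above can start as a simple in-memory index. The Python sketch below maps normalized names, aliases, slogans and key members to group records, so a moderator can look up an unfamiliar term during review. The `KnownGroup` and `GroupReference` names and all field names are illustrative assumptions. Entries would be populated from lists such as those above. Logos would need separate image matching.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class KnownGroup:
    name: str
    aliases: List[str] = field(default_factory=list)
    slogans: List[str] = field(default_factory=list)
    key_members: List[str] = field(default_factory=list)
    logo_refs: List[str] = field(default_factory=list)  # e.g. paths to reference images
    source: str = ""  # which reference list the entry was taken from


def normalize(term: str) -> str:
    # Case-fold and collapse runs of whitespace so lookups are forgiving.
    return " ".join(term.casefold().split())


class GroupReference:
    """In-memory index from names, aliases, slogans and key members to groups."""

    def __init__(self, groups: List[KnownGroup]) -> None:
        self._index: Dict[str, List[KnownGroup]] = {}
        for group in groups:
            for term in [group.name, *group.aliases, *group.slogans, *group.key_members]:
                bucket = self._index.setdefault(normalize(term), [])
                if group not in bucket:  # avoid duplicates when terms repeat
                    bucket.append(group)

    def lookup(self, term: str) -> List[KnownGroup]:
        return list(self._index.get(normalize(term), []))
```

Terms are case-folded and whitespace is collapsed before indexing, so a lookup for `"Example  Slogan"` and one for `"example slogan"` hit the same entry.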

Keyword-based filters

Keywords are a good way to pre-filter comments and messages by detecting words related to unsafe organizations, such as:

- antisemitism: nazi, hitler, 6mwe, etc.

- white supremacism: kkk, white lives matter, etc.

- islamism: isis, al qaeda, etc.

- anti-government ideas: acab, etc.

Although the above keywords are relevant, we should keep in mind the context in which words are used and the differences in language and culture. For example, kkk is often used to express laughter in Portuguese, which could lead to false positives. For more information on existing lists of keywords, you can refer to this repository.
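Below is one way such a pre-filter could be implemented in Python, using the example keywords listed above. It matches whole words only, so `isis` is not flagged inside `crisis`. It also skips keywords marked as ambiguous for the message's language, as with `kkk` in Portuguese. The `LANGUAGE_EXCEPTIONS` table is hypothetical, and so is the assumption that each message arrives with a detected language code. The output is meant to route messages to human review, not to make decisions on its own.

```python
import re
from typing import Dict, List, Set, Tuple

# Example keywords from the lists above, grouped by ideology.
KEYWORDS: Dict[str, List[str]] = {
    "antisemitism": ["nazi", "hitler", "6mwe"],
    "white_supremacism": ["kkk", "white lives matter"],
    "islamism": ["isis", "al qaeda"],
    "anti_government": ["acab"],
}

# Hypothetical per-language exceptions: keywords too ambiguous to flag in that
# language (e.g. "kkk" is commonly used for laughter in Portuguese).
LANGUAGE_EXCEPTIONS: Dict[str, Set[str]] = {"pt": {"kkk"}}


def compile_keyword(keyword: str) -> re.Pattern:
    # Whole-word, case-insensitive match that tolerates extra whitespace
    # inside multi-word keywords ("al   qaeda").
    parts = [re.escape(part) for part in keyword.split()]
    return re.compile(r"\b" + r"\s+".join(parts) + r"\b", re.IGNORECASE)


PATTERNS = [
    (category, keyword, compile_keyword(keyword))
    for category, keywords in KEYWORDS.items()
    for keyword in keywords
]


def keyword_hits(text: str, language: str) -> List[Tuple[str, str]]:
    """Return (category, keyword) pairs found in text, skipping keywords
    listed as exceptions for the message's language."""
    skipped = LANGUAGE_EXCEPTIONS.get(language, set())
    return [
        (category, keyword)
        for category, keyword, pattern in PATTERNS
        if keyword not in skipped and pattern.search(text)
    ]
```

With this setup, `keyword_hits("kkk que engraçado", "pt")` returns an empty list. The same keyword in an English message is flagged under `white_supremacism`.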

Also, note that criticizing government officials or organizations is usually allowed as long as it is not threatening or calling for violence (acab, for instance, does not explicitly call for violence).

With these detected keywords, human moderators can focus on a smaller sample to verify. However, this method is not well suited to detecting implicit content and carries a high risk of missing dangerous messages. The example below, for instance, does not contain any word related to unsafe organizations but could still be a threat depending on the context:

ML models

ML models can complement keyword filters and human moderators by detecting such implicit cases, provided they are trained on annotated datasets containing examples of content promoting or supporting unsafe organizations.
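To illustrate learning from annotated examples, the sketch below trains a multinomial Naive Bayes classifier in plain Python. Naive Bayes is used here only because it fits in a few lines. It is not necessarily what production moderation systems use. The six training sentences are a hypothetical toy dataset, far too small for real use.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with Laplace (add-alpha) smoothing."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha

    def fit(self, examples: List[Tuple[str, str]]) -> "NaiveBayesClassifier":
        self.class_counts: Counter = Counter()
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        self.vocab = set()
        for text, label in examples:
            self.class_counts[label] += 1
            for token in tokenize(text):
                self.word_counts[label][token] += 1
                self.vocab.add(token)
        return self

    def predict_proba(self, text: str) -> Dict[str, float]:
        total_docs = sum(self.class_counts.values())
        log_scores = {}
        for label, n_docs in self.class_counts.items():
            denom = sum(self.word_counts[label].values()) + self.alpha * len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for token in tokenize(text):
                if token in self.vocab:  # ignore words never seen in training
                    score += math.log((self.word_counts[label][token] + self.alpha) / denom)
            log_scores[label] = score
        # Convert log scores to probabilities (softmax, shifted for numerical stability).
        top = max(log_scores.values())
        weights = {label: math.exp(s - top) for label, s in log_scores.items()}
        total = sum(weights.values())
        return {label: w / total for label, w in weights.items()}

    def predict(self, text: str) -> str:
        probs = self.predict_proba(text)
        return max(probs, key=probs.get)


# Tiny hypothetical training set; a real one would contain many
# moderator-annotated messages.
TRAINING_DATA = [
    ("join the group and fight for the cause", "unsafe"),
    ("the attack will happen at the rally", "unsafe"),
    ("we will punish the traitors", "unsafe"),
    ("join us for the football match", "safe"),
    ("the concert will happen at the park", "safe"),
    ("great match today", "safe"),
]

model = NaiveBayesClassifier().fit(TRAINING_DATA)
```

On this toy data, `model.predict_proba("fight the traitors")` assigns the higher probability to `unsafe`, even though none of the keywords listed earlier appear in it. This is the kind of case that keyword filters miss.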

Automated moderation can also be applied to images and videos submitted by users, by detecting offensive symbols representing hateful ideas (Nazi-era or supremacist symbols) as well as terrorist flags and symbols.

What actions should be taken with unsafe organizations and individuals related content

As soon as unsafe content is found and verified, platforms and applications must handle it according to its severity.
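One way to make this decision explicit is a small routing table from priority level to actions. In the Python sketch below, the three input flags are assumed to come from a moderator's verification. The split between medium priority (explicit extremist promotion) and low priority (less obvious content) is an illustrative assumption. So are all the action names, which are based on the options described in this guide.

```python
from enum import Enum
from typing import Dict, List


class Priority(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Hypothetical action names mirroring the options described in this guide.
ACTIONS: Dict[Priority, List[str]] = {
    Priority.HIGH: ["report_to_authorities", "remove_content"],
    Priority.MEDIUM: ["temporary_or_permanent_ban", "log_incident"],
    Priority.LOW: ["log_incident", "monitor_user"],
}


def assign_priority(references_illegal_org: bool,
                    suggests_upcoming_threat: bool,
                    explicit_extremism: bool) -> Priority:
    """Map a moderator's verified findings to a priority level."""
    if references_illegal_org or suggests_upcoming_threat:
        return Priority.HIGH  # e.g. a planned terrorist attack
    if explicit_extremism:
        return Priority.MEDIUM
    return Priority.LOW


def plan_actions(references_illegal_org: bool,
                 suggests_upcoming_threat: bool,
                 explicit_extremism: bool) -> List[str]:
    priority = assign_priority(references_illegal_org, suggests_upcoming_threat, explicit_extremism)
    return list(ACTIONS[priority])  # copy so callers cannot mutate the table
```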

High-priority messages

Messages referring to illegal organizations or suggesting an upcoming threat such as a terrorist attack are considered high-risk messages.

When encountering such content, platforms should react extremely quickly by reporting it to the relevant national authorities and providing them with all the necessary information.

Rules and legislation regarding such content and actions vary by country. Below are some examples:

- In the EU, Regulation (EU) 2021/784 on addressing the dissemination of terrorist content online has applied since 7 June 2022. It allows national competent authorities to send removal orders to hosting service providers, which must then remove the terrorist content, or disable access to it, within one hour of receiving the order.

- In the UK, a 2019 law gives law enforcement authorities the power to issue notices requiring online platforms to remove terrorist content within a specified timeframe. Failure to comply with a notice can result in fines or imprisonment for platform operators.

- In Australia, the Criminal Code Amendment (Sharing of Abhorrent Violent Material) Act 2019 makes it an offense for online platforms to fail to remove abhorrent violent material, including terrorist content, in a timely manner. The act also sets out penalties for non-compliance, including fines and imprisonment.

- In China, under Article 19 of the Counter-Terrorism Law of 2015, when information containing terrorist or extremist content is discovered, its transmission must be promptly stopped and it must be reported to the appropriate authorities.

- In the US, there are currently no specific federal laws instructing platforms to remove such content immediately. However, the US joined the Christchurch Call in 2021. This international initiative lays out a best-practice framework for technology platforms to identify, review and remove terrorist content and activity on social media.
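When a jurisdiction sets a hard deadline, tracking it per case helps avoid missing it. The Python sketch below computes the deadline for the EU case, where Regulation (EU) 2021/784 gives hosting service providers one hour from receipt of a removal order. The window is a parameter because the other jurisdictions listed above use different or unspecified timeframes. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Regulation (EU) 2021/784: one hour from receipt of a removal order.
# Other jurisdictions use different or unspecified windows, hence the parameter.
EU_REMOVAL_WINDOW = timedelta(hours=1)


def removal_deadline(received_at: datetime,
                     window: timedelta = EU_REMOVAL_WINDOW) -> datetime:
    if received_at.tzinfo is None:
        raise ValueError("use timezone-aware timestamps to avoid ambiguity")
    return received_at + window


def time_remaining(received_at: datetime, now: datetime,
                   window: timedelta = EU_REMOVAL_WINDOW) -> timedelta:
    """Time left before the deadline, or timedelta(0) once it has passed."""
    return max(removal_deadline(received_at, window) - now, timedelta(0))


def is_overdue(received_at: datetime, now: datetime,
               window: timedelta = EU_REMOVAL_WINDOW) -> bool:
    return now > removal_deadline(received_at, window)
```

For example, an order received at 12:00 UTC leaves 15 minutes at 12:45 UTC. Requiring timezone-aware timestamps avoids errors when orders and moderators are in different time zones.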

In any case, the platform or application should not handle the issue on its own and should refer it to the relevant legal authorities.

For more information on regulations by countries, you can refer to this guide that was compiled by Tech Against Terrorism and that describes the state of online regulations for terrorism-related content.

Medium and low-priority messages

These messages are less urgent because they are not illegal or because they are less explicit. A user promoting extremist ideas is probably less of a risk than a user expressing a desire to kill anyone who disagrees with their extremist ideas.

In such cases, platforms could decide to ban the user temporarily or permanently. They could also wait and monitor whether the user posts more similar comments.

Setting up a moderation log in which moderators record incidents involving a specific user is one way to track potentially at-risk users.

The process defined for high-priority situations can then be applied to users who turn out to be at risk.
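A moderation log can be as simple as a per-user list of incidents. The Python sketch below records incidents and signals when a user reaches a threshold, at which point the high-priority process would take over. The `Incident` fields and the default threshold of three are hypothetical. A single clearly threatening message should still be escalated immediately rather than waiting for the count.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List


@dataclass
class Incident:
    user_id: str
    message_id: str
    reason: str
    moderator_id: str
    logged_at: datetime


class ModerationLog:
    """Per-user incident history with a simple escalation rule."""

    def __init__(self, escalation_threshold: int = 3) -> None:
        # Hypothetical rule: once a user accumulates this many logged incidents,
        # their case is handed to the high-priority process.
        self.escalation_threshold = escalation_threshold
        self._incidents: Dict[str, List[Incident]] = defaultdict(list)

    def record(self, incident: Incident) -> bool:
        """Store the incident; return True if the user should now be escalated."""
        history = self._incidents[incident.user_id]
        history.append(incident)
        return len(history) >= self.escalation_threshold

    def history(self, user_id: str) -> List[Incident]:
        # .get() avoids creating empty entries for users with no incidents.
        return list(self._incidents.get(user_id, []))
```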

It is important to remember that, in case of any doubt, the issue should still be reported to local authorities, to avoid a mistake that could have very serious consequences. Social media and other platforms hosting user-generated content have become a preferred means of promoting extremist ideas, especially among young or vulnerable users.

Read more

Harassment, bullying and intimidation — Content Moderation

This is a guide to detecting, moderating and handling harassment, bullying and intimidation in texts and images.

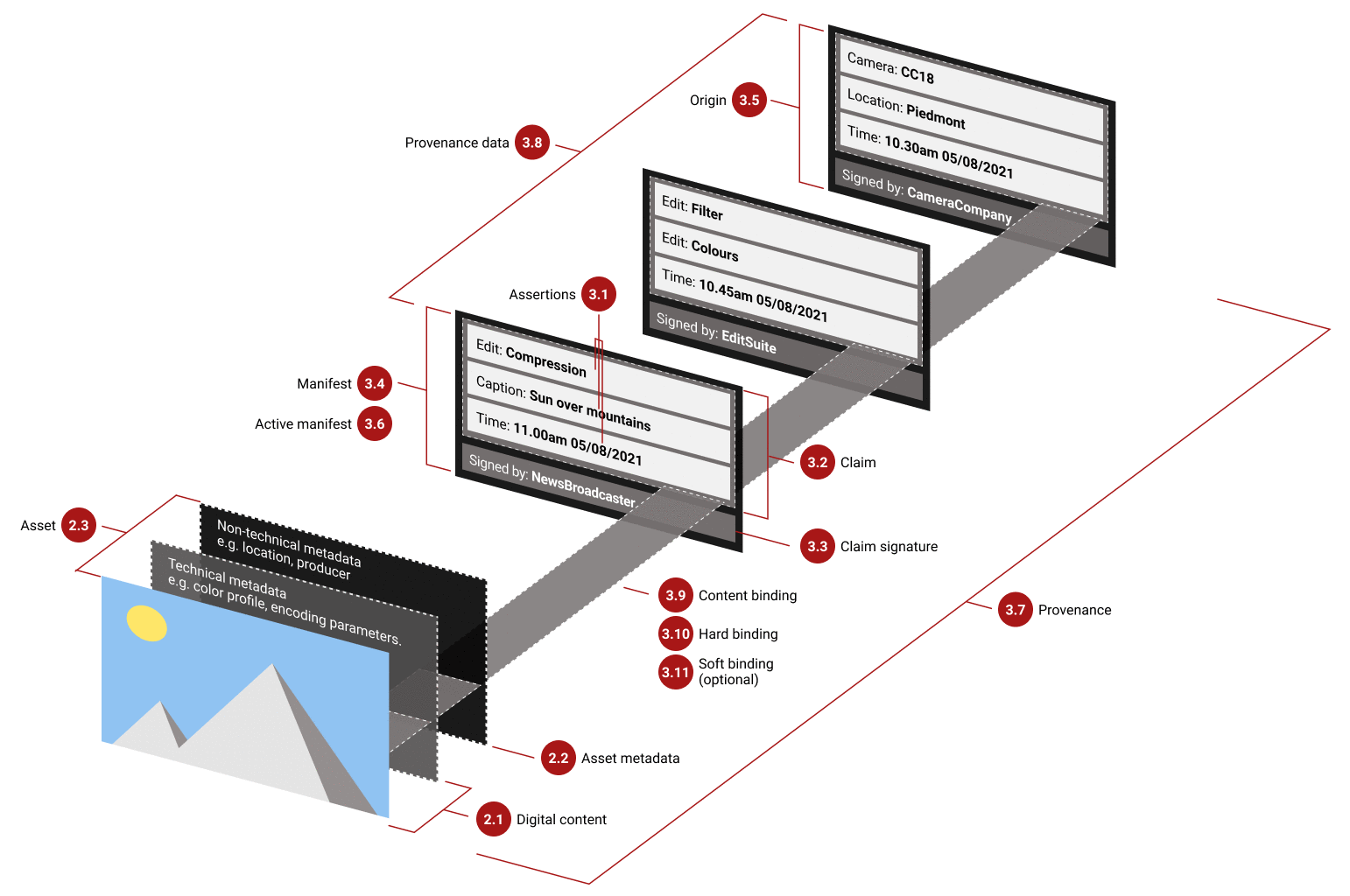

Python C2PA Tutorial: A Hands-on Guide to Verifying Images and Detecting Tampering

A developer's guide to C2PA, showing how to read metadata, detect tampering, and verify content authenticity with Python examples.