Generative AI in 2026, the Trust & Safety guide

AI-generated (GenAI) images are becoming increasingly common online. While they open up possibilities for creative expression and exploration, they can also become a tool for spreading misinformation and generating illegal imagery. Trust & Safety teams will therefore need to keep close tabs on this technology, on the regulatory environment, and on approaches to combating malicious uses of GenAI.

Current GenAI developments

Generative AI refers to a class of deep learning models that learn the characteristics of their training data in order to create new content with similar patterns.

This article focuses on image and video generation. The most popular models here are:

| Model | Latest versions | Description |
|---|---|---|
| Stable Diffusion (Stability AI) | SDXL, SD2.1 | Open-source deep learning text-to-image model based on generative diffusion techniques; one of the most popular models among users, it has spawned a large number of variants and derived models |
| MidJourney (MidJourney Inc) | 6.0 | Closed-source text-to-image tool accessed through a Discord bot; it offers users several variations and model versions to choose from, making it very versatile |
| DALL-E (OpenAI) | 3 | Closed-source text-to-image model available through the OpenAI API, ChatGPT and Bing Image Creator, offering extensive customization and advanced capabilities |
| Imagen (Google) | | Closed-source text-to-image diffusion model with strong photorealism |
| Firefly (Adobe) | | Text-to-image model mainly used in design and embedded into Adobe products |

To generate an image with one of these models, you provide a prompt: a natural-language input that the GenAI model uses to generate an output (here, an image).

Let's say we want to generate an image with the following prompt: "A teenager on a computer being tricked by AI-generated images, cartoon style". Below are examples of AI-generated images that we would obtain.

Stable Diffusion

DALL-E 3

MidJourney 5.2

Firefly

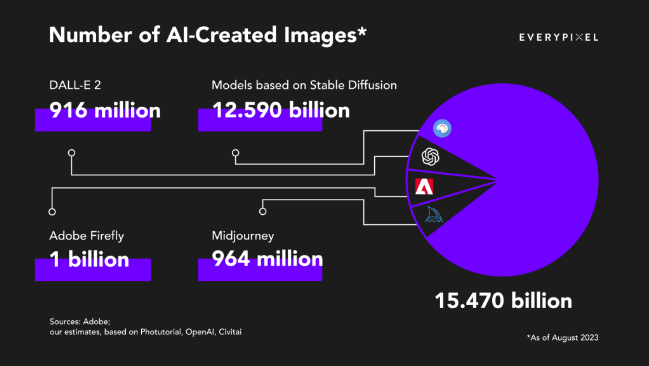

All these tools have become very popular because they let anyone generate an image from a natural-language description. According to an Everypixel study, more than 15 billion images had been created with text-to-image algorithms as of August 2023: as many images as photographers took in the 150 years from the first photograph in 1826 until 1975.

Number of AI-generated images as of August 2023 (Everypixel, 2023)

Anyone can now become an artist and create a striking, beautiful or impressive image. But because these tools are new, accessible to the general public and used by a growing number of people, they also have negative, even dangerous, sides that urgently need to be regulated.

GenAI imagery and regulations

Generating funny, informative or artistic visual content is becoming increasingly common, thanks to how easy it is and how impressive the results are. However, GenAI comes with its own set of challenges, including:

- Disinformation through deepfakes or fake news, especially political deepfakes such as the well-known video created by Hollywood filmmaker Jordan Peele in which former US President Barack Obama appears to insult Donald Trump.

- Exploitation of vulnerable groups of people such as minors.

- Impersonation and image-based abuse, such as fake nudes.

- Biases that can replicate stereotypes and thereby fuel hate or discrimination.

- Manipulation that encourages people to harm themselves or others.

- Misuse by problematic or terrorist organizations.

- Intellectual property or copyright infringement.

Note that the term deepfakes, as we use it, covers both AI-generated content that spreads false information about someone and any other content that a human has digitally modified to mislead other users.

Generative AI and regulations

Illegal imagery

Images and videos that are AI-generated are subject to the same rules as real images. The fact that the image is fictional and that the people in the image are not "real" does not change the legality of said image. In short:

- AI-generated images featuring abuse, such as CSAM (Child Sexual Abuse Material), are illegal in all jurisdictions. An IWF (Internet Watch Foundation) report from October 2023 shows a strong increase in photorealistic AI-generated CSAM and recommends that governments cooperate to review laws so that they better cover AI-generated CSAM.

- AI-generated images promoting terrorism, hate or extremism fall under the same regulations as real images.

- AI-generated images containing impersonation (such as a generated image showing a real person's face) are treated differently depending on the jurisdiction:

  - In the US, publicity rights, also called personality rights, allow individuals to control the commercial use of their name, image, likeness or other identifying aspects of their identity. Some states have specific laws against deepfakes, though these remain rare: for instance, Texas bans deepfakes created to influence elections, Virginia prohibits pornographic deepfakes, and California bans both.

  - In the UK, there is currently no specific right protecting an individual's own image.

  - In the EU, the GDPR (General Data Protection Regulation) requires that real people be informed if they appear in a deepfake. Victims of deepfakes are also protected by the ECHR (European Convention on Human Rights), in particular by the right to privacy (Article 8) and the right to freedom of expression (Article 10).

  - Some countries, like China, have specific regulations on impersonation in deepfakes: rules that took effect in January 2023 protect people from being impersonated by deepfakes without their consent.

- AI-generated images containing intellectual property (logos, designs...) are subject to the same copyright restrictions as real images.

Indicating AI generation or modification

In the EU, the use of AI will soon be regulated by the AI Act, the world’s first comprehensive law on the development and use of AI technologies. GenAI falls under the scope of these regulations.

Companies hosting user-generated content will need to indicate whether a given image or video was AI-generated. This means that companies will have to ask users to label images accordingly and/or automatically detect AI-generated imagery.

Companies developing or deploying GenAI models will further have to comply with the following:

- Develop models in ways that limit the risk of generating illegal content.

- Publish summaries of copyrighted data used for training purposes.

Depending on their scope and risks, some GenAI tools could also be banned, or assessed both before and after being placed on the market, for instance if they are used to manipulate vulnerable groups or are related to biometric identification.

In any case, you should stay informed about the relevant laws and regulations in the regions where your platform operates. Failing to comply with these requirements could have serious legal consequences and damage your reputation.

What companies do - Generative AI

Most platforms currently allow AI-generated content, as long as it adheres to the platform's community standards and advertising policies:

- Facebook does not specifically mention AI-generated content; such content is accepted as long as it complies with the community guidelines.

- LinkedIn's guidelines specify that any misleading or harmful content will be removed, whether AI-generated or not.

- YouTube refers to its 4 Rs (remove violating content, reduce recommendations of borderline content, raise up authoritative sources for news and information, and reward trusted creators) to deal with misleading content, but does not explicitly mention AI-generated content. Quality, verified content is highlighted on the platform alongside additional information from authoritative third-party sources.

- Snapchat addresses GenAI from a misleading-content perspective: manipulated content that is misleading, whether altered by AI or by editing, is not accepted on the platform.

However, some other social media platforms have introduced new rules or tools for such content:

- X has updated its Community Notes feature. Community Notes let users collaboratively attach notes to tweets, adding context about a tweet's content so that people stay informed about potentially misleading content. The "fact-checking" update lets contributors add notes directly to the media attached to a tweet. Because such a note is appended to every copy of the same image shared across the platform, it helps limit the spread of misleading images.

- In its Community Guidelines, TikTok describes an "AI-generated content label" that creators are expected to apply when posting media that is generated or modified by AI. It also encourages users to report AI-generated posts that are not labeled as such. Finally, it lists non-authorized AI-generated content: "AI-generated content that contains the likeness of any real figure, including 1) a young person, 2) an adult private figure, and 3) an adult public figure when used for political or commercial endorsements or any other violations of our policies".
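TikTok's list of non-authorized content reads as a small decision rule. The sketch below encodes the three quoted clauses in Python; it is a hypothetical helper, not TikTok's implementation. The field names (`depicts_real_person`, `is_young_person`, `is_public_figure`, `use`) and the simplification of "political or commercial endorsements" to a `use` label are our own assumptions, and the catch-all "any other violations of our policies" is left to the rest of a moderation pipeline.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GeneratedMedia:
    """Hypothetical metadata attached to an AI-generated post by reviewers or models."""
    depicts_real_person: bool
    is_young_person: bool = False
    is_public_figure: bool = False
    # Hypothetical purpose label, e.g. "political", "commercial", "satire"; None if unknown.
    use: Optional[str] = None


def violates_likeness_rule(media: GeneratedMedia) -> bool:
    """Apply the three quoted clauses of TikTok's rule on real people's likeness."""
    if not media.depicts_real_person:
        return False
    if media.is_young_person:
        return True  # 1) a young person
    if not media.is_public_figure:
        return True  # 2) an adult private figure
    # 3) an adult public figure, only when used for political or commercial endorsements
    return media.use in {"political", "commercial"}


print(violates_likeness_rule(GeneratedMedia(True, is_public_figure=True, use="satire")))  # False
```

Under these clauses alone, satire of a public figure is not flagged, though it could still breach other policies.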

We expect platforms to further update their rules and processes ahead of the upcoming regulations, especially as these issues have already been raised, for instance in the case of political deepfakes: at the beginning of October 2023, US legislators called on Meta to explain why deceptive AI-generated political advertisements were not flagged on Facebook and Instagram.

Identifying AI-generated content

Given the current and upcoming regulations around AI and GenAI, we expect that platforms hosting user-generated content will need to systematically identify and flag AI-generated images and videos.

While platforms will in some cases ask users to self-label or report GenAI content, additional tools will be needed. Two possible approaches are watermarking and automated AI detection.
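Self-labeling and the two automated approaches can be combined into a simple triage step. The sketch below is hypothetical: the signal names, the thresholds (0.9 to label automatically, 0.5 to send for human review) and the three outcomes are illustrative assumptions that would need tuning on a platform's own data.

```python
from enum import Enum


class Action(Enum):
    LABEL_AS_AI = "label_as_ai"
    HUMAN_REVIEW = "human_review"
    NO_ACTION = "no_action"


def triage(self_labeled: bool, watermark_found: bool, ai_score: float,
           label_threshold: float = 0.9, review_threshold: float = 0.5) -> Action:
    """Decide how to handle an upload from three hypothetical signals.

    self_labeled: the uploader flagged the media as AI-generated.
    watermark_found: a known AI watermark was detected in the media.
    ai_score: probability (0 to 1) returned by an AI-generated image classifier.
    """
    # A self-label or a known watermark is strong evidence on its own.
    if self_labeled or watermark_found:
        return Action.LABEL_AS_AI
    # A missing watermark does not prove the image is real, so fall back to the classifier.
    if ai_score >= label_threshold:
        return Action.LABEL_AS_AI
    if ai_score >= review_threshold:
        return Action.HUMAN_REVIEW
    return Action.NO_ACTION


print(triage(self_labeled=False, watermark_found=False, ai_score=0.6))  # Action.HUMAN_REVIEW
```

Routing mid-range scores to humans keeps automatic labels for the cases where the evidence is strongest.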

Watermarking

Watermarking can be used to mark an image as being AI-generated, and later help platforms identify the source of the image.

While watermarks originally consisted of designs or signs simply added on top of digital images, making them easy to edit out, newer watermarks are invisible to the naked eye and embedded into the image itself. They can also be used to identify the generator of the image. Examples include:

- SynthID by Google

- RivaGAN

There are some limitations to the use of watermarks for AI-generated image detection:

- Watermarks need to be added to the image in the first place. Currently, most generators do not include any known watermark, so this approach does not scale.

- In some cases, creators can remove watermarks, helped by open-source repositories that share code for tampering with them.

- Creators can use GenAI models that they control and that don't add any watermark. This is typically how illegal imagery is generated: creators rely on models they run themselves.
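To make the idea of an invisible watermark, and its fragility, concrete, here is a deliberately simple least-significant-bit (LSB) scheme in pure Python. It is not how SynthID or RivaGAN work (both rely on learned, far more robust encoders); the pixel values and the 8-bit payload are illustrative assumptions. Changing a pixel's lowest bit shifts its value by at most 1 out of 255, which the eye cannot see, but any processing that touches those bits erases the mark.

```python
def embed(pixels, payload_bits):
    """Hide payload bits in the least significant bit (LSB) of the first pixels."""
    if len(payload_bits) > len(pixels):
        raise ValueError("payload is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(payload_bits):
        # Clear the lowest bit, then set it to the payload bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract(pixels, n_bits):
    """Read back the lowest bit of the first n_bits pixels."""
    return [p & 1 for p in pixels[:n_bits]]


def quantize(pixels, step=4):
    """Crude stand-in for lossy re-encoding: snap each pixel down to a multiple of step."""
    return [(p // step) * step for p in pixels]


# Hypothetical 8-bit grayscale pixels and an 8-bit "AI-generated" payload.
image = [120, 121, 200, 33, 47, 250, 18, 99, 64, 180]
payload = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed(image, payload)
print(max(abs(a - b) for a, b in zip(image, marked)))  # 1: no pixel moved by more than one level
print(extract(marked, 8) == payload)                    # True: the mark is recovered
print(extract(quantize(marked), 8) == payload)          # False: re-encoding erased it
```

Production watermarks spread the signal across the whole image so that it survives compression and resizing, yet determined attackers can still degrade them.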

Automated Detection of AI-generated content

Detecting AI-generated content can also be done by leveraging AI models specifically trained to recognize AI-generated imagery.

At Sightengine, we have trained a model on millions of examples of real and generated images, spanning photography, digital art, memes and illustrations. Platforms and apps can use the AI-generated image detection model to flag AI-generated content and/or apply specific moderation processes to generated imagery.
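As an illustration, the sketch below builds a request for an image-check endpoint and turns the returned score into a moderation flag. The endpoint URL, the `models=genai` parameter and the `type.ai_generated` response field are assumptions for this example; check the API reference for the exact names before relying on them. The 0.8 threshold is a hypothetical choice.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint; verify against the API reference.
API_URL = "https://api.sightengine.com/1.0/check.json"


def build_check_url(image_url: str, api_user: str, api_secret: str) -> str:
    """Build a GET URL asking the (assumed) genai model to score an image."""
    params = {
        "url": image_url,
        "models": "genai",
        "api_user": api_user,
        "api_secret": api_secret,
    }
    return API_URL + "?" + urlencode(params)


def is_ai_generated(response: dict, threshold: float = 0.8) -> bool:
    """Flag the image if the (assumed) ai_generated score meets the threshold."""
    if response.get("status") != "success":
        raise ValueError(f"API call failed: {response}")
    return response["type"]["ai_generated"] >= threshold


def check_image(image_url: str, api_user: str, api_secret: str) -> bool:
    """Call the API over the network and return the moderation flag."""
    with urlopen(build_check_url(image_url, api_user, api_secret)) as resp:
        return is_ai_generated(json.load(resp))
```

Keeping request building and score interpretation separate from the network call makes the flagging logic easy to test, and scores close to the threshold could be routed to human review instead of being flagged automatically.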
