Illegal traffic and trade — Content Moderation

Page contents

- Definitions: Products involved in illegal traffic and trade

- What's at stake

- How to detect illegal traffic and trade

- What actions should be taken with illegal traffic and trade related content

Definitions: Products involved in illegal traffic and trade

This topic covers a wide range of situations, namely any subject that could relate to illegal traffic or trade, such as:

- weapons: generic words, weapon models, or names of brands selling weapons

- recreational drugs: correctly spelled drug names, but also common slang terms for drugs that are not in the dictionary

- medical drugs: names of medications that could be dangerous because they require a prescription or because they are used to treat pain, obesity, depression, anxiety or insomnia

- alcoholic beverages: generic words, names of drinks, or names of alcohol brands

- tobacco: generic words or tobacco brand names, as well as vaping and e-cigarette products

- chemical products: names of chemicals that could be used for illegal and dangerous purposes, such as making a bomb

What's at stake

You should define your platform's policy while taking into account the cultural and legal context of the countries and states where you operate or plan to expand.

Selling weapons, alcoholic beverages or certain drugs is legal in some countries and illegal in others. For example, recreational use of cannabis is legal in Canada and South Africa but illegal in France, and it was illegal in Germany until April 2024.

Some countries are also stricter than others regarding minors. The United States, for example, has strict rules on alcohol and sets the minimum legal drinking age at 21.

More generally, how you moderate such content depends on your platform's audience. If children and teenagers use your platform or application, they should not be exposed to the trafficking or trade of dangerous products, whatever the local law allows.

Whether to detect each of the categories listed above is also a per-category decision. For example, most social media platforms prohibit buying or selling recreational drugs or medications, but some may allow the sale of tobacco-related products. Facebook's policy states that none of these categories may be traded.
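One way to encode such decisions is a per-region policy table that moderation code consults before acting. The sketch below is hypothetical: the `Category`, `TradePolicy` and `is_trade_allowed` names, the fictitious `XA` region code, and every age and category value are placeholders chosen for illustration, not a statement of any country's law or any platform's policy.

```python
from dataclasses import dataclass, field
from enum import Enum


class Category(Enum):
    WEAPON = "weapon"
    RECREATIONAL_DRUG = "recreational_drug"
    MEDICAL_DRUG = "medical_drug"
    ALCOHOL = "alcohol"
    TOBACCO = "tobacco"
    CHEMICAL = "chemical"


@dataclass
class TradePolicy:
    # Categories whose trade the platform tolerates in a region (placeholder values).
    allowed: frozenset = frozenset()
    # Minimum user age per allowed category; 18 is assumed when a category is missing.
    min_age: dict = field(default_factory=dict)


# Default: no trade of any listed category is allowed.
DEFAULT_POLICY = TradePolicy()

# Hypothetical override for a fictitious region code; real entries need legal review.
POLICIES = {
    "XA": TradePolicy(
        allowed=frozenset({Category.TOBACCO, Category.ALCOHOL}),
        min_age={Category.TOBACCO: 18, Category.ALCOHOL: 21},
    ),
}


def is_trade_allowed(region: str, category: Category, user_age: int) -> bool:
    """Return True if trade content in `category` may be shown to this user."""
    policy = POLICIES.get(region, DEFAULT_POLICY)
    if category not in policy.allowed:
        return False
    return user_age >= policy.min_age.get(category, 18)
```

Unknown regions fall back to the most restrictive policy, so a missing entry errs on the side of removing content rather than allowing it.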

Exceptions are possible: in April 2022, Meta decided to allow users in Sri Lanka to solicit pharmaceutical drugs on Facebook to help cope with an ongoing medical supply crisis.

How to detect illegal traffic and trade

Apps and platforms typically rely on four kinds of detection measures:

- user reporting

- human moderators

- keyword-based filters

- ML models

User reporting

Platforms should make use of reports from their users. Users can flag illegal traffic or trade of dangerous substances and products to the trust and safety team so that it can be reviewed by moderators, handled by the platform and/or reported to the authorities. This is a simple form of moderation: users only need a way to submit a report. Its main disadvantage is the delay, since the content stays visible until someone reports it and the platform handles the report.

To simplify the handling of user reports and distinguish between the products mentioned in a message, users should be able to categorize their reports, for instance as weapon, recreational drug or tobacco.
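A minimal sketch of categorized reports follows. The names (`ReportCategory`, `Report`, `build_review_queues`) are hypothetical, and the prioritization rule is our own assumption: reports are grouped into one queue per category, a repeated report from the same user is ignored, and content reported by more distinct users is reviewed first.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from enum import Enum


class ReportCategory(Enum):
    WEAPON = "weapon"
    RECREATIONAL_DRUG = "recreational_drug"
    MEDICAL_DRUG = "medical_drug"
    ALCOHOL = "alcohol"
    TOBACCO = "tobacco"
    CHEMICAL = "chemical"
    OTHER = "other"


@dataclass(frozen=True)
class Report:
    content_id: str
    reporter_id: str
    category: ReportCategory


def build_review_queues(reports):
    """Group reports by category; within a queue, most-reported content comes first."""
    per_category = defaultdict(Counter)
    seen = set()
    for report in reports:
        if report in seen:  # same user, same content, same category: count once
            continue
        seen.add(report)
        per_category[report.category][report.content_id] += 1
    # most_common() sorts by report count, keeping first-seen order for ties.
    return {
        category: [content_id for content_id, _ in counts.most_common()]
        for category, counts in per_category.items()
    }
```

Separate queues let moderators with specific expertise (for instance on drug slang) pick up the categories they know best.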

Human moderators

Human moderators can also help detect such content, especially since automated approaches can struggle to distinguish actual unauthorized discussions about trading dangerous products from, for example, conversations about preventing their use.

However, human moderation has common drawbacks, such as limited speed and consistency. Moderators may also lack expertise and miss messages containing keywords or expressions they don't recognize. To overcome this, platforms can maintain an up-to-date database of keywords related to dangerous products for moderators to consult when needed.

Keyword-based filters

Keywords are a good way to pre-filter comments and messages by detecting words related to potential illegal traffic or trade, such as:

- weapons: ak-47, gun, kalashnikov, etc.

- recreational drugs: xtc, weed, shrooms, roofies, etc.

- medical drugs: xanax, fentanyl, morphine, etc.

- alcoholic beverages: beer, martini, heineken, vodka, etc.

- tobacco: marlboro, camel, vogue, etc.

- chemical products: nitromethane, nitric acid, etc.

With these keywords, human moderators can focus on a smaller sample of content to review. Keywords are quite effective for detecting such content because the words above are almost always related to problematic content. However, platforms should be careful with words that have several meanings or could lead to false positives depending on the context: the word may appear in a harmless comment, or it may have a harmless meaning, like weed, which can simply refer to plants.
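A keyword pre-filter can be sketched with regular expressions built from the example lists above. This is a minimal illustration, not a production filter: the function and variable names are hypothetical, the lists are far shorter than a real one, and the `AMBIGUOUS` set (words with common harmless meanings, flagged so moderators can deprioritize them) is our own assumption. The filter only flags matches for review; it does not remove anything.

```python
import re

# Keyword lists taken from the examples above; a real list would be much longer.
KEYWORDS = {
    "weapons": ["ak-47", "gun", "kalashnikov"],
    "recreational_drugs": ["xtc", "weed", "shrooms", "roofies"],
    "medical_drugs": ["xanax", "fentanyl", "morphine"],
    "alcoholic_beverages": ["beer", "martini", "heineken", "vodka"],
    "tobacco": ["marlboro", "camel", "vogue"],
    "chemical_products": ["nitromethane", "nitric acid"],
}

# Assumption: words with common harmless meanings are marked as ambiguous.
AMBIGUOUS = {"weed", "camel", "vogue"}


def _compile(words):
    # Longest alternatives first; the lookarounds reject partial words ("gunshot").
    alternatives = "|".join(re.escape(w) for w in sorted(words, key=len, reverse=True))
    return re.compile(rf"(?<!\w)(?:{alternatives})(?!\w)", re.IGNORECASE)


PATTERNS = {category: _compile(words) for category, words in KEYWORDS.items()}


def prefilter(text):
    """Return (category, keyword, is_ambiguous) hits for human review."""
    hits = []
    for category, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            word = match.group(0).lower()
            hits.append((category, word, word in AMBIGUOUS))
    return hits
```

Matching whole words only avoids flagging "gunshot" for "gun", but it cannot tell a garden weed from cannabis; that is why ambiguous hits are marked rather than treated as violations.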

In other situations, keyword detection shows its limits. For example, Grindr users have coined new words and ways to refer to drugs, such as "parTy and play", where the capital T stands for Tina, a slang name for methamphetamine (meth). The capital T is even used on its own to refer to this drug (see screenshot below).

Screenshot of a Grindr profile using the capital T to refer to meth

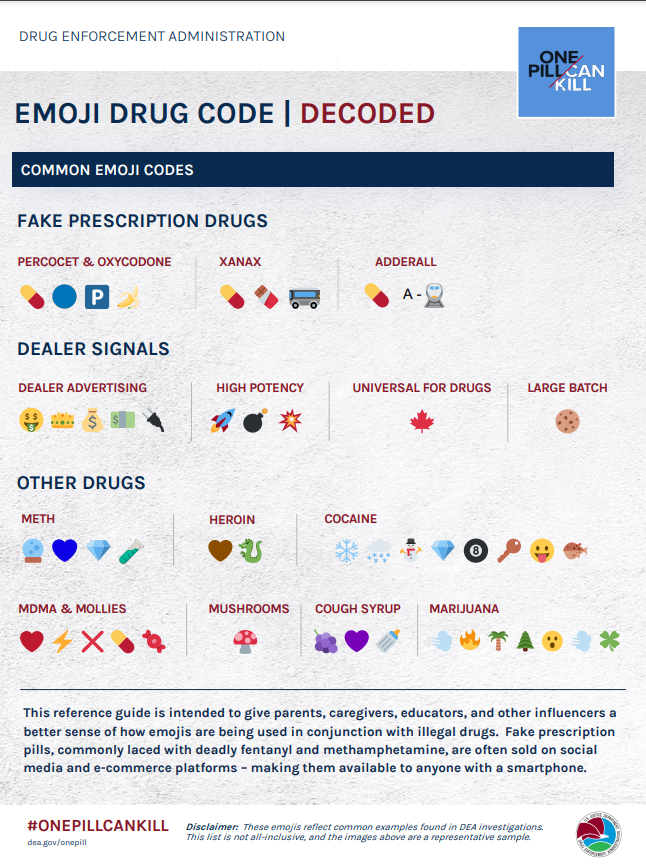
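Patterns like this can sometimes be caught with a targeted heuristic rather than a fixed keyword. The sketch below is a hypothetical regular-expression check, not a method Grindr or any other platform is known to use: it flags lowercase words with an embedded capital T (as in "parTy") and a capital T standing alone as a word. Both patterns also match harmless text, so hits should go to human review.

```python
import re

# Lowercase word with a capital T after its first letter, e.g. "parTy", "parTying".
EMBEDDED_T = re.compile(r"\b[a-z]+T[a-z]*\b")
# A capital T standing alone as a word, e.g. "got T?" (but not "T-shirt").
LONE_T = re.compile(r"(?<![\w-])T(?![\w-])")


def flag_capital_t(text):
    """Return suspicious tokens; these are leads for review, not proof of drug use."""
    return EMBEDDED_T.findall(text) + LONE_T.findall(text)
```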

Users keep coming up with new ways to promote illegal substances on social media, using combinations of characters as well as emojis. In 2021, the US Drug Enforcement Administration (DEA) released a guide for parents to deciphering the "Emoji Drug Code", a graphic showing popular emojis repurposed for drug deals.

Emoji Drug Code

Emoji Drug Code

For instance, a pill emoji can stand for drugs like Percocet, Adderall or oxycodone, heroin can be depicted with a snake or a brown heart, and cocaine with a snowflake. Marijuana is represented by a palm tree or a pine tree, dealers indicate large batches of drugs with a cookie, and high-potency substances are represented with bomb or rocket emojis.
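The emoji meanings listed in the paragraph above can be turned into a lookup table. This is a minimal sketch with hypothetical function names; the rule that a message needs at least two code emojis before it is flagged is our own assumption, since a single palm tree or snowflake is usually innocent. The variation selector U+FE0F, which often follows symbols like the snowflake, is stripped before lookup.

```python
# Emoji meanings as listed above (DEA "Emoji Drug Code"); escapes avoid encoding issues.
EMOJI_CODE = {
    "\U0001F48A": "pills (e.g. Percocet, Adderall, oxycodone)",  # pill
    "\U0001F40D": "heroin",  # snake
    "\U0001F90E": "heroin",  # brown heart
    "\u2744": "cocaine",  # snowflake
    "\U0001F334": "marijuana",  # palm tree
    "\U0001F332": "marijuana",  # evergreen (pine) tree
    "\U0001F36A": "large batch",  # cookie
    "\U0001F4A3": "high potency",  # bomb
    "\U0001F680": "high potency",  # rocket
}
VARIATION_SELECTOR_16 = "\ufe0f"


def decode_emojis(text):
    """Return the drug-code meanings of emojis in text, in order of appearance."""
    cleaned = text.replace(VARIATION_SELECTOR_16, "")
    return [EMOJI_CODE[ch] for ch in cleaned if ch in EMOJI_CODE]


def looks_like_drug_code(text):
    # Assumption: one code emoji alone is weak evidence; require two or more.
    return len(decode_emojis(text)) >= 2
```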

ML models

ML models can complement keywords and human moderators by detecting more ambiguous mentions of dangerous products. Such models are trained on annotated datasets containing references to these products.

Automated moderation can also be applied to images and videos submitted by users, by detecting depictions of weapons, alcohol or drugs.
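To show what training on an annotated dataset means for text, here is a deliberately simple stand-in: a multinomial naive Bayes classifier with Laplace smoothing, written with the standard library only. Production systems typically use far larger models and datasets; the class name, the `trade`/`ok` labels and the six training sentences are all hypothetical toy data invented for this sketch. Unlike a keyword list, the model learns which word combinations suggest a sale ("dm", "prices", "cash only") without any drug name being present.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, texts, labels):
        self.labels = sorted(set(labels))
        self.priors = Counter(labels)
        self.n_docs = len(labels)
        self.word_counts = {label: Counter() for label in self.labels}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {label: sum(c.values()) for label, c in self.word_counts.items()}
        return self

    def log_scores(self, text):
        v = len(self.vocab)
        scores = {}
        for label in self.labels:
            score = math.log(self.priors[label] / self.n_docs)
            for token in tokenize(text):
                if token in self.vocab:  # words never seen in training are ignored
                    count = self.word_counts[label][token]
                    score += math.log((count + 1) / (self.totals[label] + v))
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Hypothetical toy annotations: "trade" marks solicitation, "ok" marks harmless text.
TRAIN = [
    ("selling clean stuff dm for prices", "trade"),
    ("fresh batch available delivery tonight", "trade"),
    ("hmu for pills cash only", "trade"),
    ("great hike with friends today", "ok"),
    ("how to help a friend quit drugs", "ok"),
    ("delivery of the new sofa was late", "ok"),
]
model = NaiveBayes().fit([t for t, _ in TRAIN], [label for _, label in TRAIN])
```

With so little data the model is only illustrative; it would need many annotated examples, including prevention and harm-reduction conversations, before its predictions could be trusted.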

What actions should be taken with illegal traffic and trade related content

Illegal product sellers

If a user on the platform is actually selling illegal products, the case should be reported to the authorities.

Beyond reporting the user, the most basic action a platform or application can take when it encounters a mention of a dangerous product that could be illegally sold or bought is to remove the comment or content so that no one else sees it. It can also sanction the user by suspending their account, either temporarily or permanently.

Illegal product buyers

Platforms should also pay close attention to potential buyers of illegal products, who may be in distress or may pose a danger to others. The use of illegal products such as weapons or drugs can lead to serious consequences: injury, self-harm, overdose, addiction, and even suicide, accidental death or premeditated attacks.

Depending on the situation, platforms and applications should either provide the user with appropriate help (helpline numbers, contact details of support organizations, etc.) or report them to the authorities.

In any case, platforms and applications should pay close attention to comments mentioning dangerous products, especially if their users include minors, who are more vulnerable.
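The actions described in this section can be summarized as a small decision function. This is a hypothetical sketch: the `Role`, `Action` and `Case` names are ours, and so are the rules that a seller is only reported and suspended once a moderator has confirmed the sale, and that a buyer is reported only when someone appears to be in imminent danger (otherwise offered help). Real escalation rules depend on local law and platform policy.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Role(Enum):
    SELLER = auto()
    BUYER = auto()


class Action(Enum):
    REMOVE_CONTENT = auto()
    REPORT_TO_AUTHORITIES = auto()
    SUSPEND_ACCOUNT = auto()
    SHOW_HELP_RESOURCES = auto()
    PRIORITY_REVIEW = auto()


@dataclass
class Case:
    role: Role
    confirmed_illegal_sale: bool = False  # e.g. confirmed by a human moderator
    imminent_danger: bool = False  # signs of self-harm, overdose or a planned attack
    platform_has_minors: bool = False


def decide_actions(case: Case) -> list:
    """Map a detected case to an ordered list of moderation actions."""
    actions = [Action.REMOVE_CONTENT]  # nobody else should see the content
    if case.role is Role.SELLER:
        if case.confirmed_illegal_sale:
            actions += [Action.REPORT_TO_AUTHORITIES, Action.SUSPEND_ACCOUNT]
    elif case.imminent_danger:
        actions.append(Action.REPORT_TO_AUTHORITIES)
    else:
        actions.append(Action.SHOW_HELP_RESOURCES)  # helplines, support organizations
    if case.platform_has_minors:
        actions.append(Action.PRIORITY_REVIEW)  # vulnerable audience: review faster
    return actions
```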
