Do's and Don'ts of Content Moderation

Content moderation is an essential component of maintaining a healthy online community. It ensures a safe environment for users, prevents the spread of harmful content, and fosters positive experiences. As a platform or app owner, you can create a balanced environment that promotes freedom of expression while protecting users from inappropriate or harmful content. To help you navigate this complex task, we have compiled a list of do's and don'ts for effective content moderation.

Do's of Content Moderation

Moderate all types of content

Whether you run a platform, a marketplace, a dating app or a gaming community, make sure that every type of user-generated content is moderated. Text messages, images, videos, audio, live streams and even 3D objects can contain sexual, hateful or otherwise inappropriate content.

Set clear guidelines

Establishing clear, specific, and easy-to-understand community guidelines is crucial for effective moderation. This helps users know what is expected of them and makes it easier for moderators to enforce the rules. Guidelines should cover topics such as harassment, hate speech, spam, explicit content, and intellectual property.

Be Transparent and Consistent

Transparency in content moderation fosters trust between the platform and its users. Clearly communicate your moderation processes, decisions, and the reasons behind them. If content is removed or flagged, inform the user and provide an explanation for the action taken. Consistency is key to fair and effective content moderation. Ensure that all moderators are familiar with the community guidelines and apply them uniformly. Regularly review your moderation practices and make necessary adjustments to maintain a consistent approach.
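As an illustration of informing users about a decision, the following is a minimal sketch of a decision record and the notice generated from it. The class name, field names and message wording are all hypothetical and would need to match your own guidelines and tone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationDecision:
    """Hypothetical record of one moderation decision."""
    content_id: str
    action: str       # e.g. "removed" or "flagged"
    guideline: str    # the community guideline that was applied
    explanation: str  # short, human-readable reason


def build_user_notice(decision: ModerationDecision) -> str:
    """Turn a decision into a message that explains what happened and why."""
    return (
        f"Your content ({decision.content_id}) was {decision.action} "
        f"under our guideline on {decision.guideline}. "
        f"Reason: {decision.explanation}"
    )


decision = ModerationDecision(
    content_id="post-42",
    action="removed",
    guideline="spam",
    explanation="The post repeated the same promotional link.",
)
notice = build_user_notice(decision)
```

Storing the guideline alongside the action also makes it easier to audit whether moderators apply the rules uniformly.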

Prioritize user safety

Protecting users from harmful content should be a top priority. Develop and enforce policies that address issues such as cyberbullying, harassment, and hate speech. Use a combination of human moderators and automated tools to identify and remove harmful content promptly.
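One common way to combine automated tools with human moderators is to route content by an automated score: clear cases are handled automatically, and uncertain ones go to a person. The sketch below assumes a hypothetical `harm_score` between 0 and 1 produced by whatever automated tool you use; the threshold values are placeholders, not recommendations.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical cut-offs; tune them against your own data.
    review: float = 0.5
    remove: float = 0.9


def route(harm_score: float, thresholds: Thresholds = Thresholds()) -> Action:
    """Map an automated harm score in [0, 1] to a moderation action."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score >= thresholds.remove:
        return Action.REMOVE        # confident enough to act automatically
    if harm_score >= thresholds.review:
        return Action.HUMAN_REVIEW  # uncertain: send to a moderator
    return Action.APPROVE
```

Lowering the `review` threshold sends more content to humans, which improves safety at the cost of moderator workload.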

Encourage user reporting

Empower users to report inappropriate content by providing easy-to-use reporting tools. Encourage a culture of community involvement, where users feel comfortable flagging content that violates community guidelines.
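A reporting tool needs some logic behind it to decide when reported content reaches a moderator. The following is a minimal sketch, assuming an in-memory store and a hypothetical rule that escalates content once a given number of distinct users have reported it; a real system would persist reports and likely weigh them by severity.

```python
from collections import defaultdict


class ReportTracker:
    """Hypothetical in-memory tracker for user reports."""

    def __init__(self, escalate_after: int = 3):
        self.escalate_after = escalate_after
        # content_id -> {reporter_id: reason}
        self._reports = defaultdict(dict)

    def report(self, content_id: str, reporter_id: str, reason: str) -> bool:
        """Record a report and return True if the content should be escalated.

        Repeated reports from the same user overwrite the earlier one,
        so a single user cannot trigger escalation alone.
        """
        self._reports[content_id][reporter_id] = reason
        return len(self._reports[content_id]) >= self.escalate_after

    def reasons(self, content_id: str) -> list:
        """Return the reasons given for a piece of content, one per reporter."""
        return list(self._reports[content_id].values())
```

Counting distinct reporters rather than raw reports is a simple guard against one user flagging the same content repeatedly.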

Continuously Improve and Adapt

The digital landscape is ever-evolving, and content moderation must adapt to keep up. Regularly assess your moderation processes and make improvements as needed. Stay informed about new trends, technologies, and best practices to ensure your moderation efforts remain effective.

Respect User Privacy

Maintain user privacy by ensuring that moderators handle sensitive information responsibly. Develop guidelines on how to deal with personal information and establish secure channels for communication when handling such data. Use automated moderation for prefiltering, so that human moderators only see the content that actually needs review.
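One way to limit moderators' exposure to personal data is to mask it before content reaches the review queue. The sketch below masks email addresses only, using a deliberately simple regular expression; the function name and placeholder text are assumptions, and the pattern will not catch every address format or any other kind of personal information.

```python
import re

# Simplified pattern: local part, "@", then a dotted domain name.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def mask_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[email removed]", text)


masked = mask_emails("Write to jane.doe@example.com today.")
```

The original text should still be stored securely, so that it can be retrieved if a decision is appealed or required for legal reasons.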

Don'ts of Content Moderation

Don't wait

Get started with content moderation as early as possible, ideally at the earliest stages of your app or platform. It might not be a priority while you are focused on building your product and growing your audience, and it might feel far off in the future. But once usage starts to pick up, your platform could quickly become flooded with bad content, harming the user experience and putting you in a difficult position.

Plan ahead and prepare to handle such an increase.

Don't waste time and money

There is probably no need to "reinvent the wheel" and build internal tools and solutions for content moderation. You can leverage existing resources, existing tools and automated filters to keep things simple and efficient.

Don't Ignore Cultural Context

Content moderation is not one-size-fits-all. Be aware of cultural differences and context when moderating content, as what may be acceptable in one culture might be offensive in another. Train your moderation team to recognize and account for these differences.

Don't Disregard Legal and Regulatory Requirements

Stay informed about relevant laws and regulations in the regions where your platform operates. Failing to comply with these requirements can result in legal consequences and harm your platform's reputation.

Don't Be Reactive, Be Proactive

Effective content moderation requires proactive measures to prevent

Read more

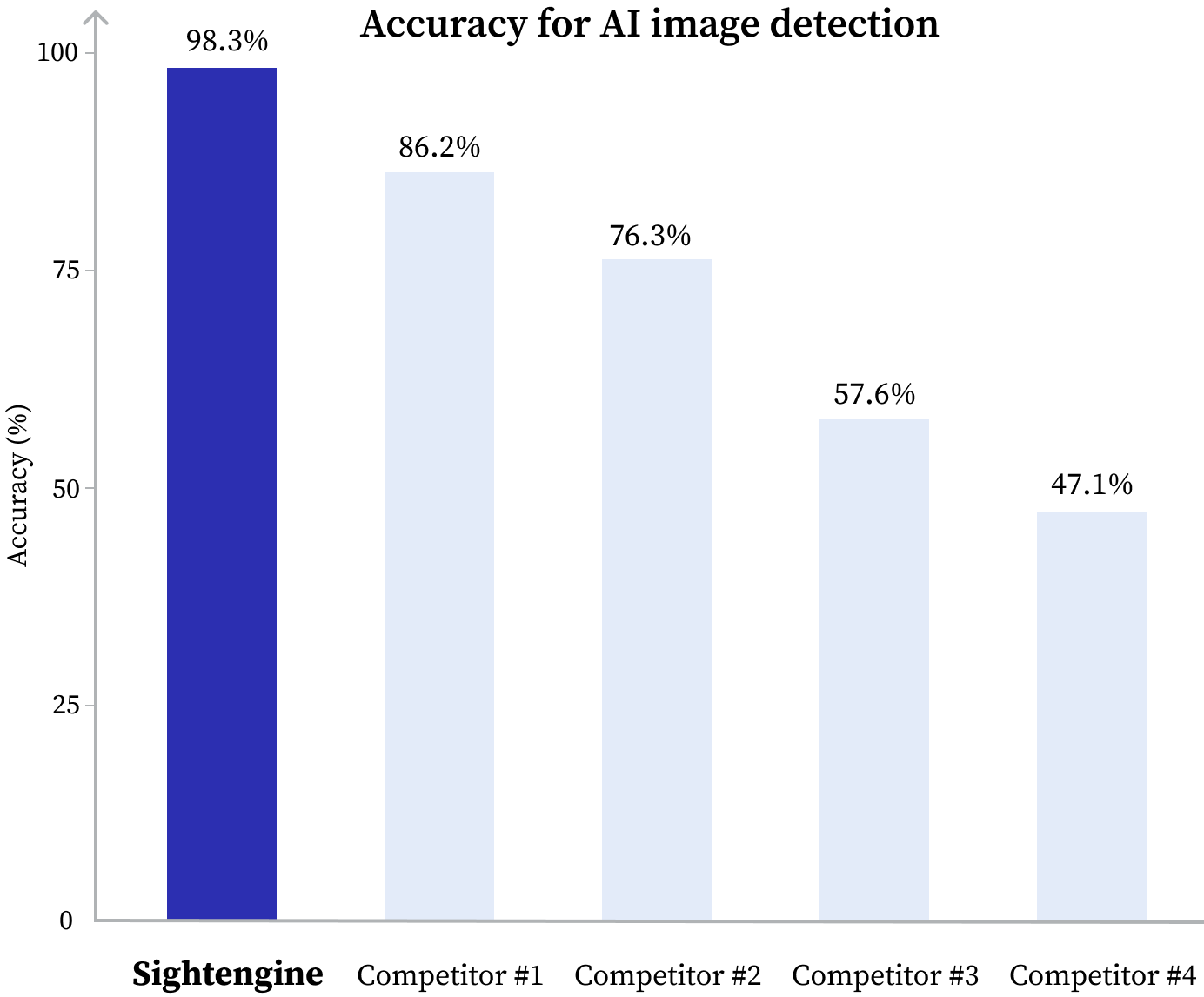

Sightengine Ranks #1 in AI-Media Detection Accuracy: Insights from an Independent Benchmark

Learn how Sightengine performed in an independent AI-media detection benchmark, outperforming competitors with advanced methodologies.

Self-harm and mental health — Content Moderation

This is a guide to detecting, moderating and handling self-harm, self-injury and suicide-related topics in texts and images.