The Ultimate Guide to GenAI Moderation — Part 2

Detecting and moderating AI-generated content with technology

GenAI presents a significant challenge for platforms and the Trust & Safety field. As we head into 2025, AI-generated content and detection advancements are poised to take center stage. This post is part of a two-part blog series, co-authored with our partner Checkstep, exploring innovative strategies and solutions to address emerging threats. Happy reading!

Table of contents

- Introduction

- Key technical challenges in identifying AI-generated content

- The technology behind AI-generated content detection and moderation

- Detecting and moderating GenAI content with Sightengine & Checkstep

- Conclusion

Introduction

As seen in Part 1 of this blog series, AI-generated images carry inherent risks related to misinformation, impersonation, and spam. Traditional detection methods designed to find common harms are not enough when it comes to such content, especially in specific contexts like world events (elections, natural disasters, wars, etc.) or more private issues (insurance fraud, AI nudes, etc.). The need for advanced AI-powered tools to detect and moderate GenAI content is increasing as AI generators become more efficient at producing very authentic-seeming content.

Key technical challenges in identifying AI-generated content

1. GenAI model improvements

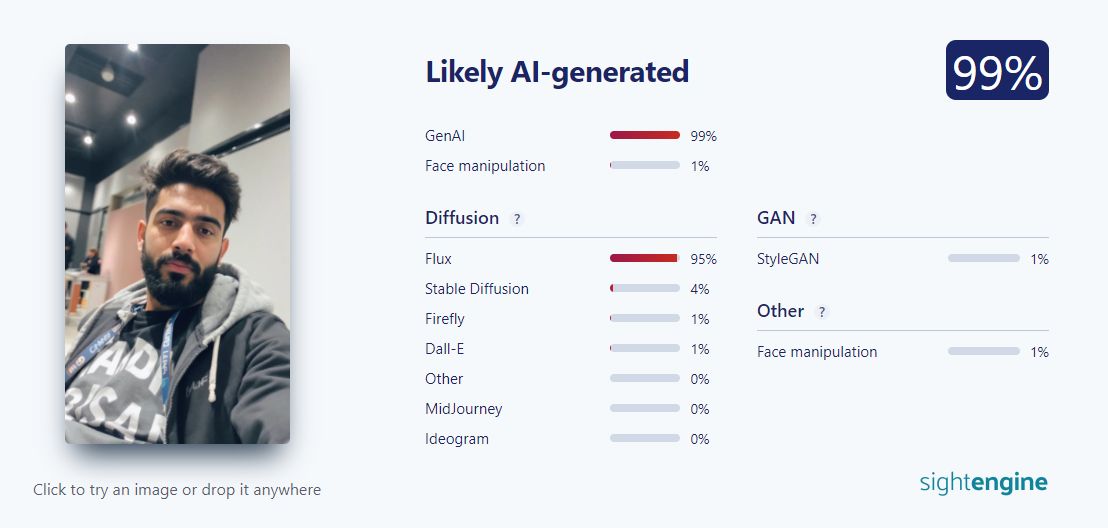

Source: Sightengine

The pace of advancement in AI models for generating realistic images is astonishingly rapid. Just a few years ago, AI-generated images were noticeably flawed, with signs like unnatural textures, strange facial features, or lighting inconsistencies directly identifiable by humans. Recent developments have led to models producing images so realistic that they are nearly indistinguishable from real photographs.

Key breakthroughs like Generative Adversarial Networks (GANs) and diffusion models have accelerated this shift. Models such as DALL-E, Midjourney, and Stable Diffusion have set new benchmarks, while others push boundaries in niche areas, like generating hyper-realistic human faces or photorealistic environments. This is the case for Flux 1.1 Pro, which came out just a few weeks ago and beats previous models in terms of realism. The selfie above, for instance, has been widely discussed: believe it or not, it is AI-generated!

Sometimes in just a few months, popular generators have improved their models impressively, incorporating advanced fine-tuning techniques and large datasets that help them replicate details like skin texture and lighting variation, as well as complex visual concepts like emotions or artistic styles. Midjourney is a good example of this evolution. The same prompt "photo close up of an old sleepy fisherman's face at night" was used to generate each of these images with different versions of Midjourney models (v5.0, March 2023; v5.2, June 2023; v6.0, December 2023; v6.1, July 2024):

Source: Sightengine

This rapid evolution poses a significant challenge for content moderation systems because they require constant updates and a proactive approach to make sure new emerging models do not bypass the existing moderation rules.

2. Human identification is not enough

Humans are generally not very good at identifying AI-generated content. To illustrate, let's look at Sightengine's "AI or not?" game. The goal is very simple: players see images we generated and simply vote for "AI" or "Not AI", testing their detection abilities and trying to reach the highest accuracy. According to the results, among 150 images both real and synthetic, 20% of them fooled most human participants. This number is very likely to increase over time as models get better at producing flawless, realistic images.

Even if humans were good at identifying AI, they'd be overwhelmed by its speed and volume. Scalability and real-time processing are critical challenges for content moderation systems on high-traffic platforms, especially when handling the detection of AI-generated content, as user-generated content volume grows and AI-generated media becomes more prevalent. A robust infrastructure, speed and best-in-class accuracy are essential to avoid the spread of AI-generated content and therefore the risk of misinformation, spam and impersonation.

The technology behind AI-generated content detection and moderation

1. GenAI detection technologies

1.1 AI watermarking

Watermarking techniques aim to mark content at the point of creation. Originally, this simply consisted of adding a visible sign, similar to a logo, to an image, but it is now common to find images with watermarks that are invisible to the naked eye (SynthID by Google, for example). Embedding a subtle, often imperceptible digital watermark within the media makes it possible to verify its real or synthetic origin.

While watermarking seems to be an easy solution to identify AI-generated content, there are some limitations too:

- Watermarks can in some cases be removed, either by simply cropping the image if the watermark is visible, or by using some open-source repositories sharing code to tamper with watermarks.

- Some media just do not contain any watermark, or at least any known watermarks, making watermark detection unscalable.

- People using GenAI models don't add any watermark to their creations as the goal is often to fool viewers.

1.2 Machine learning for pattern recognition

AI-generated images often contain subtle anomalies that differ from natural images. They may for instance contain distortions, artifacts or unrealistic light reflections, shadows, or textures.

These are common issues with AI content that are in some cases visible to humans. But AI models are getting better at generating very realistic images whose inconsistencies are almost invisible, as they generally exist only at the pixel level.

Models based on machine learning algorithms trained on large datasets of authentic and synthetic images can learn to spot such irregularities and are a much more scalable solution.

1.3 Additional behavioral analysis

Using tools to analyze user behavioral patterns is a good additional solution to identify AI-generated content, because such content is often associated with bot-like posting behavior, i.e. spammy behavior. Analyzing posting patterns (posting frequency, consistency or volume) might reveal user accounts deviating from typical user behavior.

Bot-like posting is also related to content duplication and repetition. Behavioral analysis algorithms can detect patterns of similarity across posts, signaling potential bot activity and potential use of GenAI content.
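The two behavioral signals described above (posting frequency and content repetition) can be illustrated with a minimal sketch. Everything in it is hypothetical: the function names, the one-hour window, and the thresholds are illustrative assumptions, not values recommended by Sightengine or Checkstep, and the "fingerprint" stands in for whatever duplicate-detection key a platform already computes.

```python
from collections import Counter
from datetime import datetime, timedelta


def posting_signals(posts, window=timedelta(hours=1)):
    """Compute simple behavioral signals for one account.

    posts: list of (timestamp, content_fingerprint) tuples.
    Returns the peak number of posts inside any sliding window and the
    share of posts that repeat an earlier fingerprint.
    """
    if not posts:
        return {"max_posts_in_window": 0, "duplicate_ratio": 0.0}

    times = sorted(t for t, _ in posts)
    max_in_window = 0
    start = 0
    for end, current in enumerate(times):
        # Shrink the window from the left until it spans at most `window`.
        while current - times[start] > window:
            start += 1
        max_in_window = max(max_in_window, end - start + 1)

    counts = Counter(fingerprint for _, fingerprint in posts)
    repeats = sum(count - 1 for count in counts.values())
    return {
        "max_posts_in_window": max_in_window,
        "duplicate_ratio": repeats / len(posts),
    }


def looks_bot_like(signals, max_rate=20, max_duplicate_ratio=0.5):
    """Hypothetical rule: flag accounts that post in bursts or repeat content."""
    return (
        signals["max_posts_in_window"] > max_rate
        or signals["duplicate_ratio"] > max_duplicate_ratio
    )
```

Such a rule is only a weak signal on its own. It is most useful when combined with the content-level detection described in the previous sections.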

Finally, the use of metadata providing additional context such as IP addresses or device information could help isolate specific sources of AI-generated content, but this method is sometimes limited, as some content imported onto platforms does not contain any metadata, making it impossible to know the original source.

2. Automation in GenAI moderation workflows

2.1 Decision-making and escalation

In moderation workflows using AI tools to detect GenAI, automated systems using one or more of the methods described above generally make a rapid initial decision, flagging the content before any other action is taken.

Automated systems often assign a "risk score" to the analyzed media. This score reflects the content's likelihood of being AI-generated or violating platform guidelines. Higher scores indicate higher-risk content, allowing systems to rank and prioritize content for moderation.

Depending on the implemented rules, when the media meets certain risk thresholds, the system may remove it or apply containment strategies, such as flagging or temporarily quarantining the content. Flagged content is set aside for review by either more advanced algorithms or human moderators, while quarantined content is kept out of public view until further inspection.
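As a rough illustration of the scoring and threshold logic described above, here is a minimal sketch. The threshold values and action names are hypothetical and would need to be tuned to each platform's policies; they do not come from Sightengine or Checkstep.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical risk thresholds; tune these to your own policies."""
    remove: float = 0.95
    quarantine: float = 0.80
    flag: float = 0.50


def route(score, thresholds=Thresholds()):
    """Map a risk score in [0, 1] to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= thresholds.remove:
        return "remove"
    if score >= thresholds.quarantine:
        return "quarantine"  # hidden from public view until reviewed
    if score >= thresholds.flag:
        return "flag_for_review"  # stays visible, queued for a second look
    return "allow"


def prioritize(items):
    """Order (content_id, score) pairs so the riskiest are reviewed first."""
    return sorted(items, key=lambda item: item[1], reverse=True)
```

For example, `route(0.85)` returns `"quarantine"` under these assumed thresholds, and `prioritize` produces the review queue order.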

This automated decision-making is also a way to reduce the initial load on human moderators by filtering out low-risk content or images that are undoubtedly AI, allowing moderators to focus on cases requiring nuanced judgment.

By focusing on high-risk cases, automated systems ensure that the most potentially harmful content is dealt with first. Prioritization and escalation prevent potentially harmful content from spreading before it can be reviewed and reduce the chance of high-impact content slipping through, providing an extra layer of protection for users and the platform.

2.2 Feedback loops

Feedback loops allow human moderators to provide critical input on flagged content, labeling it as AI-generated or authentic based on manual review and contextual checks. Such input may help the detection model learn and refine its understanding of complex or edge cases that may have been misclassified. It is invaluable for improving model accuracy, reducing the likelihood of false positives or negatives.
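One simple way to make use of moderator feedback is to compare the model's scores with the moderators' final labels and track the resulting error rates. The sketch below assumes a hypothetical list of `(model_score, moderator_says_ai)` pairs and a decision threshold of 0.5; neither is prescribed by the tools discussed here.

```python
def confusion_counts(reviews, threshold=0.5):
    """Count agreements and disagreements between model and moderators.

    reviews: list of (model_score, moderator_says_ai) pairs, where the
    moderator label is treated as ground truth.
    """
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for score, is_ai in reviews:
        predicted_ai = score >= threshold
        if predicted_ai and is_ai:
            counts["tp"] += 1
        elif predicted_ai and not is_ai:
            counts["fp"] += 1  # authentic content wrongly flagged as AI
        elif not predicted_ai and is_ai:
            counts["fn"] += 1  # AI content the model missed
        else:
            counts["tn"] += 1
    return counts


def error_rates(counts):
    """Return false positive and false negative rates (0.0 if undefined)."""
    negatives = counts["fp"] + counts["tn"]
    positives = counts["fn"] + counts["tp"]
    return {
        "false_positive_rate": counts["fp"] / negatives if negatives else 0.0,
        "false_negative_rate": counts["fn"] / positives if positives else 0.0,
    }
```

Tracking these rates over time shows whether threshold changes or model updates are actually reducing misclassifications, and the disagreeing cases are natural candidates for retraining data.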

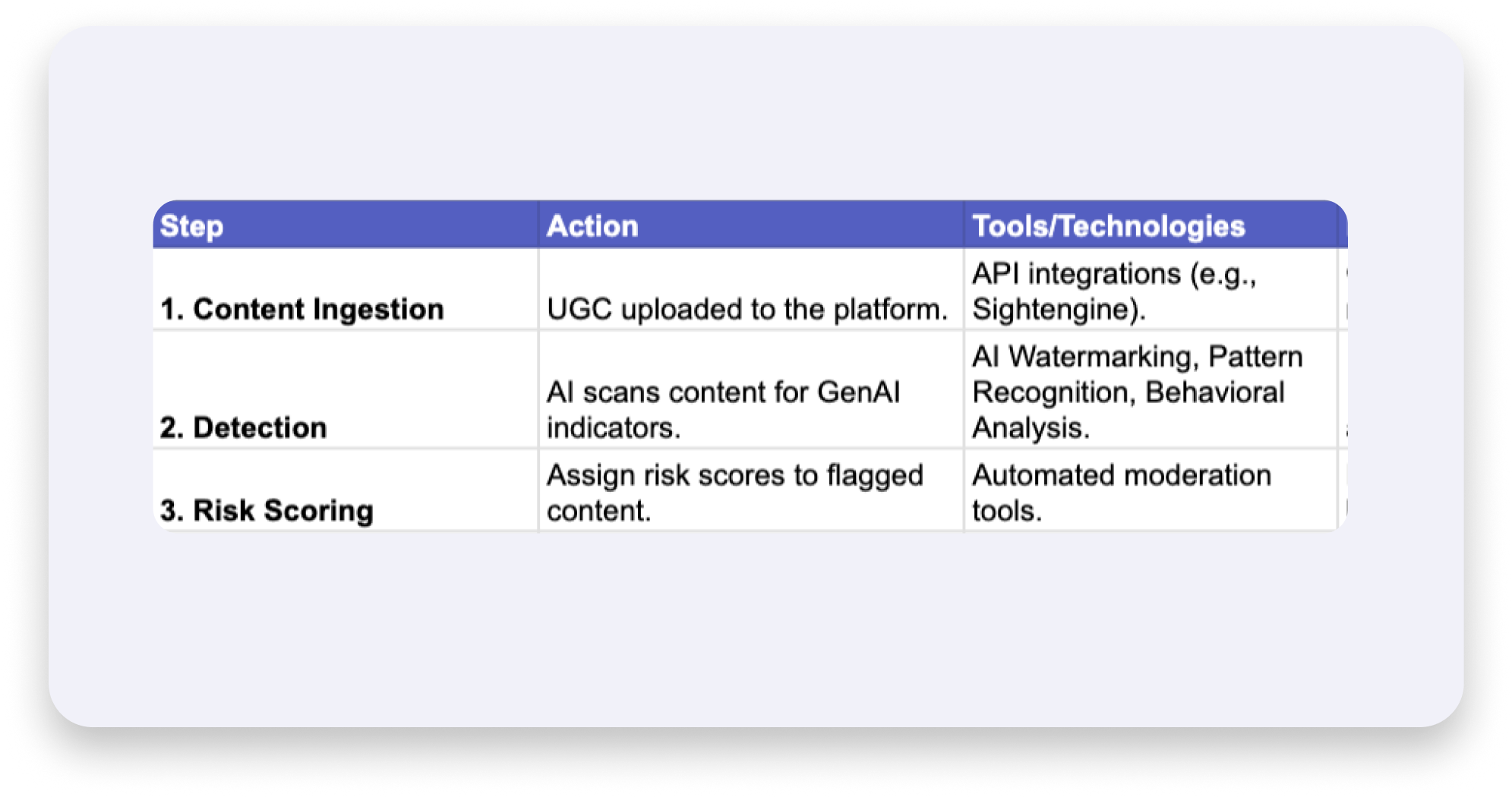

2.3 GenAI moderation workflow - TEMPLATE

Source: Checkstep & Sightengine

How to Use This Template:

- Customize the steps: Tailor the actions to your platform's unique needs (e.g., add specific GenAI detection models relevant to your content types).

- Define tools: List the tools you'll use for each step, such as Sightengine for detection and Checkstep for actionable moderation.

- Clarify outcomes: Specify what success looks like at each stage (e.g., reducing false positives, improving detection accuracy).

- Integrate feedback: Ensure feedback loops are built into your workflow to enhance moderation over time.

To access the template, you can download the complete guide by clicking here.

Detecting and moderating GenAI content with Sightengine & Checkstep

1. Sightengine's detector for AI-generated images and videos

Sightengine provides an API for image, video and text moderation. The team develops proprietary models to detect problematic content commonly found in UGC. The provided solution is very comprehensive, with 110 moderation classes across nudity, hate, violence, drugs, weapons, self-harm and more.

1.1 GenAI detection models

As GenAI is evolving and presenting its own risks (misinformation, impersonation, spam), it has become important to provide a solution to allow users and platforms to automatically identify:

- AI-generated images: this model helps detect the authenticity and the provenance of an image among the most popular generators (OpenAI, Midjourney, Bing Image Creator, Stable Diffusion, Flux, Ideogram, etc.).

- Deepfakes: this model is specialized in face manipulation and face swap detection. It is trained to analyze the outline and consistency of faces in a media, taking into account any alteration and ensuring authentic facial representations.

These two models do not take watermarking or metadata into account: they focus only on analyzing the visual content, i.e. the pixels, which makes them more scalable. Even the most difficult examples can be identified where humans cannot tell the difference. Take the monochromatic image below as an example: it is a very difficult case, impossible for a human to differentiate, containing uniform, uninterrupted blue with no features, textures, or variations whatsoever, and yet it is correctly identified as AI-generated by Midjourney with a 96% likelihood.

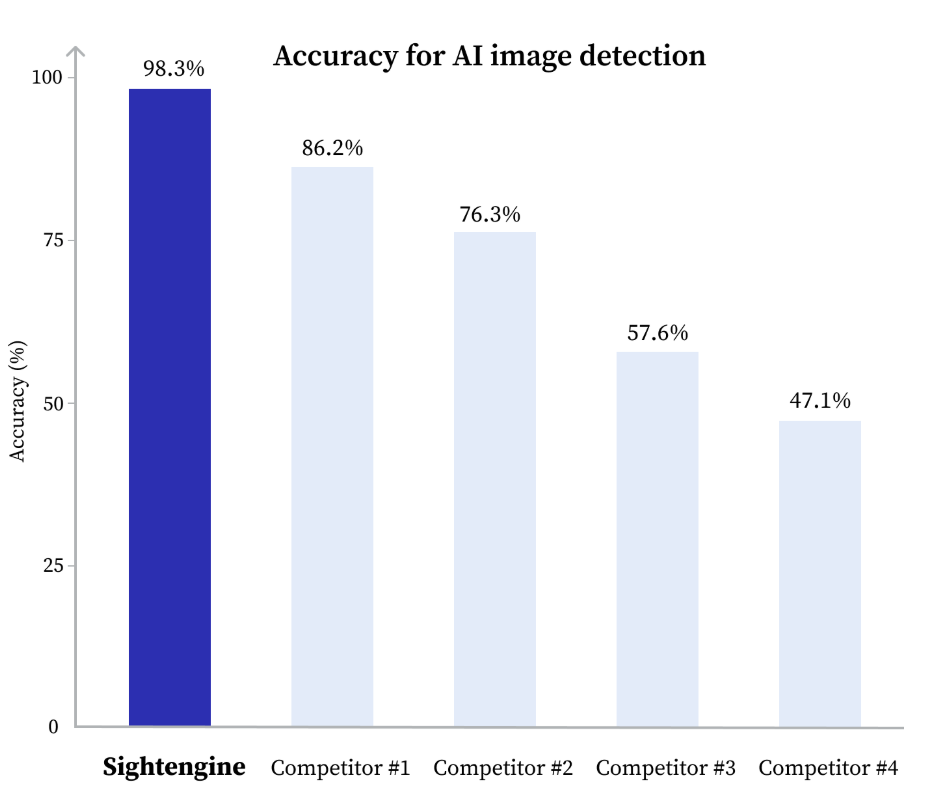

Source: Sightengine

Sightengine's models have a best-in-class accuracy for AI-generated media detection according to this external study by the University of Rochester and the University of Kansas that employed 80,000 real and synthetic images from various text-to-image generators. As accuracy is one of Sightengine's priorities, the team always proactively works to have updated models taking into account the most recent improvements.

1.2 Additional models

While GenAI detection models are useful to check the authenticity of an image, some other models may still be required to ensure safety.

Duplicate and near-duplicate detection models are useful for identifying images or videos that reflect spammy behavior, which is often associated with bot-like activity and the spread of AI-generated content.
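To give an intuition for near-duplicate detection, here is a minimal sketch of a classic technique, average hashing. This is not Sightengine's method, which is proprietary and not described here; it is a generic illustration. The sketch assumes the image has already been converted to a small grayscale grid (for example 8x8), and the distance threshold is a hypothetical value.

```python
def average_hash(pixels):
    """Hash a small grayscale grid: 1 where a pixel is above the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Number of positions where two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


def is_near_duplicate(pixels_a, pixels_b, max_distance=5):
    """Hypothetical rule: few differing bits means visually similar images."""
    distance = hamming_distance(average_hash(pixels_a), average_hash(pixels_b))
    return distance <= max_distance
```

Because the hash depends on each pixel's relation to the mean rather than on exact values, a uniformly brightened copy of an image yields the same hash, while an unrelated image typically differs in many bits.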

Any other moderation topic (nudity, hate, violence, weapons, drugs, self-harm, alcohol, etc.) can appear in AI images and videos and increase the risks associated with their spread. These are standard moderation categories that can also be handled by Sightengine's automated moderation.

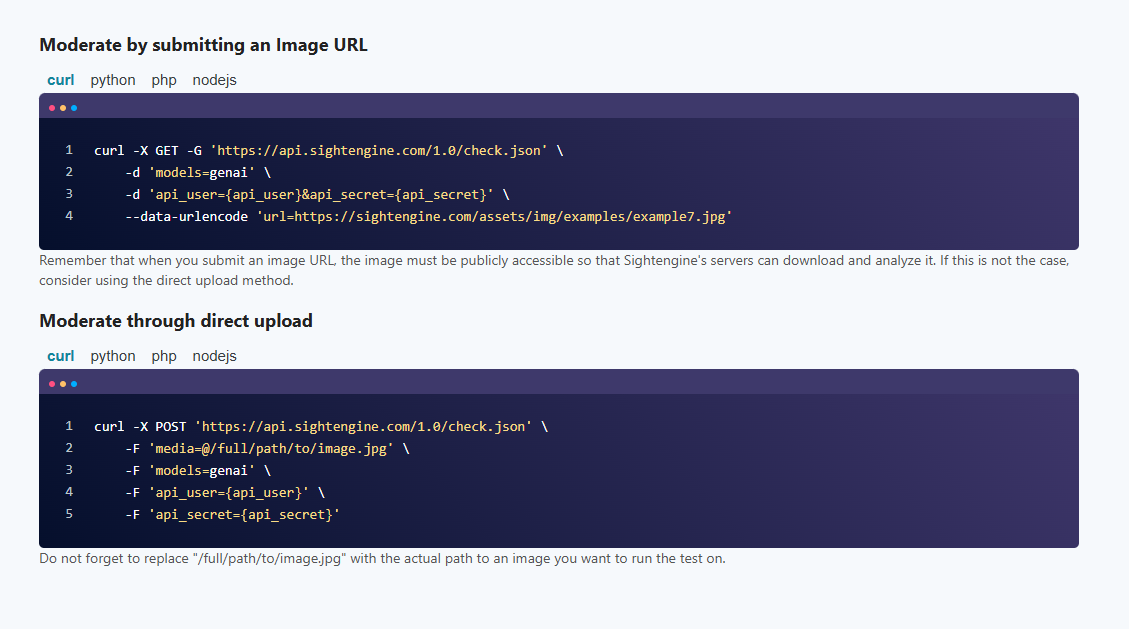

1.3 How Sightengine's API works

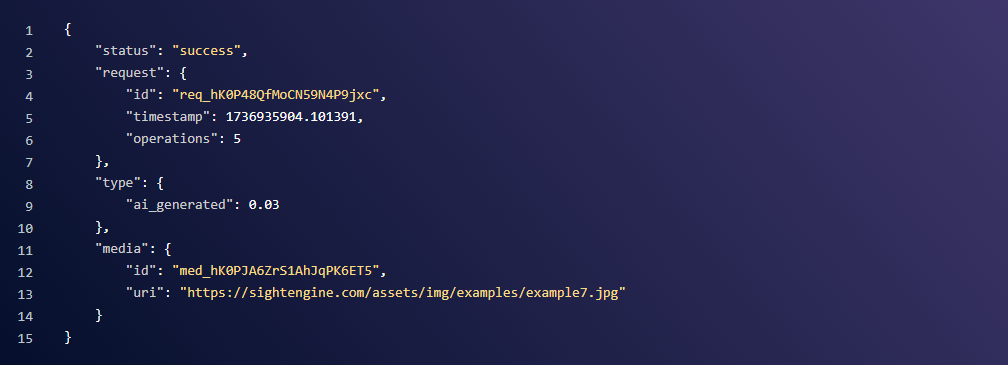

For GenAI and deepfake detection, the API works in a very simple way: a request is sent to the API, with the media to analyze and the moderation models to use, and an instant response is sent back with the associated results.

There are different ways for the API to receive the request:

- To freely test just a few images with the GenAI detection models and form an opinion without creating an account, people can use the AI image detector and the AI deepfake detector directly integrated on Sightengine's website.

- Regular users (individuals, platforms or solution providers like Checkstep, for instance) can use the self-service plans: create an account, get their API credentials and start using the models, either by dragging and dropping images into the same integrated interfaces as above, sending simple requests, or integrating Sightengine's solutions into their back end in a more complex way.

When using code to send requests, images can be moderated either by submitting their URL or by directly uploading them, indicating the image path. Let's say you want to check if an image is AI-generated.

Below are the two ways to send the request, using the genai model. If you wanted to check for face manipulation, the deepfake model would be the one to specify. Note that it is possible to specify as many models as needed in one same request, including traditional detection models (nudity-2.1 or weapon for instance). Keep in mind that using multiple models in a single request will consume more operations from your monthly allowance, and the number of operations included in a plan is limited.
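The two ways of sending a request can be sketched as follows using only the Python standard library. Only the model names (`genai`, `deepfake`) come from this article: the endpoint URL and the parameter names (`url`, `media`, `models`, `api_user`, `api_secret`) are assumptions that should be checked against Sightengine's official API documentation before use. The helper function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request
import uuid

# ASSUMPTION: endpoint and parameter names must be verified in the official docs.
API_ENDPOINT = "https://api.sightengine.com/1.0/check.json"


def build_url_request(image_url, models, api_user, api_secret):
    """Option 1: ask the API to fetch the image from a public URL."""
    params = {
        "url": image_url,
        "models": ",".join(models),
        "api_user": api_user,
        "api_secret": api_secret,
    }
    query = urllib.parse.urlencode(params)
    return urllib.request.Request(f"{API_ENDPOINT}?{query}", method="GET")


def build_upload_request(image_bytes, filename, models, api_user, api_secret):
    """Option 2: upload the image bytes as multipart/form-data."""
    boundary = uuid.uuid4().hex
    fields = {
        "models": ",".join(models),
        "api_user": api_user,
        "api_secret": api_secret,
    }
    parts = []
    for name, value in fields.items():
        parts.append(
            f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"'
            f"\r\n\r\n{value}\r\n".encode()
        )
    parts.append(
        f'--{boundary}\r\nContent-Disposition: form-data; name="media"; '
        f'filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n".encode()
        + image_bytes
        + b"\r\n"
    )
    parts.append(f"--{boundary}--\r\n".encode())
    headers = {"Content-Type": f"multipart/form-data; boundary={boundary}"}
    return urllib.request.Request(
        API_ENDPOINT, data=b"".join(parts), headers=headers, method="POST"
    )


def send(request, timeout=10):
    """Send a prepared request and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

To check for face manipulation as well, `models` would be `["genai", "deepfake"]`. In practice, an HTTP client library or an official SDK would likely replace the hand-built multipart body.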

The API results consist of a JSON response with the ai_generated and / or deepfake score, depending on the models specified in the request. This score is a float between 0 and 1: the higher the value, the higher the confidence that the image is AI-generated / contains a manipulated face.
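Reading the scores out of the response could look like the sketch below. The score names `ai_generated` and `deepfake` come from the description above, but their exact location in the JSON is an assumption: the sketch supposes they sit under a top-level `type` object, which should be confirmed against a real response. The function name is hypothetical.

```python
import json


def extract_scores(raw_json):
    """Return the GenAI and deepfake scores found in a JSON response body."""
    payload = json.loads(raw_json)
    # ASSUMPTION: scores are nested under a top-level "type" object.
    type_block = payload.get("type", {})
    scores = {}
    for key in ("ai_generated", "deepfake"):
        if key in type_block:
            score = float(type_block[key])
            if not 0.0 <= score <= 1.0:
                raise ValueError(f"{key} score out of range: {score}")
            scores[key] = score
    return scores


# Illustrative response body, not real API output.
sample = '{"status": "success", "type": {"ai_generated": 0.96}}'
scores = extract_scores(sample)
```

Only the scores for the models named in the request are expected to be present, so the function returns whichever of the two it finds.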

2. Integration with Checkstep: an actionable moderation

2.1 Two complementary solutions

As seen previously, Sightengine is a solution providing an API to detect potentially problematic content, whether it is AI-generated content, deepfakes or more traditional harms. Detection models are specialized in specific moderation categories and users get probability scores for each model they call.

Even though some thresholds are recommended to interpret the results, Sightengine's work stops here. It is then up to the user to take advantage of the scores obtained to implement moderation rules matching the platform's guidelines and to comply with regulations.

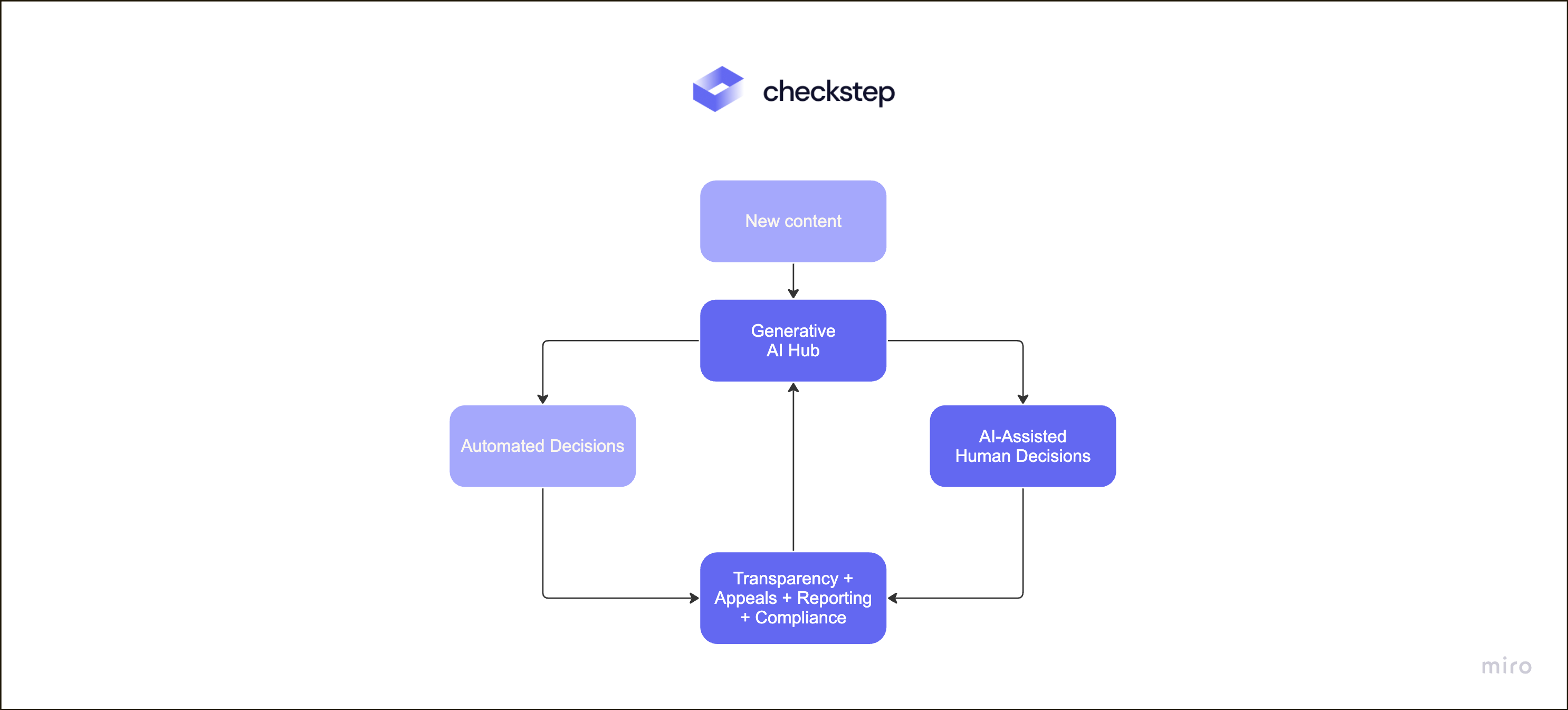

Checkstep facilitates this part by working with automated moderation providers like Sightengine, integrating their system into their platform and making detection results directly actionable. Users can easily manage their own policies from one place and configure what is allowed or not on their platform, essentially interpreting the automated detection results to drive actions and workflows.

Checkstep also provides unified insights and analytics about your content moderation actions and provides the ability to build appeal workflows (as required by the DSA) and manage other moderation triggers in one place.

2.2 Checkstep features

Checkstep allows customers to develop moderation workflows that get the most efficiency from their teams and to automate moderation decisions in accordance with the customer's unique policies and regulatory requirements. Checkstep's foundation is built around the customer's policy, where Checkstep captures the customer's guidelines for harmful content and develops rules and workflows to blend AI scanning results, AI decisions, and human teams of moderators.

With Checkstep, you can route different potential policy violations to make automated enforcement decisions or get human reviews (with information from AI-provided content scanning).

Checkstep has a simple content integration, where customers use the Checkstep API to send references to their content (with access delegation so that Checkstep never stores your data) and Checkstep creates content cases that are scanned with best-in-class AI tools like Sightengine and other providers.

Checkstep customers can run multiple AI scans with multiple providers at once so that they can scan (for example) an image against CSAM detection services and Sightengine's GenAI detection service and take actions based on multiple signals. Checkstep returns details about any potential violations back to the customer and, when moderation decisions are taken via automation or human review, sends back content decisions to remove content or ban a bad actor from the customer's site.
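Acting on multiple signals at once can be pictured with the generic sketch below. It does not represent Checkstep's API or rule engine: the signal names, thresholds, actions, and severity order are all hypothetical, chosen only to show how several scores can be reduced to a single decision.

```python
# Hypothetical actions, ordered from least to most severe.
SEVERITY = ["allow", "label", "human_review", "remove"]

# Hypothetical policy rules: (signal name, threshold, action when triggered).
RULES = [
    ("nudity", 0.90, "remove"),
    ("ai_generated", 0.90, "label"),
    ("deepfake", 0.80, "human_review"),
]


def decide(signals, rules=RULES):
    """Return the most severe triggered action and the signals behind it.

    signals: dict mapping a signal name to a score in [0, 1], possibly
    gathered from several detection providers. Missing signals count as 0.
    """
    action = "allow"
    triggered = []
    for name, threshold, rule_action in rules:
        if signals.get(name, 0.0) >= threshold:
            triggered.append(name)
            if SEVERITY.index(rule_action) > SEVERITY.index(action):
                action = rule_action
    return action, triggered
```

Keeping the list of triggered signals alongside the action makes it easier to explain a decision to the affected user later, for example during an appeal.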

After content moderation decisions are made, Checkstep also provides an easy-to-use transparency and compliance API that gives your users the power to tell you when your moderation got it wrong. For users who had content removed from a platform, Checkstep powers the ability to see the policy that the content violated and to handle appeals if the user disagrees with the moderation decision.

As part of the Digital Services Act (DSA) and as a best practice for user experience, it's necessary to give users transparency around why content is removed and to give those users the ability to appeal incorrect decisions.

When content is missed by the AI or human moderation, Checkstep also powers community reporting features to have content reviewed that was otherwise missed. Together, appeals and community reports become an important feedback loop to your human moderators and to your AI scanning tools. Checkstep unifies this data into a single-pane view for Trust & Safety leaders inspecting AI and human moderation.

Checkstep also augments sophisticated scanning providers like Sightengine by layering additional tools like flexible LLM prompts. With flexible LLM prompts, moderators and Trust & Safety leaders can develop new rules in natural language to quickly respond to emerging issues or new types of content abuse that their existing models have not been trained on. This gives unparalleled coverage to spot and respond to novel abuse with the best foundational models and incremental detection capabilities.

Source: Checkstep

3. Real-world applications

Platforms across various industries are already leveraging tools like Sightengine and Checkstep to effectively detect and moderate AI-generated content. Below are some notable use cases demonstrating how these technologies ensure content integrity and user trust:

| Use Case | Scenario | Solution | Impact |
|---|---|---|---|
| Social Media Platforms: Combating Misinformation and Impersonation | During elections, bad actors create AI-generated images of political figures engaging in fake activities to influence public opinion. | Platforms integrate Sightengine's GenAI detection models to analyze uploaded images for signs of AI-generation. Content flagged with a high-risk score is escalated to human moderators for a thorough review. Checkstep's actionable moderation enables immediate removal or labeling of misleading content. | By quickly identifying and neutralizing fake media, these platforms protect democratic processes and maintain user trust. |
| Dating Platforms: Preventing Impersonation Fraud | Fraudsters create AI-generated profile pictures and bios to impersonate attractive individuals, luring victims into scams or extracting sensitive information. | Sightengine's GenAI detection models analyze profile images to identify signs of AI generation. Behavioral analysis tools, integrated with Checkstep, flag accounts exhibiting unusual patterns, such as excessive messaging or requests for financial help. Platforms can then act by suspending fraudulent accounts and notifying users. | This proactive approach builds user confidence and significantly reduces the risk of impersonation fraud. |
| E-commerce Platforms: Combating Fraudulent Listings with GenAI Detection | Fraudulent sellers use AI to generate highly realistic images of products that do not exist, tricking buyers into making purchases or prepayments. | Sightengine's GenAI detection models analyze product images for signs of AI generation, such as pixel-level inconsistencies or unnatural features. Duplicate and near-duplicate detection models further identify patterns of repeated or spammy behavior, often associated with fraudulent accounts. Integrated with Checkstep, the system flags suspicious sellers and listings for immediate review, helping platforms enforce their policies effectively. | This layered approach ensures that fraudulent listings are identified and removed before they can harm buyers. It also safeguards platform credibility and fosters a trustworthy shopping environment. |
| Content Streaming Services: Preventing Deepfake Abuse | A user uploads a deepfake video featuring a celebrity without consent, violating platform policies. | Sightengine's deepfake detection model evaluates the video, assigning a high probability score for face manipulation. Checkstep integrates this output into its policy enforcement framework, ensuring swift action, such as removal of the video and notifying the uploader of policy violations. | These measures protect public figures and mitigate potential legal and reputational risks for the platform. |
| News and Media Platforms: Preserving Credibility | AI-generated images depicting fake natural disasters go viral, risking public panic. | Sightengine's AI-generated content detection API scans incoming media for authenticity. Checkstep provides a dashboard for editors to manage flagged content, ensuring only verified information reaches the audience. | This approach upholds the platform's credibility and ensures accurate information dissemination. |
| Gaming Communities: Preventing Harassment and Toxicity | A player uses AI tools to generate offensive memes targeting other users. | Sightengine's models detect harmful content categories such as hate speech or violence in images and videos. Checkstep's moderation framework enforces community guidelines by issuing warnings, suspensions, or bans. | By creating a safer environment, platforms enhance user experience and foster positive community engagement. |

These examples show that by combining advanced detection capabilities with actionable moderation workflows, platforms across sectors can address the challenges posed by AI-generated content, enhancing trust and safety for their users.

Conclusion

The growing prevalence of generative AI content has created new challenges for platforms, making robust moderation solutions essential. Technology plays a crucial role in addressing these risks, offering scalable, efficient tools for detecting and managing AI-generated content.

Solutions like Sightengine and Checkstep demonstrate how advanced AI tools combined with actionable workflows and regulatory compliance features can help platforms stay ahead of threats like misinformation, impersonation, and fraud. This also shows that collaboration across industries is necessary to standardize AI content detection and maintain shared detection resources across platforms.

By establishing clear, adaptable rules and leveraging moderation solutions that integrate transparency, cutting-edge AI tools, and user education, platforms can mitigate risks while ensuring content aligns with their guidelines and creating safer, more trustworthy environments.

If you want to know more about Sightengine and Checkstep, you can contact either team directly. We're happy to answer all your questions and assist you in any way to keep your platform safe!
