Sightengine Ranks #1 in AI-Media Detection Accuracy: Insights from an Independent Benchmark

Introduction

As AI-generated media continues to proliferate, the ability to accurately detect AI-generated content has become vital for industries like content moderation, social platforms, and news verification.

Recently, an independent benchmark conducted by researchers from the Universities of Kansas and Rochester evaluated various tools for detecting AI-generated media, including Sightengine.

Key points

- The benchmark assessed the performance of multiple state-of-the-art open-source and commercial AI media detection models and APIs.

- Five commercial detectors were tested, including Sightengine.

- We’re proud to share that Sightengine ranked #1 on this benchmark with 98.3% accuracy, 12.1 percentage points ahead of the next-best competitor (86.2%).

Benchmark methodology and results

The benchmark evaluated the performance of AI detection models using a rigorous and transparent methodology.

Datasets

The dataset, named ARIA (AdversaRIal AI-Art), was collected and shared by the researchers. It contains over 140K images, both real (human-made) and AI-generated.

The real images are diverse and representative of images that could be linked to social media fraud, fake news and misinformation, or unauthorized art style imitation. They come from datasets collected before 2022, to ensure that they are actually human-made.

Each AI image was generated to pair with a real image from the dataset, in order to minimize bias. The study used two generation modes: Text-to-Image (T2I) generation, based only on a text description of the real image, and (Image+Text)-to-Image (IT2I) generation, based on both the real image and its text description. Midjourney’s Discord bot, Dreamstudio’s API, Starry AI’s API and DALL-E 3 were used to produce the AI images.

For the evaluation on real images and T2I-generated images, the benchmark used 80K images from the ARIA dataset.

Evaluation of human detection

The researchers ran a survey asking participants to judge images as real or AI-generated and to report which visual clues helped them decide.

Participants' average accuracy was 63.35% on AI images and 79.85% on real images. These results indicate that spotting AI images is harder for humans than recognizing real ones.
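To make the two figures concrete, here is a minimal Python sketch of how per-class accuracy can be computed from survey answers. It is only an illustration: the function name, the label strings and the toy answers are our own assumptions, and the study may aggregate its results differently (for example, by averaging per participant).

```python
from collections import defaultdict


def per_class_accuracy(judgments):
    """Return accuracy per true label.

    judgments: iterable of (true_label, guessed_label) pairs,
    where each label is "ai" or "real" (hypothetical label names).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, guess in judgments:
        total[truth] += 1
        if guess == truth:
            correct[truth] += 1
    return {label: correct[label] / total[label] for label in total}


# Made-up toy answers, NOT data from the study.
toy_judgments = [
    ("ai", "ai"), ("ai", "real"), ("ai", "ai"), ("ai", "real"),
    ("real", "real"), ("real", "real"), ("real", "ai"), ("real", "real"),
]

acc = per_class_accuracy(toy_judgments)
print(acc)  # accuracy on AI images and on real images, reported separately
```

Reporting the two classes separately, as the study does, shows whether mistakes come mostly from missed AI images or from real images wrongly flagged as AI. A single overall accuracy would hide that difference.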

The visual clues that participants said helped them spot AI images were mostly texture and material anomalies, as well as anatomical errors. If you want to know more about the details that can help you tell real images from AI-generated ones, check out this blog post!

Evaluation of detectors

Top accuracy

The benchmark assessed the performance of multiple state-of-the-art open-source and commercial AI media detection models and APIs. Five commercial detectors were tested, including Sightengine.

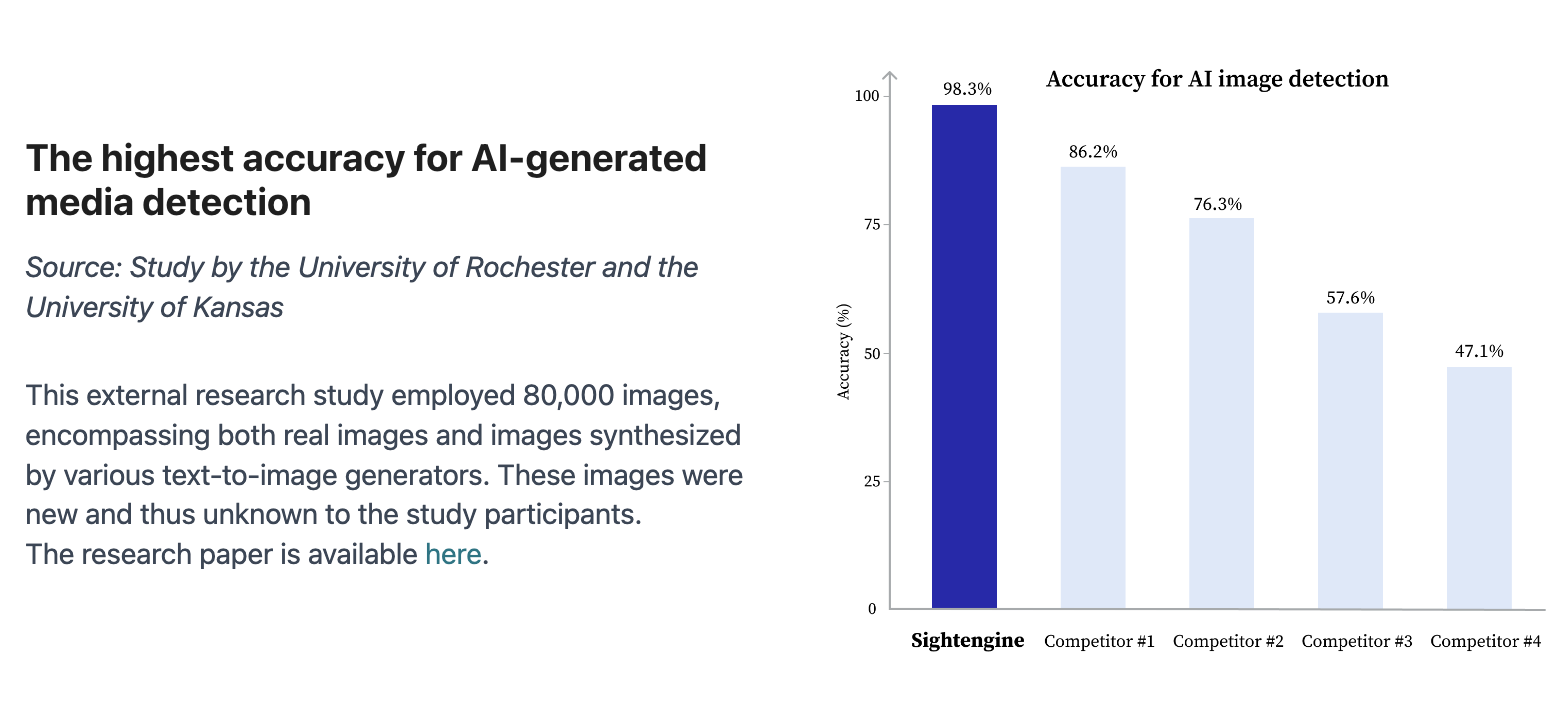

On real images and images generated by text-to-image generators, Sightengine achieves the highest score, with 98.3% accuracy, making it the most accurate solution in the benchmark for identifying AI-generated media.

The highest accuracy for AI-generated media detection

Other interesting results

The runner-up reaches 86.2%. The other competitors score between 47.1% and 76.3%.
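To put these scores in perspective, here is a short worked example in Python that uses only the accuracies quoted above. It assumes that all detectors were scored on the same image set, so that the error rate is simply 100 minus the accuracy. The variable names are ours, for illustration only.

```python
# Accuracies (in %) quoted in this article.
sightengine_acc = 98.3
runner_up_acc = 86.2
lowest_acc = 47.1

# Gap in percentage points between Sightengine and the other detectors.
gap_to_runner_up = round(sightengine_acc - runner_up_acc, 1)  # 12.1
gap_to_lowest = round(sightengine_acc - lowest_acc, 1)        # 51.2

# Error rates, assuming error = 100 - accuracy on the same image set.
sightengine_err = 100 - sightengine_acc
runner_up_err = 100 - runner_up_acc

# How many misclassified images the runner-up produces for each
# image misclassified by Sightengine.
error_ratio = round(runner_up_err / sightengine_err, 1)

print(gap_to_runner_up, gap_to_lowest, error_ratio)
```

Under that assumption, a 12.1-point accuracy gap means the runner-up misclassifies roughly eight times as many images.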

The benchmark also notes that open-source detectors give inconclusive results, with accuracies in the 70% range.

Conclusion

Sightengine’s top ranking in this independent benchmark solidifies our position as a leader in AI-media detection and as a provider of cutting-edge solutions for businesses and organizations looking to safeguard their platforms from manipulated or synthetic content.

As AI-generated media evolves, we remain committed to innovating and improving our detection technologies to meet the challenges of tomorrow.

To learn more about our AI detection product, see our documentation.
