Face Detection & Analysis

face-analysis

The Face Analysis model detects and analyzes faces in images and videos, providing insights on realness, position, obstruction, quality, angle, and filters.

Overview

The Face Analysis model is designed to detect faces in an image or video and provide additional insights, including:

- Realness: Determines whether the face belongs to a real human, or a human-like object / illustration

- Position: Identifies the face's coordinates and angle within the image

- Validation criteria: Checks for obstruction, quality issues or applied filters

This model can be used to validate profile pictures or assess face recognizability.

Real vs Artificial faces

These classes categorize faces as either real or artificial, considering only human-like faces. Animal faces are automatically disregarded.

For all detected faces, coordinates are provided. However, additional analysis (obstruction, quality, angle, filters) is applied only to real faces, helping to define image profile criteria.

- Real Faces: Real human faces, including those seen in reflections (e.g., mirrors) or within photos. Minor alterations from filters or post-processing are acceptable

faces

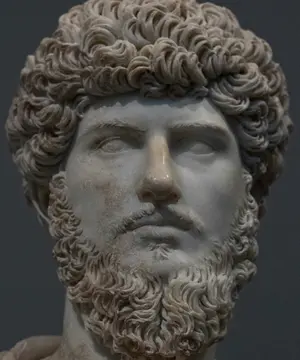

- Artificial Faces: Any artificially created face, whether as a representation (2D) or a physical object (3D)

artificial_faces

- 2D representations: Drawings, paintings, animations, 3D renders, obvious AI-generated faces, or real faces altered to appear artificial

- 3D objects: Statues, dolls, masks, mannequins, or figurines

Face Detection and Position

For all detected faces, coordinates define their position within the frame. These consist of two points forming a bounding box:

- (x1, y1): Top-left corner

- (x2, y2): Bottom-right corner

For real faces, additional major facial landmarks (eyes, nose tip, mouth corners) are also provided:

- left_eye

- right_eye

- nose_tip

- left_mouth_corner

- right_mouth_corner

If any landmark is obstructed, its position is approximated.

All coordinates are normalized between 0 and 1, relative to the image's width and height, where x increases from left to right and y increases from top to bottom.
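Because the coordinates are normalized, they usually need to be scaled by the image dimensions before cropping or drawing. The following is a minimal sketch of that conversion. The helper name `to_pixel_box` and the 800x600 image size are illustrative assumptions; the coordinate values are taken from the example response later on this page.

```python
def to_pixel_box(face, width, height):
    """Convert a face's normalized bounding box to integer pixel coordinates.

    x grows from left to right and y from top to bottom, so (x1, y1) is the
    top-left corner and (x2, y2) is the bottom-right corner.
    """
    left = round(face["x1"] * width)
    top = round(face["y1"] * height)
    right = round(face["x2"] * width)
    bottom = round(face["y2"] * height)
    return left, top, right, bottom


# Hypothetical 800x600 image; coordinates copied from the example response.
face = {"x1": 0.435, "y1": 0.2439, "x2": 0.5675, "y2": 0.4991}
print(to_pixel_box(face, 800, 600))
```

The same scaling applies to the landmark points (`left_eye`, `nose_tip`, and so on), which use the same normalized coordinate system.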

Obstruction classes

These classes quantify facial obstruction based on the visibility of key recognizable features (mouth, nose, eyes) rather than the overall percentage of coverage. Obstruction occurs when objects overlay the face or when parts of the face are out of frame.

Preliminary considerations:

- Hair and ears are not considered facial features and are excluded from obstruction analysis

- Properly worn real sunglasses are not classified as obstruction

- Features hidden due to head rotation are not considered obstructed since no object blocks them

- Distortions from pixelation, blurring, or filters do not count as obstruction

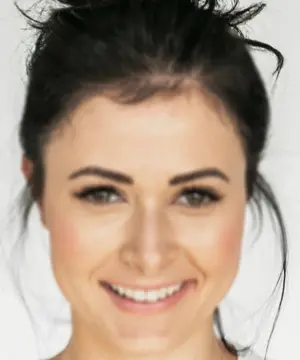

The following images illustrate cases where no obstruction is detected:

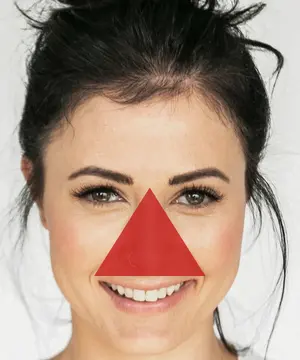

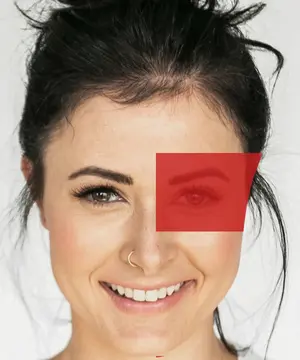

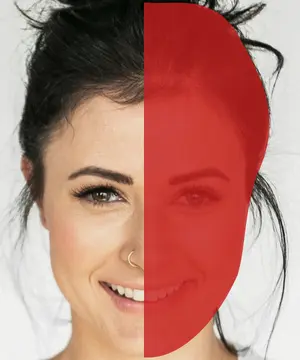

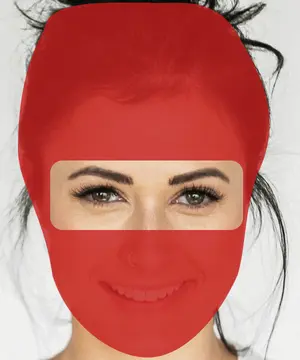

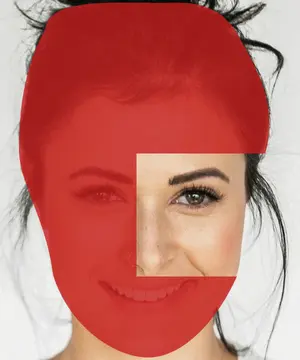

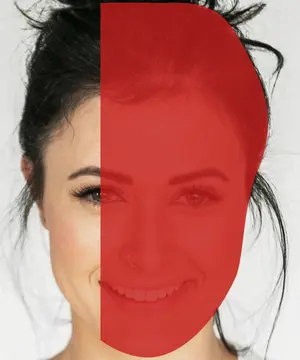

Obstruction is categorized into six levels of increasing severity. The red-highlighted areas in the images below indicate obstructed regions.

- No Obstruction: No facial features are covered, though hair and ears may be (e.g., bare head, hijab, turban)

faces.attributes.obstruction.none

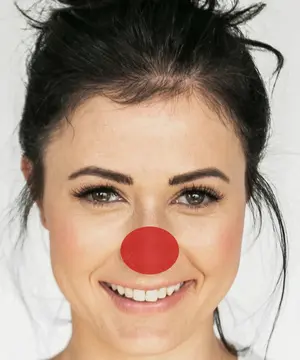

- Light Obstruction: Minor obstruction that does not significantly impact facial recognition. The cheeks or forehead may be fully covered, or there may be slight obstruction of the mouth or nose (e.g., clown nose, hands on cheeks, low-profile cap)

faces.attributes.obstruction.light

- Medium Obstruction: Partial obstruction of the eyes is possible, or one key facial feature (eye, mouth, or nose) is fully covered. Includes coverage of the periorbital region (e.g., domino mask, large nose cover, eye patch)

faces.attributes.obstruction.medium

- Heavy Obstruction: Significant obstruction affecting multiple facial features, making recognition difficult. Either the eyes and eyebrow bridge or both the mouth and nose are fully covered. Includes cases where roughly half the face is obstructed (e.g., surgical masks, blindfolds, masquerade half-masks)

faces.attributes.obstruction.heavy

- Extreme Obstruction: Most facial features are obstructed, making recognition very difficult or impossible. Classification is based on the remaining visible features: eyes and eyebrow bridge, eyes and mouth, or a single eye and nose (e.g., wrestling mask, niqab, balaclava, gas mask)

faces.attributes.obstruction.extreme

- Complete Obstruction: All facial features are entirely covered, preventing any facial recognition. Hair and ears may still be visible (e.g., opaque full-face mask)

faces.attributes.obstruction.complete
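Since the six levels are ordered by severity, one way to use them is to pick the most likely level and compare it against a maximum level you are willing to accept. The sketch below assumes each level carries a score between 0 and 1, as in the example response later on this page. The helper names and the choice of "light" as the cutoff are illustrative assumptions, not part of the API.

```python
# Severity order taken from the class list above, from least to most severe.
OBSTRUCTION_LEVELS = ["none", "light", "medium", "heavy", "extreme", "complete"]


def most_likely_level(scores):
    """Return the obstruction level with the highest score."""
    return max(OBSTRUCTION_LEVELS, key=lambda level: scores.get(level, 0.0))


def is_at_most(scores, max_level):
    """Check whether the most likely level is no more severe than max_level."""
    top = most_likely_level(scores)
    return OBSTRUCTION_LEVELS.index(top) <= OBSTRUCTION_LEVELS.index(max_level)


# Scores copied from the example response.
scores = {"complete": 0, "extreme": 0, "heavy": 0,
          "light": 0.335, "medium": 0.006, "none": 0.659}
print(most_likely_level(scores))
print(is_at_most(scores, "light"))
```

Taking the highest-scoring class is only one possible decision rule. A stricter application might instead require the combined score of the acceptable levels to exceed a threshold.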

Quality classes

These classes define the objective visual quality of a face, independent of artistic intent. Factors considered include lighting color and intensity, noise, blurring, and distortions caused by filters. A key criterion is facial recognizability, but face rotation and obstructions are not considered.

Faces are categorized into four quality levels, ranked from highest to lowest:

- Perfect Quality: The face is pristine, with no elements hindering recognition. Lighting appears natural (black-and-white images are accepted) and is neither too bright nor too dark. Minor brightness variations are acceptable as long as they do not create excessive contrast. No noticeable pixelation, blurring, or filter-induced distortion is present

faces.attributes.quality.perfect

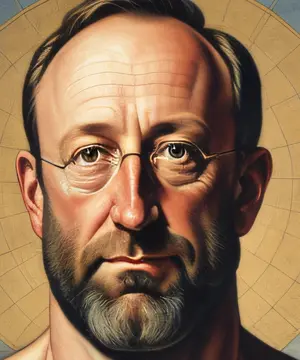

- High Quality: The face is high quality with minor flaws but remains fully recognizable. Lighting may have unusual coloration, be slightly too bright or dark, or show localized brightness variations. Slight pixelation, blurring, or subtle filters (e.g., blushing) may be present

faces.attributes.quality.high

- Medium Quality: The face is recognizable but has significant flaws affecting clarity. These include strong brightness or darkness covering major facial areas, noticeable pixelation or blurring, or slight feature distortions from filters (as long as the face retains human proportions)

faces.attributes.quality.medium

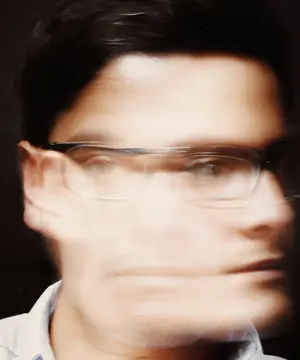

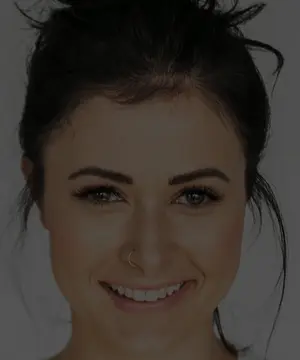

- Low Quality: The face is barely recognizable or unrecognizable due to severe flaws. Extreme brightness, darkness, pixelation, blurring, or heavy feature-distorting filters make identification highly difficult or impossible

faces.attributes.quality.low

Angle classes

These classes define the face's relative position to the viewer, based on expected eye visibility. Obstructions or visual quality issues (e.g., a blindfold) are not considered.

- Straight Angle: The face is mostly frontal, though it may be slightly slanted. Both eyes should be visible

faces.attributes.angle.straight

- Side Angle: The face is mostly in profile, with only one eye visible

faces.attributes.angle.side

- Back Angle: The face is seen from behind or from above, with no eyes visible

faces.attributes.angle.back

Filter classes

These classes indicate whether a face has post-processing overlays, referred to as filters.

- No Filters: No noticeable filters are applied to the face. Global adjustments (e.g., saturation changes, black-and-white, colored lighting) as well as subtle effects (e.g., beauty filters) are disregarded

faces.attributes.filters.false

- Filters On: Clearly visible filters are present on the face. They may be obstructive (e.g., fake glasses, masks, smileys, text), distorting (e.g., warping facial contours, cartoon-like alterations), or both

faces.attributes.filters.true

Sunglasses detection

The sunglasses class detects properly worn (i.e., on the nose) sunglasses on a face.

- Sunglasses: Properly worn sunglasses on a face

faces.sunglasses

- No sunglasses: Regular glasses and other protective eyewear (such as motorcycle helmets) are ignored by this class, but would count as obstruction. Faces wearing them are therefore not flagged as having sunglasses

In-scene presence detection

This category requires additional compute time and is therefore optional. To use it, add face-presence to the model list in your API request.

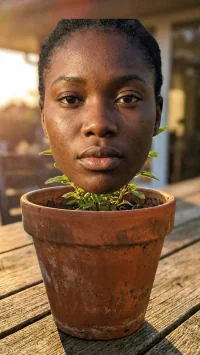

The presence classes indicate whether a detected face belongs to a person who is physically present within the photographed scene. This distinction helps separate actual subjects present in the environment from representations such as printed or digital media:

- in-scene: The face belongs to a person who is physically present in the scene.

faces.presence.in-scene

- Individuals captured directly in the environment.

- Reflections in natural reflective surfaces (e.g., mirrors, windows).

- not-in-scene: The face belongs to a person not physically present in the scene.

faces.presence.out-of-scene

- Faces on posters, signs, magazine or book covers.

- Faces displayed on digital screens (e.g., phones, TVs, monitors).

- Faces added as overlays or digitally inserted elements.

- If a non-present face obscures a present one (e.g., someone wearing a mask with another person's face), only the non-present face is annotated.

Prerequisites: These classes are only applicable to real-world photographs, not to digital montages, composites, or screenshots. Minor visual effects such as text overlays or color filters are acceptable. Other types of images may introduce ambiguity and are excluded to ensure consistent model output.
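As noted at the start of this section, presence detection is opt-in: face-presence has to be added to the model list of the request. The sketch below builds the comma-separated `models` value described in the request parameter tables further down. The helper name `build_models_param` is a hypothetical convenience, not part of any client library.

```python
def build_models_param(include_presence=False):
    """Build the comma-separated model list for the 'models' parameter."""
    models = ["face-analysis"]
    if include_presence:
        # Optional model: requires additional compute time.
        models.append("face-presence")
    return ",".join(models)


params = {
    "models": build_models_param(include_presence=True),
    "api_user": "{api_user}",
    "api_secret": "{api_secret}",
}
print(params["models"])
```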

Use the model (images)

If you haven't already, create an account to get your own API keys.

Profile picture analysis

Let's say you want to analyze the following image:

You can either share a URL to the image, or upload the image file.

Option 1: Send image URL

Here's how to proceed if you choose to share the image URL:

curl -X GET -G 'https://api.sightengine.com/1.0/check.json' \

-d 'models=face-analysis' \

-d 'api_user={api_user}&api_secret={api_secret}' \

--data-urlencode 'url=https://sightengine.com/assets/img/examples/example7.jpg'

# this example uses requests

import requests

import json

params = {

'url': 'https://sightengine.com/assets/img/examples/example7.jpg',

'models': 'face-analysis',

'api_user': '{api_user}',

'api_secret': '{api_secret}'

}

r = requests.get('https://api.sightengine.com/1.0/check.json', params=params)

output = json.loads(r.text)

$params = array(

'url' => 'https://sightengine.com/assets/img/examples/example7.jpg',

'models' => 'face-analysis',

'api_user' => '{api_user}',

'api_secret' => '{api_secret}',

);

// this example uses cURL

$ch = curl_init('https://api.sightengine.com/1.0/check.json?'.http_build_query($params));

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

$response = curl_exec($ch);

curl_close($ch);

$output = json_decode($response, true);

// this example uses axios

const axios = require('axios');

axios.get('https://api.sightengine.com/1.0/check.json', {

params: {

'url': 'https://sightengine.com/assets/img/examples/example7.jpg',

'models': 'face-analysis',

'api_user': '{api_user}',

'api_secret': '{api_secret}',

}

})

.then(function (response) {

// on success: handle response

console.log(response.data);

})

.catch(function (error) {

// handle error

if (error.response) console.log(error.response.data);

else console.log(error.message);

});

See request parameter description

| Parameter | Type | Description |

| url | string | URL of the image to analyze |

| models | string | comma-separated list of models to apply |

| api_user | string | your API user id |

| api_secret | string | your API secret |

Option 2: Send image file

Here's how to proceed if you choose to upload the image file:

curl -X POST 'https://api.sightengine.com/1.0/check.json' \

-F 'media=@/path/to/image.jpg' \

-F 'models=face-analysis' \

-F 'api_user={api_user}' \

-F 'api_secret={api_secret}'

# this example uses requests

import requests

import json

params = {

'models': 'face-analysis',

'api_user': '{api_user}',

'api_secret': '{api_secret}'

}

files = {'media': open('/path/to/image.jpg', 'rb')}

r = requests.post('https://api.sightengine.com/1.0/check.json', files=files, data=params)

output = json.loads(r.text)

$params = array(

'media' => new CurlFile('/path/to/image.jpg'),

'models' => 'face-analysis',

'api_user' => '{api_user}',

'api_secret' => '{api_secret}',

);

// this example uses cURL

$ch = curl_init('https://api.sightengine.com/1.0/check.json');

curl_setopt($ch, CURLOPT_POST, true);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

curl_setopt($ch, CURLOPT_POSTFIELDS, $params);

$response = curl_exec($ch);

curl_close($ch);

$output = json_decode($response, true);

// this example uses axios and form-data

const axios = require('axios');

const FormData = require('form-data');

const fs = require('fs');

const data = new FormData();

data.append('media', fs.createReadStream('/path/to/image.jpg'));

data.append('models', 'face-analysis');

data.append('api_user', '{api_user}');

data.append('api_secret', '{api_secret}');

axios({

method: 'post',

url:'https://api.sightengine.com/1.0/check.json',

data: data,

headers: data.getHeaders()

})

.then(function (response) {

// on success: handle response

console.log(response.data);

})

.catch(function (error) {

// handle error

if (error.response) console.log(error.response.data);

else console.log(error.message);

});

See request parameter description

| Parameter | Type | Description |

| media | file | image to analyze |

| models | string | comma-separated list of models to apply |

| api_user | string | your API user id |

| api_secret | string | your API secret |

API response

The API will then return a JSON response with the following structure:

{

"status": "success",

"request": {

"id": "req_gcTp4s63IAAni0lFOT7KK",

"timestamp": 1714997478.552115,

"operations": 1

},

"faces": [

{

"x1": 0.435,

"y1": 0.2439,

"x2": 0.5675,

"y2": 0.4991,

"features": {

"left_eye": {

"x": 0.5288,

"y": 0.334

},

"right_eye": {

"x": 0.4713,

"y": 0.3377

},

"nose_tip": {

"x": 0.5,

"y": 0.3677

},

"left_mouth_corner": {

"x": 0.5275,

"y": 0.4184

},

"right_mouth_corner": {

"x": 0.475,

"y": 0.4221

}

},

"attributes": {

"glasses": {

"sunglasses": 0.01,

"no_sunglasses": 0.99

},

"angle": {

"back": 0,

"side": 0.001,

"straight": 0.999

},

"filter": {

"false": 1,

"true": 0

},

"obstruction": {

"complete": 0,

"extreme": 0,

"heavy": 0,

"light": 0.335,

"medium": 0.006,

"none": 0.659

},

"quality": {

"high": 0.413,

"low": 0.001,

"medium": 0.001,

"perfect": 0.585

}

}

}

],

"artificial_faces": [],

"media": {

"id": "med_gcTpqyOZ18IMsiMe4Ar28",

"uri": "https://sightengine.com/assets/img/examples/example7.jpg"

}

}

Successful Response

Status code: 200, Content-Type: application/json

| Field | Type | Description |

| status | string | status of the request, either "success" or "failure" |

| request | object | information about the processed request |

| request.id | string | unique identifier of the request |

| request.timestamp | float | timestamp of the request in Unix time |

| request.operations | integer | number of operations consumed by the request |

| faces | array | real faces detected, each with its coordinates, landmarks, and attributes |

| artificial_faces | array | artificial faces detected |

| media | object | information about the media analyzed |

| media.id | string | unique identifier of the media |

| media.uri | string | URI of the media analyzed: either the URL or the filename |
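To illustrate how these fields can be combined for the profile picture use case, here is a minimal sketch that turns a response into a list of problems. The acceptance rules (exactly one real face, no artificial face, frontal angle, at most light obstruction, at least high quality, no filter) are illustrative assumptions to adapt to your own policy, and the function names are hypothetical. The field names follow the example response above.

```python
def top_class(scores):
    """Return the class name with the highest score in a score dictionary."""
    return max(scores, key=scores.get)


def validate_profile_picture(output):
    """Return a list of problems; an empty list means the image passes.

    The acceptance rules below are illustrative assumptions, not API rules.
    """
    if output.get("status") != "success":
        return ["request did not succeed"]

    problems = []
    if output.get("artificial_faces"):
        problems.append("artificial face detected")

    faces = output.get("faces", [])
    if len(faces) != 1:
        problems.append(f"expected exactly one real face, found {len(faces)}")
        return problems

    attributes = faces[0]["attributes"]
    if top_class(attributes["angle"]) != "straight":
        problems.append("face is not frontal")
    if top_class(attributes["obstruction"]) not in ("none", "light"):
        problems.append("face is too obstructed")
    if top_class(attributes["quality"]) not in ("perfect", "high"):
        problems.append("face quality is too low")
    if top_class(attributes["filter"]) == "true":
        problems.append("a filter is applied")
    return problems


# Abbreviated copy of the example response shown above.
example_output = {
    "status": "success",
    "faces": [{
        "x1": 0.435, "y1": 0.2439, "x2": 0.5675, "y2": 0.4991,
        "attributes": {
            "angle": {"back": 0, "side": 0.001, "straight": 0.999},
            "filter": {"false": 1, "true": 0},
            "obstruction": {"complete": 0, "extreme": 0, "heavy": 0,
                            "light": 0.335, "medium": 0.006, "none": 0.659},
            "quality": {"high": 0.413, "low": 0.001,
                        "medium": 0.001, "perfect": 0.585},
        },
    }],
    "artificial_faces": [],
}

print(validate_profile_picture(example_output))
```

Returning a list of problems rather than a single boolean makes it easier to tell users why an upload was rejected.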

Error

Status codes: 4xx and 5xx. See how error responses are structured.

Use the model (videos)

Face analysis in videos

Option 1: Short video

Here's how to proceed to analyze a short video (less than 1 minute):

curl -X POST 'https://api.sightengine.com/1.0/video/check-sync.json' \

-F 'media=@/path/to/video.mp4' \

-F 'models=face-analysis' \

-F 'api_user={api_user}' \

-F 'api_secret={api_secret}'

# this example uses requests

import requests

import json

params = {

# specify the models you want to apply

'models': 'face-analysis',

'api_user': '{api_user}',

'api_secret': '{api_secret}'

}

files = {'media': open('/path/to/video.mp4', 'rb')}

r = requests.post('https://api.sightengine.com/1.0/video/check-sync.json', files=files, data=params)

output = json.loads(r.text)

$params = array(

'media' => new CurlFile('/path/to/video.mp4'),

// specify the models you want to apply

'models' => 'face-analysis',

'api_user' => '{api_user}',

'api_secret' => '{api_secret}',

);

// this example uses cURL

$ch = curl_init('https://api.sightengine.com/1.0/video/check-sync.json');

curl_setopt($ch, CURLOPT_POST, true);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

curl_setopt($ch, CURLOPT_POSTFIELDS, $params);

$response = curl_exec($ch);

curl_close($ch);

$output = json_decode($response, true);

// this example uses axios and form-data

const axios = require('axios');

const FormData = require('form-data');

const fs = require('fs');

const data = new FormData();

data.append('media', fs.createReadStream('/path/to/video.mp4'));

// specify the models you want to apply

data.append('models', 'face-analysis');

data.append('api_user', '{api_user}');

data.append('api_secret', '{api_secret}');

axios({

method: 'post',

url:'https://api.sightengine.com/1.0/video/check-sync.json',

data: data,

headers: data.getHeaders()

})

.then(function (response) {

// on success: handle response

console.log(response.data);

})

.catch(function (error) {

// handle error

if (error.response) console.log(error.response.data);

else console.log(error.message);

});

See request parameter description

| Parameter | Type | Description |

| media | file | video to analyze |

| models | string | comma-separated list of models to apply |

| interval | float | frame interval in seconds, out of 0.5, 1, 2, 3, 4, 5 (optional) |

| api_user | string | your API user id |

| api_secret | string | your API secret |
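The optional `interval` parameter only accepts the values listed in the table, so it can be worth checking it on the client side before sending a request. The sketch below does that while building the form fields. The helper name `build_video_params` is hypothetical, and the client-side check is a convenience assumption rather than documented API behavior.

```python
# Allowed values copied from the parameter table above.
ALLOWED_INTERVALS = (0.5, 1, 2, 3, 4, 5)


def build_video_params(models, interval=None):
    """Build the form fields for a video request, validating 'interval'."""
    params = {
        "models": ",".join(models),
        "api_user": "{api_user}",
        "api_secret": "{api_secret}",
    }
    if interval is not None:
        if interval not in ALLOWED_INTERVALS:
            raise ValueError(
                f"interval must be one of {ALLOWED_INTERVALS}, got {interval}"
            )
        params["interval"] = interval
    return params


print(build_video_params(["face-analysis"], interval=2))
```

The resulting dictionary can be passed as `data=params` to the `requests.post` call shown in the Python example above.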

Option 2: Long video

Here's how to proceed to analyze a long video. Note that if the video file is very large, you might first need to upload it through the Upload API.

curl -X POST 'https://api.sightengine.com/1.0/video/check.json' \

-F 'media=@/path/to/video.mp4' \

-F 'models=face-analysis' \

-F 'callback_url=https://yourcallback/path' \

-F 'api_user={api_user}' \

-F 'api_secret={api_secret}'

# this example uses requests

import requests

import json

params = {

# specify the models you want to apply

'models': 'face-analysis',

# specify where you want to receive result callbacks

'callback_url': 'https://yourcallback/path',

'api_user': '{api_user}',

'api_secret': '{api_secret}'

}

files = {'media': open('/path/to/video.mp4', 'rb')}

r = requests.post('https://api.sightengine.com/1.0/video/check.json', files=files, data=params)

output = json.loads(r.text)

$params = array(

'media' => new CurlFile('/path/to/video.mp4'),

// specify the models you want to apply

'models' => 'face-analysis',

// specify where you want to receive result callbacks

'callback_url' => 'https://yourcallback/path',

'api_user' => '{api_user}',

'api_secret' => '{api_secret}',

);

// this example uses cURL

$ch = curl_init('https://api.sightengine.com/1.0/video/check.json');

curl_setopt($ch, CURLOPT_POST, true);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

curl_setopt($ch, CURLOPT_POSTFIELDS, $params);

$response = curl_exec($ch);

curl_close($ch);

$output = json_decode($response, true);

// this example uses axios and form-data

const axios = require('axios');

const FormData = require('form-data');

const fs = require('fs');

const data = new FormData();

data.append('media', fs.createReadStream('/path/to/video.mp4'));

// specify the models you want to apply

data.append('models', 'face-analysis');

// specify where you want to receive result callbacks

data.append('callback_url', 'https://yourcallback/path');

data.append('api_user', '{api_user}');

data.append('api_secret', '{api_secret}');

axios({

method: 'post',

url:'https://api.sightengine.com/1.0/video/check.json',

data: data,

headers: data.getHeaders()

})

.then(function (response) {

// on success: handle response

console.log(response.data);

})

.catch(function (error) {

// handle error

if (error.response) console.log(error.response.data);

else console.log(error.message);

});

See request parameter description

| Parameter | Type | Description |

| media | file | video to analyze |

| callback_url | string | callback URL to receive moderation updates (optional) |

| models | string | comma-separated list of models to apply |

| interval | float | frame interval in seconds, out of 0.5, 1, 2, 3, 4, 5 (optional) |

| api_user | string | your API user id |

| api_secret | string | your API secret |

Option 3: Live-stream

Here's how to proceed to analyze a live-stream:

curl -X GET -G 'https://api.sightengine.com/1.0/video/check.json' \

--data-urlencode 'stream_url=https://domain.tld/path/video.m3u8' \

-d 'models=face-analysis' \

-d 'callback_url=https://your.callback.url/path' \

-d 'api_user={api_user}' \

-d 'api_secret={api_secret}'

# this example uses requests

import requests

import json

params = {

'stream_url': 'https://domain.tld/path/video.m3u8',

# specify the models you want to apply

'models': 'face-analysis',

# specify where you want to receive result callbacks

'callback_url': 'https://your.callback.url/path',

'api_user': '{api_user}',

'api_secret': '{api_secret}'

}

r = requests.post('https://api.sightengine.com/1.0/video/check.json', data=params)

output = json.loads(r.text)

$params = array(

'stream_url' => 'https://domain.tld/path/video.m3u8',

// specify the models you want to apply

'models' => 'face-analysis',

// specify where you want to receive result callbacks

'callback_url' => 'https://your.callback.url/path',

'api_user' => '{api_user}',

'api_secret' => '{api_secret}',

);

// this example uses cURL

$ch = curl_init('https://api.sightengine.com/1.0/video/check.json');

curl_setopt($ch, CURLOPT_POST, true);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

curl_setopt($ch, CURLOPT_POSTFIELDS, $params);

$response = curl_exec($ch);

curl_close($ch);

$output = json_decode($response, true);

// this example uses axios and form-data

const axios = require('axios');

const FormData = require('form-data');

const fs = require('fs');

const data = new FormData();

data.append('stream_url', 'https://domain.tld/path/video.m3u8');

// specify the models you want to apply

data.append('models', 'face-analysis');

// specify where you want to receive result callbacks

data.append('callback_url', 'https://your.callback.url/path');

data.append('api_user', '{api_user}');

data.append('api_secret', '{api_secret}');

axios({

method: 'post',

url:'https://api.sightengine.com/1.0/video/check.json',

data: data,

headers: data.getHeaders()

})

.then(function (response) {

// on success: handle response

console.log(response.data);

})

.catch(function (error) {

// handle error

if (error.response) console.log(error.response.data);

else console.log(error.message);

});

See request parameter description

| Parameter | Type | Description |

| stream_url | string | URL of the video stream |

| callback_url | string | callback URL to receive moderation updates (optional) |

| models | string | comma-separated list of models to apply |

| interval | float | frame interval in seconds, out of 0.5, 1, 2, 3, 4, 5 (optional) |

| api_user | string | your API user id |

| api_secret | string | your API secret |

Moderation result

The moderation result is provided either directly in the request response (for sync calls, see below) or through the callback URL you provided (for async calls).

Here is the structure of the JSON response with moderation results for each analyzed frame under the data.frames array:

{

"status": "success",

"request": {

"id": "req_gmgHNy8oP6nvXYaJVLq9n",

"timestamp": 1717159864.348989,

"operations": 21

},

"data": {

"frames": [

{

"info": {

"id": "med_gmgHcUOwe41rWmqwPhVNU_1",

"position": 0

},

"faces": [

{

"x1": 0.435,

"y1": 0.2439,

"x2": 0.5675,

"y2": 0.4991,

"features": {

"left_eye": {

"x": 0.5288,

"y": 0.334

},

"right_eye": {

"x": 0.4713,

"y": 0.3377

},

"nose_tip": {

"x": 0.5,

"y": 0.3677

},

"left_mouth_corner": {

"x": 0.5275,

"y": 0.4184

},

"right_mouth_corner": {

"x": 0.475,

"y": 0.4221

}

},

"attributes": {

"glasses": {

"sunglasses": 0.01,

"no_sunglasses": 0.99

},

"angle": {

"back": 0,

"side": 0.001,

"straight": 0.999

},

"filter": {

"false": 1,

"true": 0

},

"obstruction": {

"complete": 0,

"extreme": 0,

"heavy": 0,

"light": 0.335,

"medium": 0.006,

"none": 0.659

},

"quality": {

"high": 0.413,

"low": 0.001,

"medium": 0.001,

"perfect": 0.585

}

}

}

],

"artificial_faces": []

},

...

]

},

"media": {

"id": "med_gmgHcUOwe41rWmqwPhVNU",

"uri": "yourfile.mp4"

}

}
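A result with this structure can be reduced to a per-frame summary by walking the data.frames array. The following is a minimal sketch assuming the field names shown in the response above. The function name `summarize_frames` and the shape of the summary are illustrative choices, and the sample data is an abbreviated, hypothetical single-frame result.

```python
def summarize_frames(output):
    """Count real and artificial faces for each analyzed frame."""
    summary = []
    for frame in output["data"]["frames"]:
        summary.append({
            "position": frame["info"]["position"],
            "real_faces": len(frame.get("faces", [])),
            "artificial_faces": len(frame.get("artificial_faces", [])),
        })
    return summary


# Abbreviated single-frame result following the structure above.
example_output = {
    "status": "success",
    "data": {
        "frames": [
            {
                "info": {"id": "med_gmgHcUOwe41rWmqwPhVNU_1", "position": 0},
                "faces": [{"x1": 0.435, "y1": 0.2439,
                           "x2": 0.5675, "y2": 0.4991}],
                "artificial_faces": [],
            }
        ]
    },
}

print(summarize_frames(example_output))
```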