Face attribute detection

Model: face-attributes (deprecated)

Overview

The Face Attributes Detection model determines whether an image contains faces and returns descriptive attributes for those faces (face landmarks, presence of sunglasses, etc.).

Face Detection

Face Detection is designed to be efficient and robust, with high precision and recall. It supports faces of different sizes and arbitrary orientations, as well as partially occluded faces, such as faces with sunglasses, hats or sanitary masks.

Use-cases

- Detect people or faces in images or videos

- Force users to upload pictures of their face

- Prevent users from uploading group pictures

- Group or classify your images/videos

To help you locate each face, the API returns the corners of the box that contains it, as x1, y1, x2 and y2 values.
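The coordinates in the example response further down lie between 0 and 1. The sketch below assumes they are fractions of the image width (x) and height (y), with (x1, y1) as the top-left corner and (x2, y2) as the bottom-right corner, and converts a box to pixel coordinates. The function name `box_to_pixels` is hypothetical, not part of the API.

```python
def box_to_pixels(face, width, height):
    """Convert a face box from relative (0-1) coordinates to integer pixels.

    Assumption: x values are fractions of the image width and y values are
    fractions of the image height.
    """
    left = round(face["x1"] * width)
    top = round(face["y1"] * height)
    right = round(face["x2"] * width)
    bottom = round(face["y2"] * height)
    return left, top, right, bottom


# Box values taken from the example API response, for a 1000x800 image
face = {"x1": 0.5121, "y1": 0.1879, "x2": 0.6926, "y2": 0.6265}
print(box_to_pixels(face, 1000, 800))  # (512, 150, 693, 501)
```

The returned tuple follows the (left, upper, right, lower) order that Pillow's `Image.crop()` expects, so it can be passed to it directly to crop the face.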

Face Features / Face Landmarks

For each detected face, the positions of the main face features are returned. Face features include:

- Left and right eyes

- Left and right mouth corners

- Nose tip

Use-cases

- Apply masks, transforms or other augmented-reality elements on faces

- Adjust face crops based on the relative locations of key face landmarks
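As an illustration of the augmented-reality use case, the sketch below estimates how much a face is tilted from its two eye landmarks, e.g. to rotate an overlay so that it follows the eyes. It assumes landmark coordinates are fractions of the image width and height, as in the example response; `eye_roll_degrees` is a hypothetical helper, not part of the API.

```python
import math


def eye_roll_degrees(features, width, height):
    """Estimate the in-plane tilt of a face from the two eye landmarks.

    Coordinates are scaled to pixels first so that non-square images give
    a correct angle. The angle is measured in image coordinates (y axis
    pointing down); 0 means the eyes are level.
    """
    left, right = features["left_eye"], features["right_eye"]
    # In the example response, the face's left eye has the larger x value
    # (it appears on the right-hand side of the image).
    dx = (left["x"] - right["x"]) * width
    dy = (left["y"] - right["y"]) * height
    return math.degrees(math.atan2(dy, dx))


# Eye landmarks taken from the example API response
features = {
    "left_eye": {"x": 0.6438, "y": 0.3634},
    "right_eye": {"x": 0.5578, "y": 0.3714},
}
print(round(eye_roll_degrees(features, 1000, 1000), 1))  # -5.3
```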

Age group Detection

For each detected face, the Face Attribute Model returns a "minor" field that helps you determine whether the face belongs to someone younger or older than 18.

The 18-year threshold corresponds to the legal age of majority (adulthood) in many countries.

The age group is determined solely from the face. Other cues, such as clothing or context, do not influence the result.

The returned value is between 0 and 1. A value close to 1 indicates that the person is likely a minor, while a value close to 0 indicates that the person is likely an adult.

Use-cases

- Remove profile pictures belonging to minors

- Prevent users from posting pictures or videos of their children

- Blur child faces in images or videos

- Group or classify your images based on content
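For the blurring use case, the sketch below picks out the boxes of faces that are likely minors from the `faces` array of a response. The function name and the default threshold of 0.5 are illustrative assumptions, not values recommended by the API.

```python
def faces_to_blur(faces, threshold=0.5):
    """Return the boxes of faces whose "minor" score is at or above threshold.

    `faces` is the "faces" array of an API response. The 0.5 default is an
    illustrative assumption; tune it to your tolerance for errors.
    """
    return [
        (face["x1"], face["y1"], face["x2"], face["y2"])
        for face in faces
        if face["attributes"]["minor"] >= threshold
    ]


faces = [
    {"x1": 0.1, "y1": 0.1, "x2": 0.2, "y2": 0.2,
     "attributes": {"minor": 0.01, "sunglasses": 0.01}},
    {"x1": 0.5, "y1": 0.5, "x2": 0.7, "y2": 0.8,
     "attributes": {"minor": 0.93, "sunglasses": 0.02}},
]
print(faces_to_blur(faces))  # [(0.5, 0.5, 0.7, 0.8)]
```

The boxes keep the response's relative coordinates, so they still need to be scaled to pixels before the blur is applied.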

15-20 year olds

Determining whether someone is 17 or 19 from a single face is difficult. The API is therefore designed to signal low confidence when it encounters faces that look close to 18: in that case, the "minor" value is close to 0.5.
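Because scores near 0.5 mean the model is unsure, one option is to route them to a separate bucket (for example, human review) instead of forcing a yes/no decision. The sketch below does this with two cut-offs; the helper name and the 0.3 and 0.7 values are assumptions chosen for illustration only.

```python
def age_group(minor, low=0.3, high=0.7):
    """Map a "minor" score to "adult", "minor" or "uncertain".

    Scores between `low` and `high` (around 0.5) are treated as uncertain,
    typically faces that look close to 18. The cut-offs are illustrative.
    """
    if minor >= high:
        return "minor"
    if minor <= low:
        return "adult"
    return "uncertain"


for score in (0.01, 0.48, 0.95):
    print(score, age_group(score))
# 0.01 adult
# 0.48 uncertain
# 0.95 minor
```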

Sunglasses

For each detected face, the Face Attribute Model returns a "sunglasses" field that helps you determine whether the person is wearing sunglasses.

The returned value is between 0 and 1. A value close to 1 indicates that the person is wearing sunglasses, while a value close to 0 indicates that they are not.

Use-cases

- Detect if a person is wearing sunglasses

- Prevent users from hiding their faces with sunglasses

- Group or classify your images
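Several of the use cases above can be combined into a single acceptance rule for profile pictures. The sketch below is a hypothetical policy, not an API feature: it accepts a picture only if it contains exactly one face and that face is not wearing sunglasses, using an assumed threshold of 0.5.

```python
def accept_profile_picture(faces, sunglasses_threshold=0.5):
    """Hypothetical rule: exactly one face, and no sunglasses on it.

    `faces` is the "faces" array of an API response. The threshold is an
    illustrative assumption.
    """
    if len(faces) != 1:
        # No face at all, or a group picture
        return False
    return faces[0]["attributes"]["sunglasses"] < sunglasses_threshold


one_face = [{"attributes": {"minor": 0.01, "sunglasses": 0.01}}]
print(accept_profile_picture(one_face))  # True
```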

Limitations

- The limitations applicable to face detection remain relevant

Use the model (images)

If you haven't already, create an account to get your own API keys.

Detect faces in images

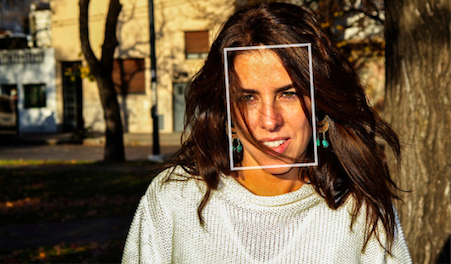

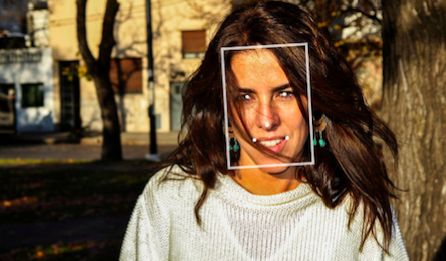

Let's say you want to moderate the following image:

You can either share a URL to the image, or upload the image file.

Option 1: Send image URL

Here's how to proceed if you choose to share the image URL:

```bash
curl -X GET -G 'https://api.sightengine.com/1.0/check.json' \
    -d 'models=face-attributes' \
    -d 'api_user={api_user}&api_secret={api_secret}' \
    --data-urlencode 'url=https://sightengine.com/assets/img/examples/example7.jpg'
```

```python
# this example uses requests
import requests
import json

params = {
    'url': 'https://sightengine.com/assets/img/examples/example7.jpg',
    'models': 'face-attributes',
    'api_user': '{api_user}',
    'api_secret': '{api_secret}'
}
r = requests.get('https://api.sightengine.com/1.0/check.json', params=params)

output = json.loads(r.text)
```

```php
$params = array(
  'url' => 'https://sightengine.com/assets/img/examples/example7.jpg',
  'models' => 'face-attributes',
  'api_user' => '{api_user}',
  'api_secret' => '{api_secret}',
);

// this example uses cURL
$ch = curl_init('https://api.sightengine.com/1.0/check.json?'.http_build_query($params));
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
$response = curl_exec($ch);
curl_close($ch);

$output = json_decode($response, true);
```

```javascript
// this example uses axios
const axios = require('axios');

axios.get('https://api.sightengine.com/1.0/check.json', {
  params: {
    'url': 'https://sightengine.com/assets/img/examples/example7.jpg',
    'models': 'face-attributes',
    'api_user': '{api_user}',
    'api_secret': '{api_secret}',
  }
})
.then(function (response) {
  // on success: handle response
  console.log(response.data);
})
.catch(function (error) {
  // handle error
  if (error.response) console.log(error.response.data);
  else console.log(error.message);
});
```

Request parameters:

| Parameter | Type | Description |
|---|---|---|
| url | string | URL of the image to analyze |
| models | string | comma-separated list of models to apply |
| api_user | string | your API user id |
| api_secret | string | your API secret |

Option 2: Send image file

Here's how to proceed if you choose to upload the image file:

```bash
curl -X POST 'https://api.sightengine.com/1.0/check.json' \
    -F 'media=@/path/to/image.jpg' \
    -F 'models=face-attributes' \
    -F 'api_user={api_user}' \
    -F 'api_secret={api_secret}'
```

```python
# this example uses requests
import requests
import json

params = {
    'models': 'face-attributes',
    'api_user': '{api_user}',
    'api_secret': '{api_secret}'
}
files = {'media': open('/path/to/image.jpg', 'rb')}
r = requests.post('https://api.sightengine.com/1.0/check.json', files=files, data=params)

output = json.loads(r.text)
```

```php
$params = array(
  'media' => new CurlFile('/path/to/image.jpg'),
  'models' => 'face-attributes',
  'api_user' => '{api_user}',
  'api_secret' => '{api_secret}',
);

// this example uses cURL
$ch = curl_init('https://api.sightengine.com/1.0/check.json');
curl_setopt($ch, CURLOPT_POST, true);
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
curl_setopt($ch, CURLOPT_POSTFIELDS, $params);
$response = curl_exec($ch);
curl_close($ch);

$output = json_decode($response, true);
```

```javascript
// this example uses axios and form-data
const axios = require('axios');
const FormData = require('form-data');
const fs = require('fs');

// declare the form with const (an undeclared variable fails in strict mode)
const data = new FormData();
data.append('media', fs.createReadStream('/path/to/image.jpg'));
data.append('models', 'face-attributes');
data.append('api_user', '{api_user}');
data.append('api_secret', '{api_secret}');

axios({
  method: 'post',
  url: 'https://api.sightengine.com/1.0/check.json',
  data: data,
  headers: data.getHeaders()
})
.then(function (response) {
  // on success: handle response
  console.log(response.data);
})
.catch(function (error) {
  // handle error
  if (error.response) console.log(error.response.data);
  else console.log(error.message);
});
```

Request parameters:

| Parameter | Type | Description |
|---|---|---|
| media | file | image to analyze |
| models | string | comma-separated list of models to apply |
| api_user | string | your API user id |
| api_secret | string | your API secret |

API response

The API will then return a JSON response with the following structure:

```json
{
  "status": "success",
  "request": {
    "id": "req_0MsK5ptZx713xt5aRmckl",
    "timestamp": 1494406445.3718,
    "operations": 1
  },
  "faces": [
    {
      "x1": 0.5121,
      "y1": 0.1879,
      "x2": 0.6926,
      "y2": 0.6265,
      "features": {
        "left_eye": {"x": 0.6438, "y": 0.3634},
        "right_eye": {"x": 0.5578, "y": 0.3714},
        "nose_tip": {"x": 0.6047, "y": 0.4801},
        "left_mouth_corner": {"x": 0.6469, "y": 0.5305},
        "right_mouth_corner": {"x": 0.5719, "y": 0.5332}
      },
      "attributes": {
        "minor": 0.01,
        "sunglasses": 0.01
      }
    }
  ],
  "media": {
    "id": "med_0MsK3A6i2vNxQgHkc11j9",
    "uri": "https://sightengine.com/assets/img/examples/example7.jpg"
  }
}
```

Successful Response

Status code: 200, Content-Type: application/json

| Field | Type | Description |
|---|---|---|
| status | string | status of the request, either "success" or "failure" |
| request | object | information about the processed request |
| request.id | string | unique identifier of the request |
| request.timestamp | float | timestamp of the request in Unix time |
| request.operations | integer | number of operations consumed by the request |
| faces | array | detected faces, each with its box (x1, y1, x2, y2), features and attributes |
| media | object | information about the media analyzed |
| media.id | string | unique identifier of the media |
| media.uri | string | URI of the media analyzed: either the URL or the filename |
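The sketch below shows one way to check a response before using it and to reduce each face to the fields most use cases need. It treats a request as failed when the HTTP status code is 4xx/5xx (as listed under Error) or when the `status` field is not "success"; the exception class and function name are hypothetical. With the `requests` example above, it would be called as `summarize_faces(r.status_code, r.text)`.

```python
import json


class SightengineError(Exception):
    """Raised when a request fails (hypothetical exception class)."""


def summarize_faces(status_code, body):
    """Check a check.json response and return one summary per detected face.

    `status_code` is the HTTP status code and `body` the raw JSON text.
    """
    if status_code >= 400:
        raise SightengineError(f"HTTP {status_code}: {body}")
    output = json.loads(body)
    if output.get("status") != "success":
        raise SightengineError(f"request failed: {body}")
    summaries = []
    for face in output.get("faces", []):
        attributes = face["attributes"]
        summaries.append({
            "box": (face["x1"], face["y1"], face["x2"], face["y2"]),
            "minor": attributes["minor"],
            "sunglasses": attributes["sunglasses"],
        })
    return summaries


body = ('{"status": "success", "faces": [{"x1": 0.5121, "y1": 0.1879, '
        '"x2": 0.6926, "y2": 0.6265, '
        '"attributes": {"minor": 0.01, "sunglasses": 0.01}}]}')
print(summarize_faces(200, body))
```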

Error

Status codes: 4xx and 5xx. See how error responses are structured.

Use the model (videos)

Detect faces in videos

Option 1: Short video

Here's how to proceed to analyze a short video (less than 1 minute):

```bash
curl -X POST 'https://api.sightengine.com/1.0/video/check-sync.json' \
    -F 'media=@/path/to/video.mp4' \
    -F 'models=face-attributes' \
    -F 'api_user={api_user}' \
    -F 'api_secret={api_secret}'
```

```python
# this example uses requests
import requests
import json

params = {
    # specify the models you want to apply
    'models': 'face-attributes',
    'api_user': '{api_user}',
    'api_secret': '{api_secret}'
}
files = {'media': open('/path/to/video.mp4', 'rb')}
r = requests.post('https://api.sightengine.com/1.0/video/check-sync.json', files=files, data=params)

output = json.loads(r.text)
```

```php
$params = array(
  'media' => new CurlFile('/path/to/video.mp4'),
  // specify the models you want to apply
  'models' => 'face-attributes',
  'api_user' => '{api_user}',
  'api_secret' => '{api_secret}',
);

// this example uses cURL
$ch = curl_init('https://api.sightengine.com/1.0/video/check-sync.json');
curl_setopt($ch, CURLOPT_POST, true);
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
curl_setopt($ch, CURLOPT_POSTFIELDS, $params);
$response = curl_exec($ch);
curl_close($ch);

$output = json_decode($response, true);
```

```javascript
// this example uses axios and form-data
const axios = require('axios');
const FormData = require('form-data');
const fs = require('fs');

// declare the form with const (an undeclared variable fails in strict mode)
const data = new FormData();
data.append('media', fs.createReadStream('/path/to/video.mp4'));
// specify the models you want to apply
data.append('models', 'face-attributes');
data.append('api_user', '{api_user}');
data.append('api_secret', '{api_secret}');

axios({
  method: 'post',
  url: 'https://api.sightengine.com/1.0/video/check-sync.json',
  data: data,
  headers: data.getHeaders()
})
.then(function (response) {
  // on success: handle response
  console.log(response.data);
})
.catch(function (error) {
  // handle error
  if (error.response) console.log(error.response.data);
  else console.log(error.message);
});
```

Request parameters:

| Parameter | Type | Description |
|---|---|---|
| media | file | video to analyze |
| models | string | comma-separated list of models to apply |
| interval | float | frame interval in seconds, out of 0.5, 1, 2, 3, 4, 5 (optional) |
| api_user | string | your API user id |
| api_secret | string | your API secret |
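The code samples above do not set the optional `interval` parameter. The sketch below builds the form fields with it and rejects values outside the list given in the parameter table; the helper name `video_params` is hypothetical. It could be passed as `data=video_params('{api_user}', '{api_secret}', interval=1)` in the `requests.post` call above.

```python
# Allowed values, as listed in the request parameter table
ALLOWED_INTERVALS = (0.5, 1, 2, 3, 4, 5)


def video_params(api_user, api_secret, interval=None):
    """Build form fields for a video request, optionally with a frame interval."""
    params = {
        "models": "face-attributes",
        "api_user": api_user,
        "api_secret": api_secret,
    }
    if interval is not None:
        if interval not in ALLOWED_INTERVALS:
            raise ValueError(f"interval must be one of {ALLOWED_INTERVALS}")
        # ":g" formats 2.0 as "2" and 0.5 as "0.5"
        params["interval"] = f"{interval:g}"
    return params


print(video_params("{api_user}", "{api_secret}", interval=0.5))
```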

Option 2: Long video

Here's how to proceed to analyze a long video. Note that if the video file is very large, you might first need to upload it through the Upload API.

```bash
curl -X POST 'https://api.sightengine.com/1.0/video/check.json' \
    -F 'media=@/path/to/video.mp4' \
    -F 'models=face-attributes' \
    -F 'callback_url=https://yourcallback/path' \
    -F 'api_user={api_user}' \
    -F 'api_secret={api_secret}'
```

```python
# this example uses requests
import requests
import json

params = {
    # specify the models you want to apply
    'models': 'face-attributes',
    # specify where you want to receive result callbacks
    'callback_url': 'https://yourcallback/path',
    'api_user': '{api_user}',
    'api_secret': '{api_secret}'
}
files = {'media': open('/path/to/video.mp4', 'rb')}
r = requests.post('https://api.sightengine.com/1.0/video/check.json', files=files, data=params)

output = json.loads(r.text)
```

```php
$params = array(
  'media' => new CurlFile('/path/to/video.mp4'),
  // specify the models you want to apply
  'models' => 'face-attributes',
  // specify where you want to receive result callbacks
  'callback_url' => 'https://yourcallback/path',
  'api_user' => '{api_user}',
  'api_secret' => '{api_secret}',
);

// this example uses cURL
$ch = curl_init('https://api.sightengine.com/1.0/video/check.json');
curl_setopt($ch, CURLOPT_POST, true);
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
curl_setopt($ch, CURLOPT_POSTFIELDS, $params);
$response = curl_exec($ch);
curl_close($ch);

$output = json_decode($response, true);
```

```javascript
// this example uses axios and form-data
const axios = require('axios');
const FormData = require('form-data');
const fs = require('fs');

// declare the form with const (an undeclared variable fails in strict mode)
const data = new FormData();
data.append('media', fs.createReadStream('/path/to/video.mp4'));
// specify the models you want to apply
data.append('models', 'face-attributes');
// specify where you want to receive result callbacks
data.append('callback_url', 'https://yourcallback/path');
data.append('api_user', '{api_user}');
data.append('api_secret', '{api_secret}');

axios({
  method: 'post',
  url: 'https://api.sightengine.com/1.0/video/check.json',
  data: data,
  headers: data.getHeaders()
})
.then(function (response) {
  // on success: handle response
  console.log(response.data);
})
.catch(function (error) {
  // handle error
  if (error.response) console.log(error.response.data);
  else console.log(error.message);
});
```

Request parameters:

| Parameter | Type | Description |
|---|---|---|
| media | file | video to analyze |
| callback_url | string | callback URL to receive moderation updates (optional) |
| models | string | comma-separated list of models to apply |
| interval | float | frame interval in seconds, out of 0.5, 1, 2, 3, 4, 5 (optional) |
| api_user | string | your API user id |
| api_secret | string | your API secret |
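To receive results at `callback_url`, you need an HTTP endpoint of your own. The sketch below uses Python's standard `http.server` module and assumes, without confirmation from this page, that callbacks arrive as POST requests whose JSON body is shaped like the moderation result described under "Moderation result", with frames under `data.frames`. The handler, `parse_callback`, `run` and port 8000 are all hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def parse_callback(raw_body):
    """Decode a callback body and return its list of analyzed frames.

    Assumes the body is JSON with frames under data.frames.
    """
    payload = json.loads(raw_body)
    return payload.get("data", {}).get("frames", [])


class CallbackHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        frames = parse_callback(self.rfile.read(length))
        print(f"received {len(frames)} frame(s)")
        # Acknowledge receipt quickly; do heavier processing elsewhere.
        self.send_response(200)
        self.end_headers()


def run(port=8000):
    """Start a blocking server; expose it at the URL given as callback_url."""
    HTTPServer(("", port), CallbackHandler).serve_forever()
```

Calling `run()` starts the server and blocks. In production you would typically run this behind a proper web server with HTTPS, since the callback URL must be reachable from the internet.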

Option 3: Live-stream

Here's how to proceed to analyze a live-stream:

```bash
curl -X GET -G 'https://api.sightengine.com/1.0/video/check.json' \
    --data-urlencode 'stream_url=https://domain.tld/path/video.m3u8' \
    -d 'models=face-attributes' \
    -d 'callback_url=https://your.callback.url/path' \
    -d 'api_user={api_user}' \
    -d 'api_secret={api_secret}'
```

```python
# this example uses requests
import requests
import json

params = {
    'stream_url': 'https://domain.tld/path/video.m3u8',
    # specify the models you want to apply
    'models': 'face-attributes',
    # specify where you want to receive result callbacks
    'callback_url': 'https://your.callback.url/path',
    'api_user': '{api_user}',
    'api_secret': '{api_secret}'
}
r = requests.post('https://api.sightengine.com/1.0/video/check.json', data=params)

output = json.loads(r.text)
```

```php
$params = array(
  'stream_url' => 'https://domain.tld/path/video.m3u8',
  // specify the models you want to apply
  'models' => 'face-attributes',
  // specify where you want to receive result callbacks
  'callback_url' => 'https://your.callback.url/path',
  'api_user' => '{api_user}',
  'api_secret' => '{api_secret}',
);

// this example uses cURL
$ch = curl_init('https://api.sightengine.com/1.0/video/check.json');
curl_setopt($ch, CURLOPT_POST, true);
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
curl_setopt($ch, CURLOPT_POSTFIELDS, $params);
$response = curl_exec($ch);
curl_close($ch);

$output = json_decode($response, true);
```

```javascript
// this example uses axios and form-data
const axios = require('axios');
const FormData = require('form-data');

// declare the form with const (an undeclared variable fails in strict mode)
const data = new FormData();
data.append('stream_url', 'https://domain.tld/path/video.m3u8');
// specify the models you want to apply
data.append('models', 'face-attributes');
// specify where you want to receive result callbacks
data.append('callback_url', 'https://your.callback.url/path');
data.append('api_user', '{api_user}');
data.append('api_secret', '{api_secret}');

axios({
  method: 'post',
  url: 'https://api.sightengine.com/1.0/video/check.json',
  data: data,
  headers: data.getHeaders()
})
.then(function (response) {
  // on success: handle response
  console.log(response.data);
})
.catch(function (error) {
  // handle error
  if (error.response) console.log(error.response.data);
  else console.log(error.message);
});
```

Request parameters:

| Parameter | Type | Description |
|---|---|---|
| stream_url | string | URL of the video stream |
| callback_url | string | callback URL to receive moderation updates (optional) |
| models | string | comma-separated list of models to apply |
| interval | float | frame interval in seconds, out of 0.5, 1, 2, 3, 4, 5 (optional) |
| api_user | string | your API user id |
| api_secret | string | your API secret |

Moderation result

The moderation result is provided either directly in the request response (for sync calls, see below) or through the callback URL you provided (for async calls).

Here is the structure of the JSON response with moderation results for each analyzed frame under the data.frames array:

```json
{
  "status": "success",
  "request": {
    "id": "req_gmgHNy8oP6nvXYaJVLq9n",
    "timestamp": 1717159864.348989,
    "operations": 21
  },
  "data": {
    "frames": [
      {
        "info": {
          "id": "med_gmgHcUOwe41rWmqwPhVNU_1",
          "position": 0
        },
        "faces": [
          {
            "x1": 0.5121,
            "y1": 0.1879,
            "x2": 0.6926,
            "y2": 0.6265,
            "features": {
              "left_eye": {"x": 0.6438, "y": 0.3634},
              "right_eye": {"x": 0.5578, "y": 0.3714},
              "nose_tip": {"x": 0.6047, "y": 0.4801},
              "left_mouth_corner": {"x": 0.6469, "y": 0.5305},
              "right_mouth_corner": {"x": 0.5719, "y": 0.5332}
            },
            "attributes": {
              "minor": 0.01,
              "sunglasses": 0.01
            }
          }
        ]
      },
      ...
    ]
  },
  "media": {
    "id": "med_gmgHcUOwe41rWmqwPhVNU",
    "uri": "yourfile.mp4"
  }
}
```

You can use the faces array of each frame to analyze the faces detected in the video.
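For example, the sketch below walks through `data.frames` and lists the frames that contain at least one likely minor, together with how many such faces each frame has. The function name and the 0.5 threshold are illustrative assumptions, and `info.position` is reported as-is, since this page does not state its unit.

```python
def frames_with_minors(result, threshold=0.5):
    """Return (position, count) for each frame with at least one likely minor."""
    hits = []
    for frame in result["data"]["frames"]:
        minors = [
            face for face in frame.get("faces", [])
            if face["attributes"]["minor"] >= threshold
        ]
        if minors:
            hits.append((frame["info"]["position"], len(minors)))
    return hits


result = {"data": {"frames": [
    {"info": {"id": "a", "position": 0},
     "faces": [{"attributes": {"minor": 0.01, "sunglasses": 0.01}}]},
    {"info": {"id": "b", "position": 3},
     "faces": [{"attributes": {"minor": 0.9, "sunglasses": 0.1}},
               {"attributes": {"minor": 0.8, "sunglasses": 0.1}}]},
    {"info": {"id": "c", "position": 6}},
]}}
print(frames_with_minors(result))  # [(3, 2)]
```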