Is it possible to customize the Image Moderation API to my needs?

There is no one-size-fits-all solution with Image Moderation. Expectations will vary between countries, cultures and applications.

This is why we have worked to make our API endpoints and models flexible and suitable to most needs.

You choose the models you want to apply

As you may know, we have multiple moderation models available. When you send a request to the API, you choose which models to apply and how each model's output is used to decide whether an image should be approved, rejected or reviewed. See our Model Reference to learn more about each model's output.

Each model returns fine-grained results

Our models do not return simple binary classifications (such as 'nudity' or 'no nudity'). They send you more information so that you can make a fine-grained decision on how to handle each case.

The Nudity Detection model, for instance, tells you what level of nudity it has encountered. When faced with suggestive content, it tells you exactly what the image contains, so that you can take appropriate action, or simply accept the photo.

In other words, you don't need to worry about how images get handled when they contain borderline nudity. You can simply define an action for each possible situation:

| Detected content | Your decision |
| --- | --- |
| Woman in bikini | Accept, Reject or Review? |
| Bare male chest | Accept, Reject or Review? |
| Man in underwear | Accept, Reject or Review? |
| Woman in lingerie | Accept, Reject or Review? |
| Suggestive cleavage | Accept, Reject or Review? |
| Miniskirt | Accept, Reject or Review? |
| and so on... | see more details |

This applies to Nudity Detection but also to all the other available models.
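As a rough illustration of defining one action per situation, the sketch below maps detected classes to decisions. The class names are taken from the nudity-2.1 row of the feedback table further down; the `Action` enum, the `POLICY` mapping and the `action_for` helper are hypothetical names chosen for this example, and the decisions shown are placeholders rather than recommendations.

```python
from enum import Enum


class Action(Enum):
    ACCEPT = "accept"
    REJECT = "reject"
    REVIEW = "review"


# Hypothetical policy: each application picks its own decisions.
POLICY = {
    "bikini": Action.ACCEPT,
    "male_chest": Action.ACCEPT,
    "male_underwear": Action.REVIEW,
    "lingerie": Action.REVIEW,
    "cleavage": Action.ACCEPT,
    "miniskirt": Action.ACCEPT,
    "erotica": Action.REJECT,
    "sexual_display": Action.REJECT,
    "sexual_activity": Action.REJECT,
}


def action_for(detected_class, policy=POLICY, default=Action.REVIEW):
    """Return the configured action, or send unlisted classes to review."""
    return policy.get(detected_class, default)
```

Falling back to `Action.REVIEW` for classes missing from the policy is a design choice for this sketch: anything you have not explicitly decided on goes to a human instead of being silently accepted.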

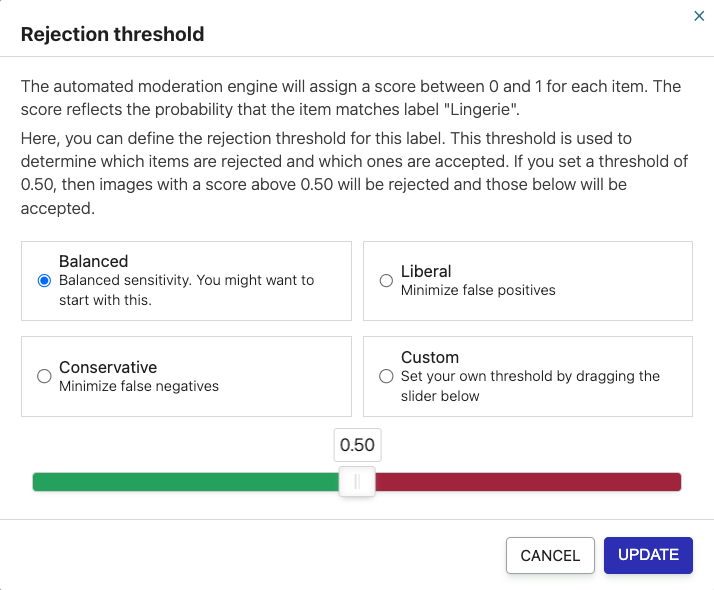

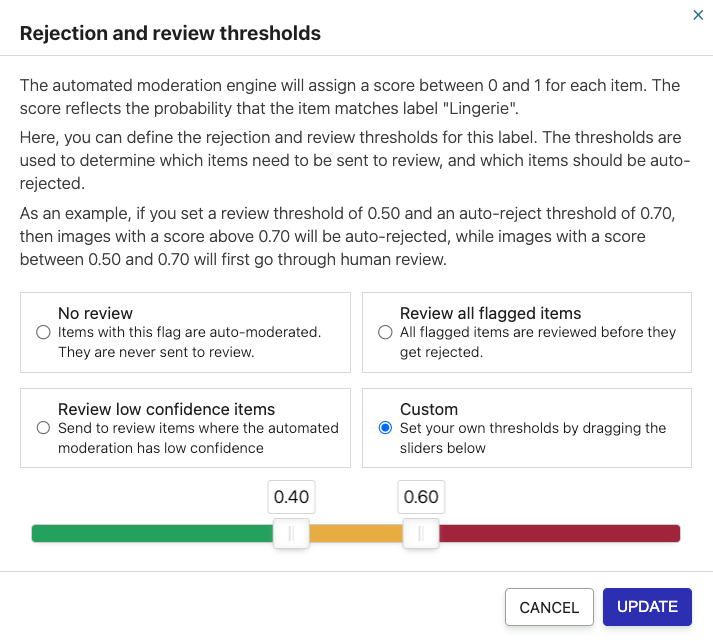

Each moderation category is associated with a score

A score between 0 and 1 is returned for each moderation category. This value reflects the model’s confidence. A score closer to 1 indicates that the image has a higher probability of containing what the model is looking for.

With these scores, you can define thresholds to help you decide how to handle the image you submitted. With Fully Automated Moderation, for example, you can automatically reject an image if its score is above a predefined value.

With Hybrid Moderation, you can also send an image to review if its score falls between two predefined thresholds.
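The threshold logic described above can be sketched as follows. This is a minimal example, not part of the API: the function name `decide` and the default threshold values 0.8 and 0.4 are assumptions made for illustration, and you would tune the thresholds to your own content and risk tolerance.

```python
def decide(score, reject_above=0.8, review_above=0.4):
    """Turn a model score in [0, 1] into 'accept', 'review' or 'reject'.

    Threshold values are illustrative assumptions, not recommended settings.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if review_above > reject_above:
        raise ValueError("review_above must not exceed reject_above")
    if score >= reject_above:
        return "reject"
    if score >= review_above:
        return "review"
    return "accept"
```

Setting both thresholds to the same value removes the review band, which corresponds to the fully automated case: `decide(0.5, reject_above=0.8, review_above=0.8)` accepts the image, while the default hybrid setup would send it to review.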

You can create custom disallow lists

Disallow Lists help you block specific images by fingerprinting them and preventing them from (re)appearing on your site or app. This is useful to make sure known copyrighted images, illegal images, or previously removed images do not get re-uploaded to your properties.

Once you add an image to the list, any near-duplicate image will be detected.

The steps to set up and use an Image Disallow List are the following:

- Create an Image Disallow List from your dashboard.

- Add images to the disallow list (through the dashboard or through the API).

- Check new and existing images against the disallow list.

To get more information on how to use Image Disallow Lists, check the documentation.

You can send images to the Feedback API

Our Image Moderation models have been trained to achieve best-in-class accuracy, yet you might want to report images you believe were misclassified. To do that, you can submit the image to the Feedback API.

Submitted images are used to continuously improve the models, so your feedback is valuable! It can also be used to progressively train custom models adapted to your specific needs, if you so wish.

To send an image to the Feedback API, you will need the image itself, the name of the model and the expected class (or the unknown label if the expected class is not available). Here are the available classes:

| Model | Possible classes |
| --- | --- |
| nudity | safe partial raw bikini chest cleavage lingerie unknown |
| nudity-2.0 | safe sexual_activity sexual_display erotica bikini male_chest cleavage lingerie miniskirt male_underwear unknown |
| nudity-2.1 | safe sexual_activity sexual_display erotica bikini male_chest cleavage lingerie miniskirt male_underwear unknown |
| gore | safe gore unknown |
| gore-2.0 | safe gore unknown |
| type | photo illustration unknown |
| face | none single multiple unknown |
| wad | no-weapons weapons no-alcohol alcohol no-drugs drugs unknown |
| weapon | no-weapons weapons unknown |
| recreational_drug | no-drugs drugs unknown |
| alcohol | no-alcohol alcohol unknown |
| text | no-text text-artificial text-natural unknown |
| offensive | not-offensive offensive unknown |
| offensive-2.0 | not-offensive nazi terrorist confederate supremacist asian_swastika offensive unknown |
| scam | not-scam scam unknown |
| tobacco | no-tobacco tobacco unknown |
| gambling | no-gambling gambling unknown |
| money | no-money money unknown |
| text-content | safe unsafe unknown |
| text-content-2.0 | safe unsafe unknown |
| qr-content | safe unsafe unknown |
| military | not-military military unknown |
| destruction | not-destruction destruction unknown |
| genai | ai not-ai |
| people-counting | 0 1 2 3 4 5+ unknown |
| unknown | unknown miss safe |
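Before submitting feedback, it can help to check that the class you are about to report is valid for the chosen model. The sketch below does only that, using a few rows copied from the table above. It sends no request: the `feedback_fields` helper and the `model` / `class` field names are assumptions made for this example, so check the Feedback API documentation for the actual request format.

```python
# A subset of the model/class table above; extend it with the models you use.
FEEDBACK_CLASSES = {
    "nudity-2.1": {
        "safe", "sexual_activity", "sexual_display", "erotica", "bikini",
        "male_chest", "cleavage", "lingerie", "miniskirt", "male_underwear",
        "unknown",
    },
    "gore-2.0": {"safe", "gore", "unknown"},
    "weapon": {"no-weapons", "weapons", "unknown"},
    "genai": {"ai", "not-ai"},
}


def feedback_fields(model, expected_class=None):
    """Validate a (model, class) pair and return it as a dict of fields.

    Field names are hypothetical. When no expected class is given, the
    'unknown' label is used, provided the model lists it.
    """
    if model not in FEEDBACK_CLASSES:
        raise ValueError(f"model not in local table: {model!r}")
    label = "unknown" if expected_class is None else expected_class
    if label not in FEEDBACK_CLASSES[model]:
        raise ValueError(f"class {label!r} is not valid for model {model!r}")
    return {"model": model, "class": label}
```

Note that the genai row lists no unknown label, so this helper raises an error for `feedback_fields("genai")` instead of guessing a class.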

Sending a request to the Feedback API is very similar to sending one to the Image Moderation API. See our documentation for code examples.

Please note that submitting an image to the Feedback API does not count as an operation and will not impact your billing.

Need more?

If you believe you need more customization than is provided out of the box, for instance new detection capabilities or custom decision filters, please get in touch.

Other frequent questions

- What is a model?

- What are the ways to send an image to the API?

- What image types are supported?

- What are the acceptable / recommended image dimensions?

- How do you process GIF images with multiple frames (animated GIFs)?

- Do you use image metadata to moderate images?

- Is it possible to perform bulk image moderation / to submit images in batch? How many images can be submitted at once?

- Can you detect duplicate images, or image spam?

- Can you improve the moderation results based on my reports of incorrectly classified or mislabeled images?